MHA GTID based failover代碼解析

作為以下文章的補充,說明MHA GTID based failover的處理流程。

http://blog.chinaunix.net/uid-20726500-id-5700631.html

MHA判斷是GTID based failover需要滿足下面3個條件(參考函數get_gtid_status)

所有節點gtid_mode=1

所有節點Executed_Gtid_Set不為空

至少一個節點Auto_Position=1

GTID basedMHA故障切換

- MHA::MasterFailover::main()

- ->do_master_failover

- Phase 1: Configuration Check Phase

- -> check_settings:

- check_node_version:查看MHA的版本信息

- connect_all_and_read_server_status:確認各個node的MySQL實例是否可以連接

- get_dead_servers/get_alive_servers/get_alive_slaves:double check各個node的死活狀態

- start_sql_threads_if:查看Slave_SQL_Running是否為Yes,若不是則啟動SQL thread

- Phase 2: Dead Master Shutdown Phase:對於我們來說,唯一的作用就是stop IO thread

- -> force_shutdown($dead_master):

- stop_io_thread:所有slave的IO thread stop掉(將stop掉master)

- force_shutdown_internal(實際上就是執行配置文件中的master_ip_failover_script/shutdown_script,若無則不執行):

- master_ip_failover_script:如果設置了VIP,則首先切換VIP

- shutdown_script:如果設置了shutdown腳本,則執行

- Phase 3: Master Recovery Phase

- -> Phase 3.1: Getting Latest Slaves Phase(取得latest slave)

- read_slave_status:取得各個slave的binlog file/position

- check_slave_status:調用"SHOW SLAVE STATUS"來取得slave的如下信息:

- Slave_IO_State, Master_Host,

- Master_Port, Master_User,

- Slave_IO_Running, Slave_SQL_Running,

- Master_Log_File, Read_Master_Log_Pos,

- Relay_Master_Log_File, Last_Errno,

- Last_Error, Exec_Master_Log_Pos,

- Relay_Log_File, Relay_Log_Pos,

- Seconds_Behind_Master, Retrieved_Gtid_Set,

- Executed_Gtid_Set, Auto_Position

- Replicate_Do_DB, Replicate_Ignore_DB, Replicate_Do_Table,

- Replicate_Ignore_Table, Replicate_Wild_Do_Table,

- Replicate_Wild_Ignore_Table

- identify_latest_slaves:

- 通過比較各個slave中的Master_Log_File/Read_Master_Log_Pos,來找到latest的slave

- identify_oldest_slaves:

- 通過比較各個slave中的Master_Log_File/Read_Master_Log_Pos,來找到oldest的slave

- -> Phase 3.2: Determining New Master Phase

- get_most_advanced_latest_slave:找到(Relay_Master_Log_File,Exec_Master_Log_Pos)最靠前的Slave

- select_new_master:選出新的master節點

- If preferred node is specified, one of active preferred nodes will be new master.

- If the latest server behinds too much (i.e. stopping sql thread for online backups),

- we should not use it as a new master, we should fetch relay log there. Even though preferred

- master is configured, it does not become a master if it's far behind.

get_candidate_masters:

就是配置文件中配置了candidate_master>0的節點

get_bad_candidate_masters:

# The following servers can not be master:

# - dead servers

# - Set no_master in conf files (i.e. DR servers)

# - log_bin is disabled

# - Major version is not the oldest

# - too much replication delay(slave與master的binlog position差距大於100000000)

Searching from candidate_master slaves which have received the latest relay log events

if NOT FOUND:

Searching from all candidate_master slaves

if NOT FOUND:

Searching from all slaves which have received the latest relay log events

if NOT FOUND:

Searching from all slaves

-> Phase 3.3: Phase 3.3: New Master Recovery Phase

recover_master_gtid_internal:

wait_until_relay_log_applied

stop_slave

如果new master不是擁有最新relay的Slave

$latest_slave->wait_until_relay_log_applied:等待直到最新relay的Slave上Exec_Master_Log_Pos等於Read_Master_Log_Pos

change_master_and_start_slave( $target, $latest_slave)

wait_until_in_sync( $target, $latest_slave )

save_from_binlog_server:

遍歷所有binary server,執行save_binary_logs --command=save獲取後面的binlog

apply_binlog_to_master:

應用從binary server上獲取的binlog(如果有的話)

如果設置了master_ip_failover_script,調用$master_ip_failover_script --command=start進行啟用vip

如果未設置skip_disable_read_only,設置read_only=0

Phase 4: Slaves Recovery Phase

recover_slaves_gtid_internal

-> Phase 4.1: Starting Slaves in parallel

對所有Slave執行change_master_and_start_slave

如果設置了wait_until_gtid_in_sync,通過"SELECT WAIT_UNTIL_SQL_THREAD_AFTER_GTIDS(?,0)"等待Slave數據同步

Phase 5: New master cleanup phase

reset_slave_on_new_master

清理New Master其實就是重置slave info,即取消原來的Slave信息。至此整個Master故障切換過程完成

啟用GTID時的在線切換流程和不啟用GTID時一樣(唯一不同的是執行的change master語句),所以省略。

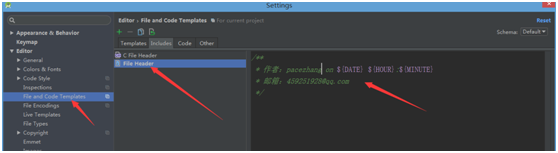

注釋設置,eclipse設置注釋模板

注釋設置,eclipse設置注釋模板

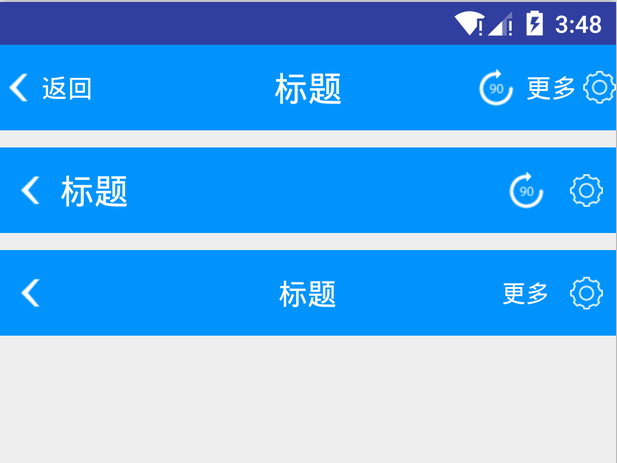

Android自定義標題TitleView,androidtitleview

Android自定義標題TitleView,androidtitleview

Android快樂貪吃蛇游戲實戰項目開發教程-03虛擬方向鍵(二)繪制一個三角形,android-03

Android快樂貪吃蛇游戲實戰項目開發教程-03虛擬方向鍵(二)繪制一個三角形,android-03

【原創】StickHeaderListView的簡單實現,解決footerView問題,stickheaderlistview

【原創】StickHeaderListView的簡單實現,解決footerView問題,stickheaderlistview