Editor: 關於Android編程 (About Android Programming)

1. Java implementation: VtActivity.java

package com.whbs.vt;

import java.io.IOException;

import android.app.Activity;

import android.graphics.Bitmap;

import android.graphics.Canvas;

import android.graphics.PixelFormat;

import android.hardware.Camera;

import android.hardware.Camera.PreviewCallback;

import android.os.Bundle;

import android.util.Log;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

import android.view.Window;

import android.view.WindowManager;

import android.view.SurfaceHolder.Callback;

import android.widget.ImageView;

public class VtActivity extends Activity implements Callback,PreviewCallback{

private final static String TAG = "VtActivity";

boolean isBack = false;

Camera mCamera;

SurfaceView mRemoteView;

SurfaceHolder mRemoteSurfaceHolder;

SurfaceView mLocalView;

SurfaceHolder mLocalSurfaceHolder;

ImageView imageView;

boolean isStartPrview = false;

int previewWith = 176,privateHeight = 144;

/** Called when the activity is first created. */

@Override

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

final Window win = getWindow();

win.setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN, WindowManager.LayoutParams.FLAG_FULLSCREEN);

requestWindowFeature(Window.FEATURE_NO_TITLE);

// Keep the screen on

win.addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

setContentView(R.layout.main_2);

mRemoteView = (SurfaceView)findViewById(R.id.remoteView);

mLocalView = (SurfaceView)findViewById(R.id.localView);

mLocalSurfaceHolder = mLocalView.getHolder();

mRemoteSurfaceHolder = mRemoteView.getHolder();

mLocalSurfaceHolder.addCallback(this);

mLocalSurfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

// mLocalSurfaceHolder.setFormat(PixelFormat.TRANSPARENT);

mRemoteSurfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_GPU);

mRemoteSurfaceHolder.setFormat(PixelFormat.TRANSPARENT);

}

@Override

protected void onResume() {

super.onResume();

Log.i(TAG, "[onResume]isBack ="+isBack);

isBack = false;

}

@Override

protected void onPause() {

Log.i(TAG, "[onPause]");

isBack = true;

super.onPause();

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

Log.i(TAG, "[surfaceCreated]");

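// Camera id 1 is typically the front-facing camera; open() throws RuntimeException if that camera cannot be opened.

mCamera = Camera.open(1);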

mCamera.setPreviewCallback(this);

mCamera.setDisplayOrientation(90);

try{

mCamera.setPreviewDisplay(mLocalSurfaceHolder);

}catch(IOException e){

mCamera.release();

mCamera = null;

}

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width,

int height) {

Log.i(TAG, "[surfaceChanged]");

if(mCamera == null){return;}

Camera.Parameters param = mCamera.getParameters();

param.setPreviewSize(privateHeight,previewWith); // setPreviewSize(width, height): requests 144 x 176, the source size the rotation loops expect

param.setPreviewFrameRate(15);

mCamera.setParameters(param);

mCamera.startPreview();

isStartPrview = true;

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

Log.i(TAG, "[surfaceDestroyed]");

if(mCamera != null){

mCamera.setPreviewCallback(null);

mCamera.stopPreview();

mCamera.release();

mCamera = null;

}

isStartPrview = false;

}

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

if(isStartPrview && !isBack){

int w = previewWith;//camera.getParameters().getPreviewSize().width;

int h = privateHeight;//camera.getParameters().getPreviewSize().height;

data = draw90yuv(data,w,h);

drawRemoteVide(data,w,h);

}

}

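/**

* Rotates a YUV420 frame by 90 degrees. Both rotation methods below (draw90yuv and draw90yuv2) work.

* @param data source frame

* @param w width of the rotated output frame (the loops hard-code 176)

* @param h height of the rotated output frame (the loops hard-code 144)

* @return the rotated frame

*/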

private synchronized byte[] draw90yuv(byte[] data,int w,int h){

// w = 3;

// h=4;

byte[] temp = new byte[w*h*3/2];

int n=0,s=0;

// Log.i(TAG, " data:"+data.length+",temp:"+temp.length);

//YYYYYYYYYYYYYYYYYYYYYYYYY

n=176-1;//+16;

s=0;

for(int i=0;i<144;i++){

for(int j=0;j<176;j++){

// Log.i(TAG, "YY: temp:"+temp.length+",n:"+n);

temp[n] = data[s];

--n;

s +=144;

}

s=i+1;

n +=(176+176);

}

//UUUUUUUUUUUUUUUUUUUUUUUUU

n=w*h+88-1;

s=w*h;

for(int i=0;i<72;i++){

for(int j=0;j<88;j++){

// Log.i(TAG, "UUU temp:"+temp.length+",s:"+s);

temp[n] = data[s];

--n;

s +=72;

}

s=w*h+i+1;

n +=(88+88);

}

//VVVVVVVVVVVVVVVVVVVVVVVVVV

n=w*h*5/4+88-1;

s=w*h*5/4;

for(int i=0;i<72;i++){

for(int j=0;j<88;j++){

// Log.i(TAG, "VVV temp:"+temp.length+",n:"+n+",s="+s);

temp[n] = data[s];

--n;

s+=72;

}

s=w*h*5/4+i+1;

n +=(88+88);

}

return temp;

}

private synchronized byte[] draw90yuv2(byte[] data,int w,int h){

// w = 3;

// h=4;

byte[] temp = new byte[w*h*3/2];

int n=0,s=0;

// Log.i(TAG, " data:"+data.length+",temp:"+temp.length);

//YYYYYYYYYYYYYYYYYYYYYYYYY

for(int i=0;i<144;i++){

for(int j=176-1;j>=0;j--){

// Log.i(TAG, "YY: temp:"+temp.length+",s:"+s+",n="+n);

temp[n++] = data[j*144+i];

}

}

//UUUUUUUUUUUUUUUUUUUUUUUUU

s=w*h;

for(int i=0;i<72;i++){

for(int j=88-1;j>=0;j--){

// Log.i(TAG, "UUU temp:"+temp.length+",s:"+s);

temp[n++] = data[s+72*j+i];

}

}

//VVVVVVVVVVVVVVVVVVVVVVVVVV

s=w*h*5/4;

for(int i=0;i<72;i++){

for(int j=88-1;j>=0;j--){

// Log.i(TAG, "VVV temp:"+temp.length+",s:"+s);

temp[n++] = data[s+72*j+i];

}

}

//

return temp;

}

private void drawRemoteVide(final byte[] imageData,int w,int h){

int[] rgb = decodeYUV420SP(imageData, w, h);

Bitmap bmp = Bitmap.createBitmap(rgb, w, h, Bitmap.Config.ARGB_8888);

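// The decoded image and remoteView differ in size, so drawing the bitmap as-is would show only part of the picture. To show the full image, scale the bitmap to the view size: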

bmp = Bitmap.createScaledBitmap(bmp, mRemoteView.getWidth(), mRemoteView.getHeight(), true);

// System.out.println("mRemoteView.getWidth()="+mRemoteView.getWidth()+",mRemoteView.getHeight():"+ mRemoteView.getHeight());

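Canvas canvas = mRemoteSurfaceHolder.lockCanvas();

// lockCanvas() returns null if the surface has not been created or cannot be edited

if(canvas == null){return;}

canvas.drawBitmap(bmp, 0,0, null);

mRemoteSurfaceHolder.unlockCanvasAndPost(canvas);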

}

/*

* Converts a YUV420 frame to RGB (one ARGB int per pixel)

*/

public int[] decodeYUV420SP(byte[] yuv420sp, int width, int height) {

final int frameSize = width * height;

int rgb[] = new int[width * height];

for (int j = 0, yp = 0; j < height; j++) {

int uvp = frameSize + (j >> 1) * width, u = 0, v = 0;

for (int i = 0; i < width; i++, yp++) {

int y = (0xff & ((int) yuv420sp[yp])) - 16;

if (y < 0) y = 0;

if ((i & 1) == 0) {

v = (0xff & yuv420sp[uvp++]) - 128;

u = (0xff & yuv420sp[uvp++]) - 128;

}

int y1192 = 1192 * y;

int r = (y1192 + 1634 * v);

int g = (y1192 - 833 * v - 400 * u);

int b = (y1192 + 2066 * u);

if (r < 0) r = 0;

else if (r > 262143) r = 262143;

if (g < 0) g = 0;

else if (g > 262143) g = 262143;

if (b < 0) b = 0;

else if (b > 262143) b = 262143;

rgb[yp] = 0xff000000 | ((r << 6) & 0xff0000) | ((g >> 2) &

0xff00) | ((b >> 10) & 0xff);

}

}

return rgb;

}

}
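
The two rotation methods above hard-code the 176 x 144 frame size. As a minimal sketch (not the author's code), the same index mapping as draw90yuv2 can be written for arbitrary even dimensions. The class name YuvRotate and its method names are hypothetical. The sketch assumes a planar I420 layout (Y plane, then U plane, then V plane), which is the layout the rotation loops above index into. Android's default camera preview format is NV21, whose chroma bytes are interleaved, so real preview data may need a different chroma loop.

```java
// Hypothetical helper, not part of the original listing.
final class YuvRotate {

    // Rotates one plane 90 degrees clockwise.
    // The source plane is srcW x srcH; the result is srcH wide and srcW tall.
    static void rotatePlane90(byte[] src, int srcOff, byte[] dst, int dstOff,
                              int srcW, int srcH) {
        int n = dstOff;
        // Each source column, read bottom to top, becomes one output row.
        for (int col = 0; col < srcW; col++) {
            for (int row = srcH - 1; row >= 0; row--) {
                dst[n++] = src[srcOff + row * srcW + col];
            }
        }
    }

    // Rotates a whole I420 frame (assumed layout: Y, then U, then V).
    // srcW and srcH are assumed to be even.
    static byte[] rotateI420Clockwise(byte[] src, int srcW, int srcH) {
        int ySize = srcW * srcH;
        int cW = srcW / 2;   // chroma planes are subsampled 2x in each direction
        int cH = srcH / 2;
        int cSize = cW * cH;
        byte[] dst = new byte[ySize + 2 * cSize];
        rotatePlane90(src, 0, dst, 0, srcW, srcH);                      // Y
        rotatePlane90(src, ySize, dst, ySize, cW, cH);                  // U
        rotatePlane90(src, ySize + cSize, dst, ySize + cSize, cW, cH);  // V
        return dst;
    }
}
```

Calling YuvRotate.rotateI420Clockwise(data, 144, 176) walks the buffer in the same order as the hard-coded loops in draw90yuv2, so the sizes need to be written in only one place.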

2. XML layout: main_2.xml

<?xml version="1.0" encoding="utf-8"?>

<AbsoluteLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/layoutMain"

>

<SurfaceView android:id="@+id/localView"

android:layout_width="176dip"

android:layout_height="144dip"

/>

<!--

<TextView android:id="@+id/txt"

android:layout_width="fill_parent"

android:layout_height="wrap_content"

android:text="ddddd"

/>

-->

<SurfaceView android:id="@+id/remoteView"

android:layout_x="0dip"

android:layout_y="0dip"

android:layout_width="432dip"

android:layout_height="531dip"

/>

</AbsoluteLayout>

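3. Known defects

1. When the screen is turned off and back on, the small (local) preview disappears. The cause is unknown.

2. If the small view (SurfaceView localView) is declared after SurfaceView remoteView in the layout, the localView picture does appear after the screen is turned off and back on, but it is missing when the app first starts.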

android控件之間事件傳遞

android控件之間事件傳遞

public boolean dispatchTouchEvent(MotionEvent ev){} 用於事件的分發,Android中所有的事件都必須經

Android DigitalClock組件用法實例

Android DigitalClock組件用法實例

本文實例講述了Android DigitalClock組件用法。分享給大家供大家參考,具體如下:DigitalClock組件的使用很簡單,先看看效果圖:DigitalCl

AndroidSDK Support自帶夜間、日間模式切換詳解

AndroidSDK Support自帶夜間、日間模式切換詳解

寫這篇博客的目的就是教大家利用AndroidSDK自帶的support lib來實現APP日間/夜間模式的切換,最近看到好多帖子在做關於這個日夜間模式切換的開源項目,其實

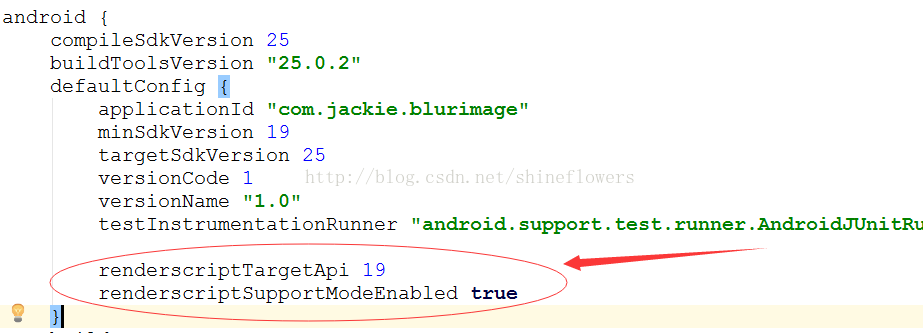

Android實現動態高斯模糊效果示例代碼

Android實現動態高斯模糊效果示例代碼

寫在前面現在,越來越多的App裡面使用了模糊效果,這種模糊效果稱之為高斯模糊。大家都知道,在Android平台上進行模糊渲染是一個相當耗CPU也相當耗時的操作