
import android.os.Parcel;
import android.os.Parcelable;

public class MyParcelable implements Parcelable {
    private int mData;
    public int describeContents() {
        return 0;
    }
    public void writeToParcel(Parcel out, int flags) {
        out.writeInt(mData);
    }
    public static final Parcelable.Creator<MyParcelable> CREATOR
            = new Parcelable.Creator<MyParcelable>() {
        public MyParcelable createFromParcel(Parcel in) {
            return new MyParcelable(in);
        }
        public MyParcelable[] newArray(int size) {
            return new MyParcelable[size];
        }
    };
    private MyParcelable(Parcel in) {
        mData = in.readInt();
    }
}
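The key rule in MyParcelable is that the private constructor must read fields in exactly the order writeToParcel wrote them. Below is a minimal C++ sketch of that rule. It assumes a hypothetical SimpleParcel class (not android::Parcel) that stores 32-bit integers in a flat byte buffer with a read cursor. MyData is likewise a hypothetical stand-in for MyParcelable.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for android::Parcel: a flat byte buffer plus a read position.
class SimpleParcel {
public:
    void writeInt32(int32_t v) {
        const auto* p = reinterpret_cast<const uint8_t*>(&v);
        data_.insert(data_.end(), p, p + sizeof(v));
    }
    int32_t readInt32() {
        if (pos_ + sizeof(int32_t) > data_.size()) throw std::out_of_range("parcel underflow");
        int32_t v;
        std::memcpy(&v, data_.data() + pos_, sizeof(v));
        pos_ += sizeof(v);
        return v;
    }
    size_t dataSize() const { return data_.size(); }
private:
    std::vector<uint8_t> data_;
    size_t pos_ = 0;
};

// Mirrors MyParcelable: one int field, written and read back in the same order.
struct MyData {
    int32_t mData = 0;
    void writeToParcel(SimpleParcel& out) const { out.writeInt32(mData); }
    static MyData createFromParcel(SimpleParcel& in) {
        MyData d;
        d.mData = in.readInt32();
        return d;
    }
};
```

Reading past the end throws in this sketch. If the write and read orders disagree, the fields silently receive each other's values, which is why both sides must be kept in lockstep.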

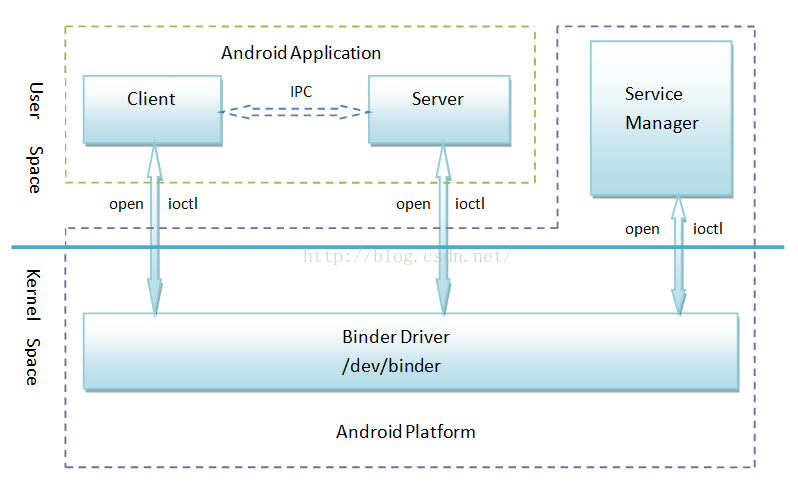

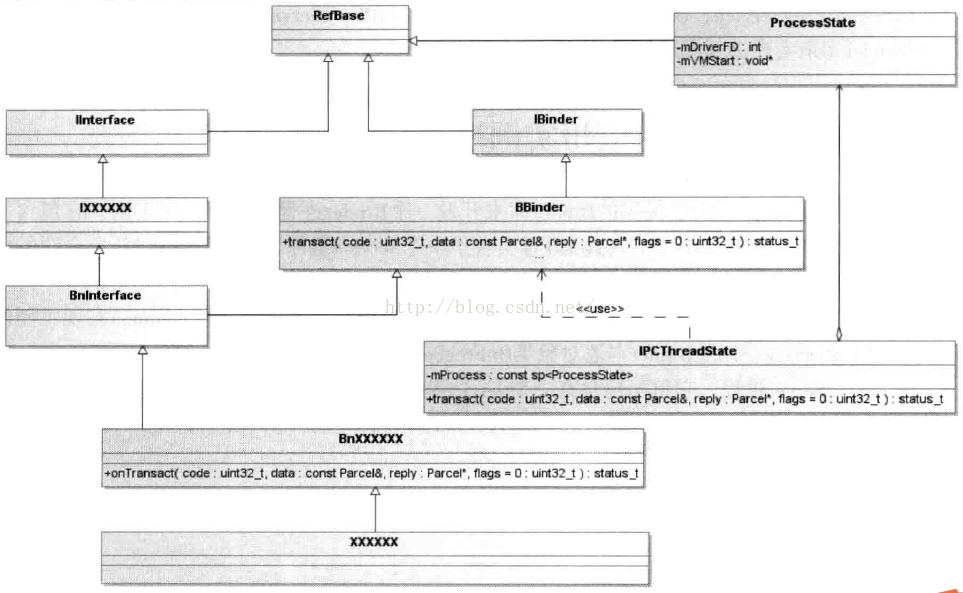

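class IInterface : public virtual RefBase

template<typename INTERFACE> // INTERFACE is a custom interface derived from IInterface
class BnInterface : public INTERFACE, public BBinder

template<typename INTERFACE> // INTERFACE is a custom interface derived from IInterface
class BpInterface : public INTERFACE, public BpRefBase

Here INTERFACE is the Service component interface defined by the process. BBinder provides the abstract IPC interface for Binder local objects, and BpRefBase provides the abstract IPC interface for Binder proxy objects. BnInterface is the Binder local object: it is used on the Service side and corresponds to a Binder entity (node) object in the Binder driver. BpInterface is the Binder proxy object: it is used on the Client side and corresponds to a Binder reference object in the driver. To implement a service, the user writes a BnXXXX class that implements BnInterface.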

class BBinder : public IBinder{

public: ·······

virtual status_t transact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0);

// IPCThreadState calls this method on the BBinder subclass; internally it calls BnXXXX's implementation of onTransact.

protected: ·······

virtual status_t onTransact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0);

// Defined by BnXXXX when it implements BnInterface.

};

class BpRefBase : public virtual RefBase {

protected: ·······

BpRefBase(const sp<IBinder>& o);

inline IBinder* remote(){ return mRemote; }

private: ······

BpRefBase(const BpRefBase& o);

IBinder* const mRemote; //note1

};

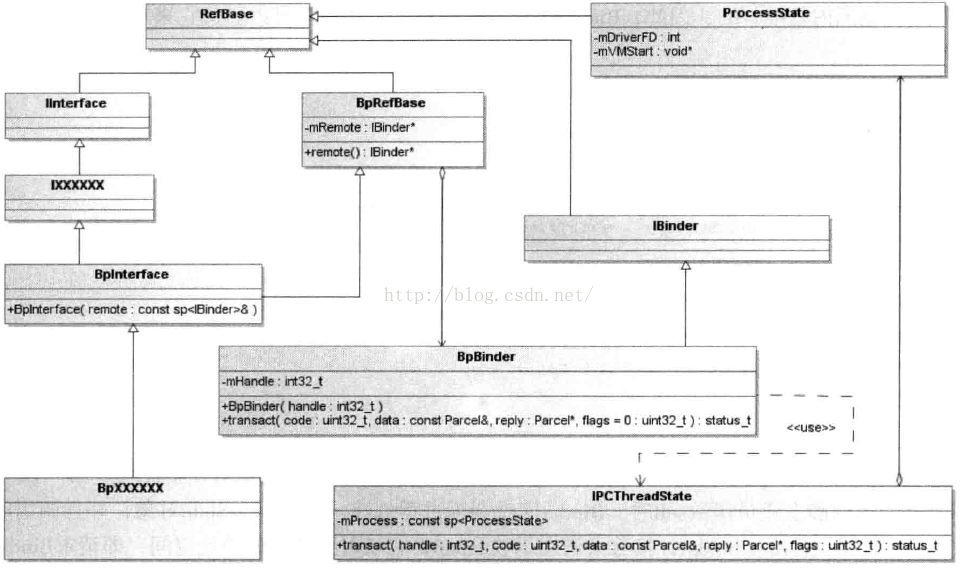

1. Points to a BpBinder object.
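The split between BBinder (the local side) and BpRefBase (the proxy side) can be modelled inside a single process. This is a simplified sketch, not libbinder. IBinder, BBinder, BpRefBase and Parcel here are toy stand-ins, and ICalc, BnCalc, BpCalc, CalcService and the ADD code are hypothetical. As in the classes above, transact() forwards to the virtual onTransact(), and the proxy only holds mRemote. The one difference is that mRemote here points straight at the local object, rather than at a BpBinder that talks to the driver.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using Parcel = std::vector<int32_t>;  // toy parcel: a list of ints

struct IBinder {
    virtual ~IBinder() = default;
    virtual int transact(uint32_t code, const Parcel& data, Parcel* reply) = 0;
};

// Local object: transact() dispatches to the subclass's onTransact(), like BBinder.
struct BBinder : IBinder {
    int transact(uint32_t code, const Parcel& data, Parcel* reply) override {
        return onTransact(code, data, reply);
    }
protected:
    virtual int onTransact(uint32_t code, const Parcel& data, Parcel* reply) = 0;
};

// Proxy base: only holds the remote IBinder, like BpRefBase::mRemote.
struct BpRefBase {
    explicit BpRefBase(IBinder* o) : mRemote(o) {}
    IBinder* remote() const { return mRemote; }
private:
    IBinder* const mRemote;
};

// The service interface (the INTERFACE template argument).
struct ICalc {
    virtual ~ICalc() = default;
    virtual int32_t add(int32_t a, int32_t b) = 0;
    static constexpr uint32_t ADD = 1;  // hypothetical transaction code
};

// Server side (BnXXXX): unmarshals the request and calls the real implementation.
struct BnCalc : ICalc, BBinder {
protected:
    int onTransact(uint32_t code, const Parcel& data, Parcel* reply) override {
        if (code != ADD || data.size() != 2) return -1;
        reply->push_back(add(data[0], data[1]));
        return 0;
    }
};

struct CalcService : BnCalc {
    int32_t add(int32_t a, int32_t b) override { return a + b; }
};

// Client side (BpXXXX): marshals the call and sends it through remote()->transact().
struct BpCalc : ICalc, BpRefBase {
    explicit BpCalc(IBinder* remote) : BpRefBase(remote) {}
    int32_t add(int32_t a, int32_t b) override {
        Parcel data{a, b}, reply;
        if (remote()->transact(ADD, data, &reply) != 0 || reply.empty()) return 0;
        return reply[0];
    }
};
```

Both BnCalc and BpCalc implement the same ICalc interface. The caller therefore cannot tell whether add() ran locally or was marshalled through transact().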

BpBinder@{ binder / BpBinder.h}

class BpBinder : public IBinder {

public: ·········

inline int32_t handle() const { return mHandle; }

virtual status_t transact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0); //note2

private: ·······

const int32_t mHandle; //note1

};

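1. This integer is the handle value of a Client component. Each Client component corresponds to a Binder reference object in the driver.

2. BpBinder's transact method sends its own mHandle together with the IPC data to the Binder driver. The driver uses mHandle to find the corresponding Binder reference object, and from it the Binder entity (node) object; in other words, the driver layer performs the mapping from BpBinder to BBinder. Finally the driver delivers the data to the Service component. (For how a BpBinder is mapped to its BBinder, the author recommends a separate blog post; search for the keyword sp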
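The handle-to-entity lookup in note 2 can be pictured as a per-process table. This is a toy model under stated assumptions. Node, Ref, Proc and route() are hypothetical names, and std::map replaces the lookup trees the real driver keeps in each binder_proc. Only the mapping itself is shown: client handle → reference → entity → owning process and BBinder address.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <string>

// A Binder entity (node): owned by the Service process, identifies the BBinder.
struct Node {
    std::string ownerProcess;
    uintptr_t cookie;  // address of the BBinder inside the owner process
};

// A Binder reference: owned by a Client process, points at a node.
struct Ref {
    std::shared_ptr<Node> node;
};

// Per-process handle table, as seen by the driver.
struct Proc {
    std::map<int32_t, Ref> refsByHandle;
    int32_t nextHandle = 1;  // handle 0 is reserved for the context manager
    int32_t addRef(const std::shared_ptr<Node>& n) {
        const int32_t h = nextHandle++;
        refsByHandle[h] = Ref{n};
        return h;
    }
};

// Resolve (client process, handle) to the target node, or nullptr if the handle is unknown.
const Node* route(const Proc& client, int32_t handle) {
    auto it = client.refsByHandle.find(handle);
    return it == client.refsByHandle.end() ? nullptr : it->second.node.get();
}
```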

class IPCThreadState {

public: ········

static IPCThreadState* self();

// Called on the current (Binder) thread to obtain that thread's IPCThreadState object, which can communicate with the Binder driver.

status_t transact(int32_t handle, uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags);

// Interacts with the Binder driver; implemented on top of talkWithDriver.

private:············

status_t sendReply(const Parcel& reply, uint32_t flags);

status_t waitForResponse(Parcel *reply, status_t *acquireResult=NULL);

status_t talkWithDriver(bool doReceive=true); // sends data to the Binder driver and also receives data from it

status_t writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle,

uint32_t code,

const Parcel& data,

status_t* statusBuffer);

status_t executeCommand(int32_t command);

const sp<ProcessState> mProcess; // the process-wide ProcessState, which initializes the Binder device

};

Every thread in the Binder thread pool has its own IPCThreadState object.
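IPCThreadState::self() returns a per-thread instance, which libbinder keeps in pthread thread-specific storage. The same per-thread-singleton idea can be sketched with C++11 thread_local. ThreadState, its mOut vector and sharedWithNewThread() are hypothetical simplifications, not libbinder code.

```cpp
#include <cassert>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical per-thread IPC state: each thread buffers its own outgoing commands.
class ThreadState {
public:
    static ThreadState* self() {
        thread_local ThreadState instance;  // created lazily, once per thread
        return &instance;
    }
    std::vector<uint32_t> mOut;  // stand-in for IPCThreadState's output Parcel
private:
    ThreadState() = default;
};

// Returns true if a freshly started thread sees the same ThreadState as the caller.
bool sharedWithNewThread() {
    ThreadState* mine = ThreadState::self();
    bool same = true;
    std::thread t([&] { same = (ThreadState::self() == mine); });
    t.join();
    return same;
}
```

Because each thread owns its buffers, commands queued by one Binder thread never mix with another thread's, so no locking is needed on mOut.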

Service component structure diagram

Client component structure diagram

From the analysis above, we can draw the following conclusions:

void *svcmgr_handle; // a global variable in service_manager.c

int main(int argc, char **argv)

{

struct binder_state *bs; //note 1

void *svcmgr = BINDER_SERVICE_MANAGER; //note2

bs = binder_open(128*1024); //note3

if (binder_become_context_manager(bs)) { //note4

return -1;

}

svcmgr_handle = svcmgr; //note5

binder_loop(bs, svcmgr_handler); //note6

return 0;

}

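1. The binder_state structure:

struct binder_state {

int fd; // device file descriptor

void *mapped; // start address of the mapped region

unsigned mapsize; // size of the mapped region

// The device file is mapped into the process's address space to buffer IPC data.

};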

struct binder_state *binder_open(unsigned mapsize)

{

struct binder_state *bs;

bs = malloc(sizeof(*bs));

if (!bs) { errno = ENOMEM; return 0; }

bs->fd = open("/dev/binder", O_RDWR); //note1

if (bs->fd < 0) { fprintf(stderr,"binder: cannot open device (%s)\n",strerror(errno)); goto fail_open; }

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0); //note2

if (bs->mapped == MAP_FAILED) {fprintf(stderr,"binder: cannot map device (%s)\n", strerror(errno)); goto fail_map;}

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return 0;

}

1. This call eventually causes the Binder driver's binder_open function to be invoked.

binder_become_context_manager()@{ servicemanager / binder.c}

#define BINDER_SET_CONTEXT_MGR _IOW('b', 7, int)

int binder_become_context_manager(struct binder_state *bs)

{

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0); //note1

}

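1. This call causes the Binder driver's binder_ioctl function to be invoked. Because binder_ioctl is used many times, it is reproduced in Appendix 1 at the end.

By sending the BINDER_SET_CONTEXT_MGR command to the Binder driver, Service Manager registers itself with the driver as the context manager. The last ioctl argument is the address of the local Binder object corresponding to Service Manager; for Service Manager this value is 0.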
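BINDER_SET_CONTEXT_MGR and BINDER_WRITE_READ are ordinary Linux ioctl request numbers. They are built by the _IO/_IOW/_IOWR macros, which pack a direction, the argument size, a type character and a sequence number into one integer. The sketch below rebuilds and decodes them. It assumes the generic Linux encoding used on x86 and ARM; a few architectures, such as MIPS and PowerPC, lay the bits out differently. It also assumes the 64-bit layout of binder_write_read (six 64-bit fields). That layout is copied into a local struct so the example does not depend on the binder header.

```cpp
#include <cassert>
#include <cstdint>
#include <linux/ioctl.h>  // _IO, _IOW, _IOWR and the _IOC_* decoders

// Local copy of the 64-bit binder_write_read layout (6 x 64-bit fields).
struct binder_write_read_64 {
    uint64_t write_size, write_consumed, write_buffer;
    uint64_t read_size, read_consumed, read_buffer;
};

constexpr unsigned long kSetContextMgr = _IOW('b', 7, int);                            // BINDER_SET_CONTEXT_MGR
constexpr unsigned long kWriteRead = _IOWR('b', 1, struct binder_write_read_64);       // BINDER_WRITE_READ
constexpr unsigned long kEnterLooper = _IO('c', 12);                                   // BC_ENTER_LOOPER
```

With the 64-bit layout this gives 0xc0306201 for BINDER_WRITE_READ. With a smaller 32-bit struct the size field, and therefore the whole number, differs.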

binder_loop()@{ servicemanager / binder.c}

BC_ENTER_LOOPER = _IO('c', 12),

#define BINDER_WRITE_READ _IOWR('b', 1, struct binder_write_read)

void binder_loop(struct binder_state *bs, binder_handler func){

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));//note1

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); //note2

if (res < 0) { LOGE("binder_loop: ioctl failed (%s)\n", strerror(errno)); break; }

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func); //note3

if (res == 0) { LOGE("binder_loop: unexpected reply?!\n"); break;}

if (res < 0) { LOGE("binder_loop: io error %d %s\n", res, strerror(errno)); break; }

}

}

1. This call registers the current thread with the Binder driver as a Binder thread; the driver can then deliver IPC requests to this thread for processing.

int binder_write(struct binder_state *bs, void *data, unsigned len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (unsigned) data;

bwr.read_size = 0; // the read (output) buffer is empty, so the call returns to user space as soon as registration completes

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); //note1

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n", strerror(errno));

}

return res;

}

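1. Sends a BINDER_WRITE_READ command to the Binder driver.

2. (binder_loop) Here the bwr argument's read (output) buffer length is the size of readbuf and its write (input) buffer length is 0, so the driver only calls binder_thread_read. If the ServiceManager process's main thread has no pending work items, it sleeps in the driver's binder_thread_read, waiting for other Services and Clients to send it IPC requests.

3. (binder_loop) Parses the data received in readbuf and processes it with the binder_handler func.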

binder_parse()@{ servicemanager / binder.c}

int binder_parse(struct binder_state *bs, struct binder_io *bio, uintptr_t ptr, size_t size, binder_handler func) {

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

case BR_NOOP:

break;

case BR_TRANSACTION_COMPLETE:

break;

case BR_INCREFS:

case BR_ACQUIRE:

case BR_RELEASE:

case BR_DECREFS:

ptr += sizeof(struct binder_ptr_cookie);

break;

case BR_TRANSACTION: { //note1

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) { return -1; }

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply); //note2

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res); //note3

}

ptr += sizeof(*txn);

break;

}

case BR_REPLY: {

......

break;

}

case BR_DEAD_BINDER: {

........

break;

}

case BR_FAILED_REPLY:

r = -1;

break;

case BR_DEAD_REPLY:

r = -1;

break;

default:

return -1;

}

}

return r;

}

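1. Receives a BR_TRANSACTION command of the IPCThreadState-layer protocol.

2. Calls func to handle it; func is the svcmgr_handler function in servicemanager / service_manager.c.

3. Sends a BC_REPLY command to the Binder driver, preceded in the same write by BC_FREE_BUFFER, which releases the transaction buffer.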
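binder_parse walks a flat buffer in which each 32-bit command code is followed by a payload whose size depends on the command. It advances ptr past each payload and stops on an unknown code. The sketch below shows that loop. The command codes, Txn and parseCommands() are hypothetical placeholders (the real BR_* codes are ioctl-style encodings), and PtrCookie only mirrors the shape of binder_ptr_cookie.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes; the real BR_* values are different.
enum : uint32_t { CMD_NOOP = 1, CMD_INCREFS = 2, CMD_TRANSACTION = 3 };

struct Txn { uint32_t code; uint32_t arg; };         // toy transaction payload
struct PtrCookie { uint64_t ptr; uint64_t cookie; };  // same shape as binder_ptr_cookie

// Returns the number of transactions handled, or -1 on a malformed buffer.
int parseCommands(const uint8_t* ptr, size_t size, std::vector<Txn>& handled) {
    const uint8_t* end = ptr + size;
    int count = 0;
    while (ptr < end) {
        if (static_cast<size_t>(end - ptr) < sizeof(uint32_t)) return -1;
        uint32_t cmd;
        std::memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);
        switch (cmd) {
        case CMD_NOOP:
            break;                                    // no payload
        case CMD_INCREFS:
            if (static_cast<size_t>(end - ptr) < sizeof(PtrCookie)) return -1;
            ptr += sizeof(PtrCookie);                 // skip the payload
            break;
        case CMD_TRANSACTION: {
            Txn txn;
            if (static_cast<size_t>(end - ptr) < sizeof(txn)) return -1;
            std::memcpy(&txn, ptr, sizeof(txn));
            handled.push_back(txn);                   // binder_parse would call func here
            ptr += sizeof(txn);
            ++count;
            break;
        }
        default:
            return -1;                                // unknown command: stop parsing
        }
    }
    return count;
}
```

Unlike the code above, the sketch also bounds-checks the fixed-size payloads before skipping them.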

svcmgr_handler()@{ servicemanager / service_manager.c}

int svcmgr_handler(struct binder_state *bs, struct binder_txn *txn, struct binder_io *msg, struct binder_io *reply) {

struct svcinfo *si;

uint16_t *s;

unsigned len;

void *ptr;

uint32_t strict_policy;

if (txn->target != svcmgr_handle) return -1;

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s));

return -1;

}

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

ptr = do_find_service(bs, s, len); // look up the service in the list

if (!ptr) break;

bio_put_ref(reply, ptr); // bio_put_ref is defined in servicemanager / binder.c; ptr is the handle, which is written into reply

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

ptr = bio_get_ref(msg);

if (do_add_service(bs, s, len, ptr, txn->sender_euid)) return -1;

break;

case SVC_MGR_LIST_SERVICES: {

unsigned n = bio_get_uint32(msg);

si = svclist;

while ((n-- > 0) && si)

si = si->next;

if (si) {

bio_put_string16(reply, si->name);

return 0;

}

return -1;

}

default: return -1;

}

bio_put_uint32(reply, 0);

return 0;

}
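The three request types svcmgr_handler serves amount to a small name-to-handle registry. The sketch uses hypothetical names (Registry, addService, findService, listService). The real service_manager differs in three ways: it keeps a linked list of svcinfo records (svclist), it stores names as UTF-16 (hence std::u16string here), and do_add_service applies checks that are omitted below.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Hypothetical model of service_manager's service list: name -> handle.
class Registry {
public:
    // SVC_MGR_ADD_SERVICE: rejects an empty name; in this sketch re-registration replaces the handle.
    bool addService(const std::u16string& name, uint32_t handle) {
        if (name.empty()) return false;
        for (auto& e : list_) {
            if (e.name == name) { e.handle = handle; return true; }
        }
        list_.push_back({name, handle});
        return true;
    }
    // SVC_MGR_GET_SERVICE / SVC_MGR_CHECK_SERVICE
    std::optional<uint32_t> findService(const std::u16string& name) const {
        for (const auto& e : list_)
            if (e.name == name) return e.handle;
        return std::nullopt;
    }
    // SVC_MGR_LIST_SERVICES: the n-th registered name, if any.
    std::optional<std::u16string> listService(size_t n) const {
        if (n >= list_.size()) return std::nullopt;
        return list_[n].name;
    }
private:
    struct Entry { std::u16string name; uint32_t handle; };
    std::vector<Entry> list_;
};
```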

binder_send_reply()@{ servicemanager / binder.c}

void binder_send_reply(struct binder_state *bs, struct binder_io *reply, binder_uintptr_t buffer_to_free, int status) {

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data)); // binder_write was already covered under binder_loop()

}

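One more difference between ServiceManager and an ordinary service deserves special attention. For an ordinary XXService, a Client obtains a BpXXService and calls one of its methods; through the Binder mechanism the data is eventually handled by the method of the same name in BnXXService. For ServiceManager, however, a Client obtains a BpServiceManager and calls one of its methods, such as getService, but the data is eventually handled by svcmgr_handler()@{ servicemanager / service_manager.c}, not by BnServiceManager! See the referenced link for details.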

ServiceManager registration:

The Binder library defines a global variable, gDefaultServiceManager, implemented as a singleton; defaultServiceManager() returns this global. The first time it is requested, a ServiceManager proxy is obtained as follows:

sp<IServiceManager> defaultServiceManager()
{
    if (gDefaultServiceManager != NULL) return gDefaultServiceManager;
    {
        AutoMutex _l(gDefaultServiceManagerLock);
        if (gDefaultServiceManager == NULL) {
            gDefaultServiceManager = interface_cast<IServiceManager>(
                ProcessState::self()->getContextObject(NULL));
        }
    }
    return gDefaultServiceManager;
}
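The function above is a double-checked lazy singleton. In standard C++, the first unlocked read of a plain pointer races with the write made under the lock. A portable sketch of the same pattern therefore uses std::atomic with acquire/release ordering; std::call_once or a function-local static would also work. ServiceManagerProxy and createProxy() are hypothetical stand-ins for the interface_cast<IServiceManager>(...getContextObject(NULL)) call.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>

struct ServiceManagerProxy { int handle = 0; };  // hypothetical proxy for handle 0

static std::atomic<ServiceManagerProxy*> gDefault{nullptr};
static std::mutex gDefaultLock;
static std::atomic<int> gCreateCount{0};

// Hypothetical stand-in for interface_cast<IServiceManager>(getContextObject(NULL)).
static ServiceManagerProxy* createProxy() {
    ++gCreateCount;
    return new ServiceManagerProxy{};  // intentionally never freed, like a process-wide singleton
}

ServiceManagerProxy* defaultServiceManager() {
    ServiceManagerProxy* sm = gDefault.load(std::memory_order_acquire);
    if (sm != nullptr) return sm;                   // fast path: already created
    std::lock_guard<std::mutex> lock(gDefaultLock);
    sm = gDefault.load(std::memory_order_relaxed);  // re-check under the lock
    if (sm == nullptr) {
        sm = createProxy();
        gDefault.store(sm, std::memory_order_release);
    }
    return sm;
}
```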

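ProcessState::getContextObject() obtains this proxy by calling getStrongProxyForHandle(0), since handle 0 always refers to Service Manager. The main steps of getStrongProxyForHandle (whose parameter is int32_t handle) are:

sp<IBinder> result;
handle_entry* e = lookupHandleLocked(handle);
if (e != NULL) {
    IBinder* b = e->binder;
    if (b == NULL || !e->refs->attemptIncWeak(this)) {
        // Create a BpBinder. Its constructor calls IPCThreadState::self()->incWeakHandle(handle),
        // which creates the IPCThreadState object for the current thread.
        b = new BpBinder(handle);
        e->binder = b;
        if (b) e->refs = b->getWeakRefs();
        result = b;
    } else {
        result.force_set(b);
        e->refs->decWeak(this);
    }
}
return result;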
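getStrongProxyForHandle caches one proxy per handle. It creates a new BpBinder only when there is none, or when the cached one can no longer be revived. The sketch below models that caching with std::weak_ptr, whose lock() loosely plays the role of attemptIncWeak plus force_set. Proxy, HandleTable and proxyFor() are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

struct Proxy {                    // hypothetical stand-in for BpBinder
    explicit Proxy(int32_t h) : handle(h) {}
    int32_t handle;
};

class HandleTable {
public:
    std::shared_ptr<Proxy> proxyFor(int32_t handle) {
        if (handle < 0) return nullptr;
        if (static_cast<size_t>(handle) >= entries_.size())
            entries_.resize(handle + 1);                   // grow the table on demand
        std::weak_ptr<Proxy>& slot = entries_[handle];
        if (auto existing = slot.lock()) return existing;  // reuse the live proxy
        auto fresh = std::make_shared<Proxy>(handle);      // none alive: create a new one
        slot = fresh;
        ++created;
        return fresh;
    }
    int created = 0;  // how many proxies were constructed (for illustration)
private:
    std::vector<std::weak_ptr<Proxy>> entries_;
};
```

Holding only weak references in the table means the cache never keeps a proxy alive by itself. Once every client drops its strong reference, the next lookup builds a fresh one.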

virtual status_t addService(const String16& name, const sp<IBinder>& service)
{
    Parcel data, reply;
    data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
    data.writeString16(name);
    data.writeStrongBinder(service);
    status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
    return err == NO_ERROR ? reply.readExceptionCode() : err;
}

As introduced earlier, remote() returns a BpBinder object. Calling its transact method sends the data, together with the handle of this BpBinder, to the Binder driver for processing. Here the data is packaged as an ADD_SERVICE_TRANSACTION request and handed to the Binder layer.

status_t BpBinder::transact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags) // flags: 0 (the default) means a synchronous IPC request; setting TF_ONE_WAY makes it asynchronous

{

if (mAlive) {

status_t status = IPCThreadState::self()->transact( mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

The Binder-layer data is then wrapped into an IPCThreadState-layer BC_TRANSACTION command and handed to the IPCThreadState layer by calling the IPCThreadState object's transact method.

IPCThreadState::transact()@{ binder / IPCThreadState.cpp}

status_t IPCThreadState::transact(int32_t handle, uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags) {

status_t err = data.errorCheck(); // check that the data is valid

flags |= TF_ACCEPT_FDS; // set the TF_ACCEPT_FDS flag bit

if (err == NO_ERROR) {

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL); //note1

}

if ((flags & TF_ONE_WAY) == 0) { // synchronous request

if (reply) { err = waitForResponse(reply); }

else {

Parcel fakeReply;

err = waitForResponse(&fakeReply); // wait for the Service's reply data

}

}

else { err = waitForResponse(NULL, NULL); } // one-way: do not wait for the Service's reply data

return err;

}

1. Writes the BC_TRANSACTION command data into a binder_transaction_data structure, then writes that structure into IPCThreadState's Parcel output buffer (mOut).

IPCThreadState::waitForResponse()@{ binder / IPCThreadState.cpp}

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult){

int32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break; //note1

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = mIn.readInt32(); //note2

switch (cmd) {

case BR_TRANSACTION_COMPLETE: //note3

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

·······

goto finish;

case BR_REPLY: { //note4

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

if (err != NO_ERROR) goto finish;

if (reply) { // if reply is non-null, fill it in

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(size_t),

freeBuffer, this);

} .....

}

} else {

......

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}

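1. Interacts with the Binder driver, passing it the data that IPCThreadState::transact() wrote into the Parcel output buffer.

2. Reads the command returned by the Binder driver.

3. On a BR_TRANSACTION_COMPLETE command from the Binder driver: if (!reply && !acquireResult) is true, the method exits; otherwise it keeps waiting for the next command.

4. On a BR_REPLY command from the Binder driver, the data carried in the command is handed to Parcel *reply, from which the caller can then read it.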
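The exit conditions described in notes 3 and 4 can be isolated into a small state machine. The sketch consumes a scripted queue of driver commands instead of calling talkWithDriver(). Br, Result and waitFor() are hypothetical simplifications. Only the BR_TRANSACTION_COMPLETE, BR_REPLY, BR_DEAD_REPLY and BR_FAILED_REPLY branches are kept. expectReply stands for a non-null reply, and acquireResult is ignored.

```cpp
#include <cassert>
#include <deque>

enum class Br { TransactionComplete, Reply, DeadReply, FailedReply, Noop };
enum class Result { Ok, DeadObject, FailedTransaction, NoMoreData };

// expectReply mirrors "reply != NULL"; a one-way call passes false.
Result waitFor(std::deque<Br>& fromDriver, bool expectReply) {
    while (!fromDriver.empty()) {
        Br cmd = fromDriver.front();
        fromDriver.pop_front();
        switch (cmd) {
        case Br::TransactionComplete:
            if (!expectReply) return Result::Ok;  // one-way: done once the driver accepted it
            break;                                // synchronous: keep waiting for BR_REPLY
        case Br::Reply:
            return Result::Ok;                    // the reply data would be handed to *reply here
        case Br::DeadReply:
            return Result::DeadObject;
        case Br::FailedReply:
            return Result::FailedTransaction;
        case Br::Noop:
            break;                                // other commands would go to executeCommand()
        }
    }
    return Result::NoMoreData;                    // the real loop would call talkWithDriver() again
}
```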

IPCThreadState::talkWithDriver()@{ binder / IPCThreadState.cpp}

status_t IPCThreadState::talkWithDriver(bool doReceive){

binder_write_read bwr;

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (long unsigned int)mOut.data(); // data about to be written to the Binder driver

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (long unsigned int)mIn.data(); // receives the data returned by the Binder driver

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0) err = NO_ERROR; else err = -errno; //note1

....

}

1. Packages the data into a Binder-driver-layer BINDER_WRITE_READ command and hands it to the Binder driver, transferring it with ioctl. The mDriverFD of IPCThreadState's mProcess member is the file descriptor of /dev/binder.
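The needRead and outAvail lines decide what the ioctl actually does. It writes only when the caller is not receiving or has drained mIn, and it reads only when mIn has been fully consumed. Here is the same decision as a pure function. IoPlan and planTalk() are hypothetical, and the parameters stand in for mIn's position, size and capacity and for mOut's size.

```cpp
#include <cassert>
#include <cstddef>

struct IoPlan { size_t writeSize; size_t readSize; };

// Mirrors talkWithDriver(): read only when mIn is fully consumed; write when not
// receiving at all, or when a read is about to happen anyway.
IoPlan planTalk(bool doReceive, size_t inPos, size_t inSize, size_t inCapacity, size_t outSize) {
    const bool needRead = inPos >= inSize;
    const size_t outAvail = (!doReceive || needRead) ? outSize : 0;
    const size_t readSize = (doReceive && needRead) ? inCapacity : 0;
    return {outAvail, readSize};
}
```

The middle case is the interesting one. While unread replies are still sitting in mIn, the call writes and reads nothing, so pending input is always consumed before new output goes out.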

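In summary, addService first packages its parameters (String16& name and sp<IBinder>& service) into a Binder-layer ADD_SERVICE_TRANSACTION request. The data is then wrapped into an IPCThreadState-layer BC_TRANSACTION command, and finally into a Binder-driver-layer BINDER_WRITE_READ command that is handed to the Binder driver.

getService()@{ binder / IServiceManager.cpp}

virtual sp<IBinder> getService(const String16& name) const
{
    unsigned n;
    for (n = 0; n < 5; n++) {
        sp<IBinder> svc = checkService(name);
        if (svc != NULL) return svc;
        sleep(1);
    }
    return NULL;
}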

Like addService, getService (through checkService) first wraps its parameter String16& name into a Binder-layer CHECK_SERVICE_TRANSACTION request and passes it to the Binder layer. The data is then wrapped into an IPCThreadState-layer BC_TRANSACTION command, and finally into a Binder-driver-layer BINDER_WRITE_READ command that is handed to the Binder driver.

checkService()@{ binder / IServiceManager.cpp}

virtual sp<IBinder> checkService(const String16& name) const
{
    Parcel data, reply;
    data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
    data.writeString16(name);
    remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);
    return reply.readStrongBinder();
}
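getService() simply polls checkService() up to five times and sleeps one second after each miss. The sketch below reproduces that retry loop. The lookup and the pause are injected as parameters so it can run without a real Service Manager. getWithRetry() is hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <optional>
#include <string>

// Polls `check` up to `attempts` times, calling `pause` after every failed attempt.
std::optional<int> getWithRetry(const std::string& name,
                                const std::function<std::optional<int>(const std::string&)>& check,
                                const std::function<void()>& pause,
                                unsigned attempts = 5) {
    for (unsigned n = 0; n < attempts; ++n) {
        if (auto svc = check(name)) return svc;
        pause();  // getService() calls sleep(1) here
    }
    return std::nullopt;
}
```

In a real process the pause would be something like [] { std::this_thread::sleep_for(std::chrono::seconds(1)); }, matching the sleep(1) above. A caller therefore waits at most about five seconds for a service that is still starting up.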

int main(int argc, char** argv){

// obtain the ProcessState instance (introduced earlier)

sp<ProcessState> proc(ProcessState::self());

// obtain the ServiceManager proxy (introduced earlier)

sp<IServiceManager> sm = defaultServiceManager();

MediaPlayerService::instantiate();//note1

ProcessState::self()->startThreadPool();//note2

IPCThreadState::self()->joinThreadPool();//note3

}

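1. Instantiates MediaPlayerService. Internally this calls defaultServiceManager()->addService(String16("media.player"), new MediaPlayerService()). service_manager receives the addService request and stores the corresponding information in the service list it maintains. See the addService discussion above for details.

2. Starts a Binder thread pool.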

void ProcessState::startThreadPool() {

AutoMutex _l(mLock);

if (!mThreadPoolStarted) {

mThreadPoolStarted = true;

spawnPooledThread(true);

}

}

spawnPooledThread()@{ binder / ProcessState.cpp}

void ProcessState::spawnPooledThread(bool isMain) {

if (mThreadPoolStarted) {

int32_t s = android_atomic_add(1, &mThreadPoolSeq);

char buf[32];

sprintf(buf, "Binder Thread #%d", s);

sp<Thread> t = new PoolThread(isMain); // create a PoolThread object

t->run(buf); // start the thread, named buf

}

}
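spawnPooledThread numbers its threads with an atomic counter, so concurrently spawned Binder threads still get distinct names. The sketch below covers just the naming step. PoolNamer is hypothetical, and starting the counter at 1 is an assumption of the sketch. It uses snprintf instead of sprintf to bound the write into the 32-byte buffer.

```cpp
#include <atomic>
#include <cassert>
#include <cstdio>
#include <string>

class PoolNamer {
public:
    // Like android_atomic_add(1, &mThreadPoolSeq), fetch_add returns the value before the increment.
    std::string nextName() {
        const int s = seq_.fetch_add(1);
        char buf[32];
        std::snprintf(buf, sizeof(buf), "Binder Thread #%d", s);
        return buf;
    }
private:
    std::atomic<int> seq_{1};  // assumed starting value
};
```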

joinThreadPool()@{ binder / IPCThreadState.cpp}

void IPCThreadState::joinThreadPool(bool isMain) { // the default argument is true

mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER); //note1

status_t result;

do {

int32_t cmd;

......

result = talkWithDriver(); //note2

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) continue;

cmd = mIn.readInt32();

result = executeCommand(cmd); // handle the command returned by the Binder driver

}

....

if(result == TIMED_OUT && !isMain) {

break;

}

} while (result != -ECONNREFUSED && result != -EBADF);

mOut.writeInt32(BC_EXIT_LOOPER);

talkWithDriver(false);

}

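1. Builds a BC_ENTER_LOOPER (or BC_REGISTER_LOOPER) command and writes it into the mOut Parcel output buffer. isMain == true means the thread enters the looper on its own initiative. Otherwise it registers as a looper thread, as threads spawned on a BR_SPAWN_LOOPER request do (see executeCommand below).

2. Passes the data in the mOut buffer to the Binder driver. As shown above, talkWithDriver wraps it in a Binder-driver-layer BINDER_WRITE_READ command for the driver to handle.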
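The overall shape of joinThreadPool can be sketched against a scripted sequence of status codes. The thread announces the looper, loops until the driver connection fails (or, for a non-main thread, until a timeout), then announces BC_EXIT_LOOPER. Cmd, LooperTrace and runLooper() are hypothetical, and each scripted int stands for the status the loop body produces. TIMED_OUT is assumed to equal -ETIMEDOUT, as in Android's utils/Errors.h.

```cpp
#include <cassert>
#include <cerrno>
#include <deque>
#include <vector>

enum Cmd { ENTER_LOOPER, REGISTER_LOOPER, EXIT_LOOPER };  // stand-ins for the BC_* commands
constexpr int TIMED_OUT_STATUS = -ETIMEDOUT;              // assumed value of TIMED_OUT

struct LooperTrace {
    std::vector<Cmd> written;  // commands the thread would send to the driver
    int iterations = 0;        // loop iterations executed
};

LooperTrace runLooper(bool isMain, std::deque<int> results) {
    LooperTrace t;
    t.written.push_back(isMain ? ENTER_LOOPER : REGISTER_LOOPER);
    int result = 0;
    do {
        if (results.empty()) break;                          // end of the script
        result = results.front();
        results.pop_front();
        ++t.iterations;
        if (result == TIMED_OUT_STATUS && !isMain) break;    // spare threads may retire
    } while (result != -ECONNREFUSED && result != -EBADF);   // fatal driver errors end every thread
    t.written.push_back(EXIT_LOOPER);
    return t;
}
```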

executeCommand()@{ binder / IPCThreadState.cpp}

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR;

switch (cmd) {

case BR_ERROR:

result = mIn.readInt32();

break;

case BR_OK:

break;

case BR_ACQUIRE:

refs = (RefBase::weakref_type*)mIn.readInt32();

obj = (BBinder*)mIn.readInt32();

obj->incStrong(mProcess.get());

mOut.writeInt32(BC_ACQUIRE_DONE);

mOut.writeInt32((int32_t)refs);

mOut.writeInt32((int32_t)obj);

break;

case BR_RELEASE:

refs = (RefBase::weakref_type*)mIn.readInt32();

obj = (BBinder*)mIn.readInt32();

mPendingStrongDerefs.push(obj);

break;

case BR_INCREFS:

refs = (RefBase::weakref_type*)mIn.readInt32();

obj = (BBinder*)mIn.readInt32();

refs->incWeak(mProcess.get());

mOut.writeInt32(BC_INCREFS_DONE);

mOut.writeInt32((int32_t)refs);

mOut.writeInt32((int32_t)obj);

break;

case BR_DECREFS:

refs = (RefBase::weakref_type*)mIn.readInt32();

obj = (BBinder*)mIn.readInt32();

mPendingWeakDerefs.push(refs);

break;

case BR_ATTEMPT_ACQUIRE:

refs = (RefBase::weakref_type*)mIn.readInt32();

obj = (BBinder*)mIn.readInt32(); {

const bool success = refs->attemptIncStrong(mProcess.get());

mOut.writeInt32(BC_ACQUIRE_RESULT);

mOut.writeInt32((int32_t)success);

}

break;

case BR_TRANSACTION:{

binder_transaction_data tr;

result = mIn.read(&tr, sizeof(tr));

if (result != NO_ERROR) break;

Parcel buffer;

buffer.ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(size_t), freeBuffer, this);

const pid_t origPid = mCallingPid;

const uid_t origUid = mCallingUid;

mCallingPid = tr.sender_pid;

mCallingUid = tr.sender_euid;

mOrigCallingUid = tr.sender_euid;

int curPrio = getpriority(PRIO_PROCESS, mMyThreadId);

if (gDisableBackgroundScheduling) {

if (curPrio > ANDROID_PRIORITY_NORMAL) {

setpriority(PRIO_PROCESS, mMyThreadId, ANDROID_PRIORITY_NORMAL);

}

} else {

if (curPrio >= ANDROID_PRIORITY_BACKGROUND) {

set_sched_policy(mMyThreadId, SP_BACKGROUND);

}

}

Parcel reply;

if (tr.target.ptr) {

sp<BBinder> b((BBinder*)tr.cookie);

const status_t error = b->transact(tr.code, buffer, &reply, tr.flags); // calls BBinder::transact, which in turn calls the concrete service's onTransact

if (error < NO_ERROR) reply.setError(error);

} else {

const status_t error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);

if (error < NO_ERROR) reply.setError(error);

}

if ((tr.flags & TF_ONE_WAY) == 0) {

sendReply(reply, 0); // send an IPCThreadState-layer BC_REPLY command to the Binder driver

} else { }

mCallingPid = origPid;

mCallingUid = origUid;

mOrigCallingUid = origUid;

}

break;

case BR_DEAD_BINDER:

{

BpBinder *proxy = (BpBinder*)mIn.readInt32();

proxy->sendObituary();

mOut.writeInt32(BC_DEAD_BINDER_DONE);

mOut.writeInt32((int32_t)proxy);

} break;

case BR_CLEAR_DEATH_NOTIFICATION_DONE:

{

BpBinder *proxy = (BpBinder*)mIn.readInt32();

proxy->getWeakRefs()->decWeak(proxy);

} break;

case BR_FINISHED:

result = TIMED_OUT;

break;

case BR_NOOP:

break;

case BR_SPAWN_LOOPER:

mProcess->spawnPooledThread(false);

break;

default:

result = UNKNOWN_ERROR;

break;

}

if (result != NO_ERROR) {

mLastError = result;

}

return result;

}
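In the BR_TRANSACTION branch, executeCommand installs the sender's pid and euid as the calling identity for the duration of the call. It restores the previous values afterwards. The sketch below models that save/restore as an RAII guard. CallingIdentity, gCalling and IdentityScope are hypothetical stand-ins for IPCThreadState's mCallingPid/mCallingUid fields.

```cpp
#include <cassert>
#include <sys/types.h>

struct CallingIdentity { pid_t pid; uid_t uid; };

// Per-thread identity, standing in for IPCThreadState's mCallingPid / mCallingUid.
thread_local CallingIdentity gCalling{0, 0};

// Installs the sender's identity for one transaction and restores the old one on scope exit.
class IdentityScope {
public:
    IdentityScope(pid_t senderPid, uid_t senderEuid) : saved_(gCalling) {
        gCalling = {senderPid, senderEuid};
    }
    ~IdentityScope() { gCalling = saved_; }
    IdentityScope(const IdentityScope&) = delete;
    IdentityScope& operator=(const IdentityScope&) = delete;
private:
    CallingIdentity saved_;
};
```

With a guard, the restore also happens on early returns. The hand-written origPid/origUid assignments above have to be repeated on every exit path to get the same effect.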

BBinder::transact()

status_t BBinder::transact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags) {

status_t err = onTransact(code, data, reply, flags); // simply calls its own onTransact()

return err;

}

sendReply()@{ binder / IPCThreadState.cpp}

status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)

{

status_t err;

status_t statusBuffer;

err = writeTransactionData(BC_REPLY, flags, -1, 0, reply, &statusBuffer);

if (err < NO_ERROR) return err;

return waitForResponse(NULL, NULL);

}

Service registration:

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { // copy the binder_write_read struct from user space

ret = -EFAULT;

goto err;

}

if (bwr.write_size > 0) { // if the write (input) buffer length is > 0, call binder_thread_write

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) { // if the read (output) buffer length is > 0, call binder_thread_read

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

}

case BINDER_SET_MAX_THREADS:

if (copy_from_user(&proc->max_threads, ubuf, sizeof(proc->max_threads))) {

ret = -EINVAL;

goto err;

}

break;

case BINDER_SET_CONTEXT_MGR:

if (binder_context_mgr_node != NULL) {

printk(KERN_ERR "binder: BINDER_SET_CONTEXT_MGR already set\n");

ret = -EBUSY;

goto err;

}

ret = security_binder_set_context_mgr(proc->tsk);

if (ret < 0)

goto err;

if (binder_context_mgr_uid != -1) {

if (binder_context_mgr_uid != current->cred->euid) {

printk(KERN_ERR "binder: BINDER_SET_"

"CONTEXT_MGR bad uid %d != %d\n",

current->cred->euid,

binder_context_mgr_uid);

ret = -EPERM;

goto err;

}

} else

binder_context_mgr_uid = current->cred->euid;

binder_context_mgr_node = binder_new_node(proc, NULL, NULL);

if (binder_context_mgr_node == NULL) {

ret = -ENOMEM;

goto err;

}

binder_context_mgr_node->local_weak_refs++;

binder_context_mgr_node->local_strong_refs++;

binder_context_mgr_node->has_strong_ref = 1;

binder_context_mgr_node->has_weak_ref = 1;

break;

case BINDER_THREAD_EXIT:

binder_debug(BINDER_DEBUG_THREADS, "binder: %d:%d exit\n",

proc->pid, thread->pid);

binder_free_thread(proc, thread);

thread = NULL;

break;

case BINDER_VERSION:

if (size != sizeof(struct binder_version)) {

ret = -EINVAL;

goto err;

}

if (put_user(BINDER_CURRENT_PROTOCOL_VERSION, &((struct binder_version *)ubuf)->protocol_version)) {

ret = -EINVAL;

goto err;

}

break;

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

binder_unlock(__func__);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

printk(KERN_INFO "binder: %d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

err_unlocked:

trace_binder_ioctl_done(ret);

return ret;

}
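The BINDER_WRITE_READ branch always handles the write buffer before the read buffer. It skips whichever buffer has size zero, and a write error skips the read entirely. That is why binder_write (read_size = 0) returns right after sending, while binder_loop (write_size = 0) only blocks in binder_thread_read. The user-space sketch below shows that ordering. WriteRead, Driver and the callbacks are hypothetical stand-ins for binder_write_read, binder_thread_write and binder_thread_read.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

struct WriteRead {  // simplified binder_write_read
    uint64_t write_size = 0, write_consumed = 0;
    uint64_t read_size = 0, read_consumed = 0;
};

struct Driver {
    std::function<int(WriteRead&)> threadWrite;  // stands in for binder_thread_write
    std::function<int(WriteRead&)> threadRead;   // stands in for binder_thread_read
    std::vector<std::string> log;                // which phases ran, in order

    int writeRead(WriteRead& bwr) {
        if (bwr.write_size > 0) {
            log.push_back("write");
            int ret = threadWrite(bwr);
            if (ret < 0) { bwr.read_consumed = 0; return ret; }  // the read is skipped on a write error
        }
        if (bwr.read_size > 0) {
            log.push_back("read");
            int ret = threadRead(bwr);
            if (ret < 0) return ret;
        }
        return 0;  // the real driver copies bwr back to user space here
    }
};
```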

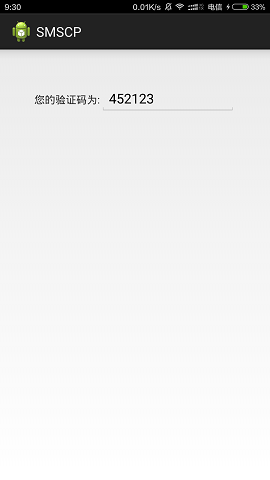
