
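Facial feature recognition on Android is very popular right now. During graduate school I worked on facial feature processing and used ASM (Active Shape Model) for a while to extract basic facial landmarks, so here I have implemented ASM on Android through JNI. This sample app also includes several other image processing examples.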

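Because the core ASM (Active Shape Model) algorithm is fairly complex, I won't introduce it here. I previously wrote a detailed explanation of the algorithm, including the formula derivations; interested readers can follow the link below: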

ASM (Active Shape Model) Algorithm Explained

Next, I'll describe how this app is implemented.

First, here is the project's source code:

Android ASM Demo

The project's README explains in detail how to configure the runtime environment; please read it carefully.

This project uses both Android JNI development and OpenCV4Android, so the configuration is fairly involved. For setting up Android JNI development, see: Android JNI; for using OpenCV on Android, see: Android Opencv

The app contains quite a lot of code, so to really understand how the project works, it's best to download it and read through it.

First, the native C++ code, which calls the C interface provided by stasm:

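#include <jni.h>

#include <android/log.h>

#include <ctime>

#include <vector>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/objdetect/objdetect.hpp>

#include "./stasm/stasm_lib.h"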

using namespace cv;

using namespace std;

CascadeClassifier cascade;

bool init = false;

const String APP_DIR = "/data/data/com.example.asm/app_data/";

extern "C" {

/*

* do Canny edge detect

*/

JNIEXPORT void JNICALL Java_com_example_asm_NativeCode_DoCanny(JNIEnv* env,

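jobject obj, jlong matSrc, jlong matDst, jdouble threshold1,

jdouble threshold2, jint aperatureSize) {

// typical values are 50 / 150 / 3; JNI always passes every argument, so no C++ defaults

Mat * img = (Mat *) matSrc;

Mat * dst = (Mat *) matDst;

cvtColor(*img, *dst, COLOR_BGR2GRAY);

// run Canny on the grayscale result; passing *img here would discard the conversion
Canny(*dst, *dst, threshold1, threshold2, aperatureSize);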

}

/*

* face detection

* matDst: face region

* scaleFactor = 1.1

* minNeighbors = 2

* minSize = 30 * 30

*/

JNIEXPORT void JNICALL Java_com_example_asm_NativeCode_FaceDetect(JNIEnv* env,

jobject obj, jlong matSrc, jlong matDst, jdouble scaleFactor, jint minNeighbors, jint minSize) {

Mat * src = (Mat *) matSrc;

Mat * dst = (Mat *) matDst;

float factor = 0.3;

Mat img;

resize(*src, img, Size((*src).cols * factor, (*src).rows * factor));

String cascadeFile = APP_DIR + "haarcascade_frontalface_alt2.xml";

if (!init) {

cascade.load(cascadeFile);

init = true;

}

if (cascade.empty() != true) {

vector<Rect> faces;

cascade.detectMultiScale(img, faces, scaleFactor, minNeighbors, 0

| CV_HAAR_FIND_BIGGEST_OBJECT

| CV_HAAR_DO_ROUGH_SEARCH

| CV_HAAR_SCALE_IMAGE, Size(minSize, minSize));

for (int i = 0; i < faces.size(); i++) {

Rect rect = faces[i];

rect.x /= factor;

rect.y /= factor;

rect.width /= factor;

rect.height /= factor;

if (i == 0) {

(*src)(rect).copyTo(*dst);

}

rectangle(*src, rect.tl(), rect.br(), Scalar(0, 255, 0, 255), 3);

}

}

}

/*

* do ASM

* error code:

* -1: illegal input Mat

* -2: ASM initialize error

* -3: no face detected

*/

JNIEXPORT jintArray JNICALL Java_com_example_asm_NativeCode_FindFaceLandmarks(

JNIEnv* env, jobject, jlong matAddr, jfloat ratioW, jfloat ratioH) {

const char * PATH = APP_DIR.c_str();

clock_t StartTime = clock();

jintArray arr = env->NewIntArray(2 * stasm_NLANDMARKS);

jint *out = env->GetIntArrayElements(arr, 0);

Mat img = *(Mat *) matAddr;

// validate the input before converting it: cvtColor() throws on an empty Mat
if (!img.data) {

out[0] = -1; // error code: -1(illegal input Mat)

out[1] = -1;

img.release();

env->ReleaseIntArrayElements(arr, out, 0);

return arr;

}

cvtColor(img, img, COLOR_BGR2GRAY);

int foundface;

float landmarks[2 * stasm_NLANDMARKS]; // x,y coords

if (!stasm_search_single(&foundface, landmarks, (const char*) img.data,

img.cols, img.rows, "", PATH)) {

out[0] = -2; // error code: -2(ASM initialize failed)

out[1] = -2;

img.release();

env->ReleaseIntArrayElements(arr, out, 0);

return arr;

}

if (!foundface) {

out[0] = -3; // error code: -3(no face found)

out[1] = -3;

img.release();

env->ReleaseIntArrayElements(arr, out, 0);

return arr;

} else {

for (int i = 0; i < stasm_NLANDMARKS; i++) {

out[2 * i] = cvRound(landmarks[2 * i] * ratioW);

out[2 * i + 1] = cvRound(landmarks[2 * i + 1] * ratioH);

}

}

double TotalAsmTime = double(clock() - StartTime) / CLOCKS_PER_SEC;

__android_log_print(ANDROID_LOG_INFO, "com.example.asm.native",

"running in native code, Stasm Ver:%s Img:%dx%d ---> Time:%.3f secs.", stasm_VERSION,

img.cols, img.rows, TotalAsmTime);

img.release();

env->ReleaseIntArrayElements(arr, out, 0);

return arr;

}

}
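FaceDetect runs the cascade on a copy shrunk by `factor = 0.3` and then divides the detected rectangle by the same factor to get back to full-resolution coordinates. The small Java sketch below isolates just that mapping step, outside OpenCV. `RectScaler` and `toFullRes` are hypothetical names, and the rounding and the clipping to the image bounds are additions that the native code above does not do.

```java
// Hypothetical helper: maps a face rectangle detected on a downscaled frame back to
// full-resolution coordinates, as the `rect.x /= factor` lines in FaceDetect do.
final class RectScaler {
    private RectScaler() {}

    /**
     * @param small  {x, y, width, height} measured on the downscaled image
     * @param factor downscale factor used before detection (0.3f in FaceDetect)
     * @param srcW   width of the full-resolution image
     * @param srcH   height of the full-resolution image
     * @return {x, y, width, height} on the full-resolution image, clipped to its bounds
     */
    static int[] toFullRes(int[] small, float factor, int srcW, int srcH) {
        if (factor <= 0f) {
            throw new IllegalArgumentException("factor must be positive");
        }
        // Rounding (the native code truncates) avoids off-by-one results caused by
        // float error: 30 / 0.3f evaluates to slightly less than 100.
        int x = Math.round(small[0] / factor);
        int y = Math.round(small[1] / factor);
        int w = Math.round(small[2] / factor);
        int h = Math.round(small[3] / factor);
        // Clip to the source image so the result is always a valid ROI
        // (in OpenCV C++ the equivalent is `rect &= Rect(0, 0, cols, rows)`).
        int x0 = Math.max(0, Math.min(x, srcW));
        int y0 = Math.max(0, Math.min(y, srcH));
        int x1 = Math.max(x0, Math.min(x + w, srcW));
        int y1 = Math.max(y0, Math.min(y + h, srcH));
        return new int[] {x0, y0, x1 - x0, y1 - y0};
    }
}
```

Shrinking the frame first makes the cascade scan far fewer pixels; the price is that faces smaller than roughly `minSize / factor` pixels in the original frame can no longer be found.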

The stasm code is fairly long, so I won't list it here. Instead, here is Android.mk, the makefile for the JNI code on Android:

LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

OPENCV_CAMERA_MODULES:=off

OPENCV_INSTALL_MODULES:=on

OPENCV_LIB_TYPE:=STATIC

ifeq ($(wildcard $(OPENCV_MK_PATH)),)

#try to load OpenCV.mk from default install location

include /home/wesong/software/OpenCV-2.4.10-android-sdk/sdk/native/jni/OpenCV.mk

else

include $(OPENCV_MK_PATH)

endif

LOCAL_MODULE := Native

FILE_LIST := $(wildcard $(LOCAL_PATH)/stasm/*.cpp) \
$(wildcard $(LOCAL_PATH)/stasm/MOD_1/*.cpp)

LOCAL_SRC_FILES := Native.cpp \
$(FILE_LIST:$(LOCAL_PATH)/%=%)

LOCAL_LDLIBS += -llog -ldl

include $(BUILD_SHARED_LIBRARY)

# other library

include $(CLEAR_VARS)

LOCAL_MODULE := opencv_java-prebuild

LOCAL_SRC_FILES := libopencv_java.so

include $(PREBUILT_SHARED_LIBRARY)

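Pay special attention to this: when the NDK builds the code on Ubuntu, it automatically deletes shared library files that already exist. Our Android project needs the libopencv_java.so library provided by OpenCV4Android, but every time the JNI code is built, the NDK deletes this .so file. The workaround is a small trick: the last part of the Android.mk above declares libopencv_java.so as a prebuilt library.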

This issue puzzled me for a long time and cost me a lot of time to sort out, and it doesn't happen on Windows at all. WTF!

Next, the Java code on the Android side that calls the native JNI code:

package com.example.asm;

public class NativeCode {

static {

System.loadLibrary("Native");

}

/*

* Canny edge detect

* threshold1 = 50

* threshold2 = 150

* aperatureSize = 3

*/

public static native void DoCanny(long matAddr_src, long matAddr_dst, double threshold1,

double threshold2, int aperatureSize);

/*

* do face detect

* scaleFactor = 1.1

* minNeighbors = 2

* minSize = 30 (30 * 30)

*/

public static native void FaceDetect(long matAddr_src, long matAddr_dst,

double scaleFactor, int minNeighbors, int minSize);

/*

* do ASM

* find landmarks

*/

public static native int[] FindFaceLandmarks(long matAddr, float ratioW, float ratioH);

}
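On the Java side, the `int[]` returned by `FindFaceLandmarks` holds either x,y pairs or, on failure, the same negative error code (-1, -2 or -3) in its first two elements. Below is a minimal sketch of how a caller might interpret it, assuming a hypothetical `LandmarkResult` helper that is not part of the project.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for the int[] returned by NativeCode.FindFaceLandmarks.
// Layout written by the native code: {x0, y0, x1, y1, ...} on success, or the same
// negative error code in out[0] and out[1] on failure.
final class LandmarkResult {
    static final int OK = 0;
    static final int ERR_ILLEGAL_MAT = -1; // illegal input Mat
    static final int ERR_ASM_INIT = -2;    // ASM (stasm) initialization failed
    static final int ERR_NO_FACE = -3;     // no face detected

    private LandmarkResult() {}

    /** Returns one of the error codes above, or OK if the array holds coordinates. */
    static int errorCode(int[] out) {
        if (out == null || out.length < 2) {
            return ERR_ILLEGAL_MAT; // assumption: treat a missing result like bad input
        }
        boolean isCode = out[0] == ERR_ILLEGAL_MAT || out[0] == ERR_ASM_INIT
                || out[0] == ERR_NO_FACE;
        return (isCode && out[1] == out[0]) ? out[0] : OK;
    }

    /** Splits {x0, y0, x1, y1, ...} into a list of {x, y} points. */
    static List<int[]> toPoints(int[] out) {
        int code = errorCode(out);
        if (code != OK) {
            throw new IllegalArgumentException("landmark search failed, code " + code);
        }
        if (out.length % 2 != 0) {
            throw new IllegalArgumentException("expected x,y pairs, got odd length");
        }
        List<int[]> points = new ArrayList<>(out.length / 2);
        for (int i = 0; i < out.length; i += 2) {
            points.add(new int[] {out[i], out[i + 1]});
        }
        return points;
    }
}
```

One caveat of this in-band convention: a first landmark that really lay at (-1, -1), (-2, -2) or (-3, -3) would be read as an error; returning the status separately from the coordinates would remove that ambiguity.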

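Next comes the main program. It contains a number of tricks meant to keep the app efficient: ASM is very computationally expensive and takes a long time on a phone, so it definitely cannot run on the UI thread.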

This app uses an AsyncTask to locate the facial landmarks with ASM:

private class AsyncAsm extends AsyncTask<Mat, Void, List<Integer>> {

private Context context;

private Mat src;

public AsyncAsm(Context context) {

this.context = context;

}

@Override

protected List<Integer> doInBackground(Mat... mat0) {

List<Integer> list = new ArrayList<Integer>();

Mat src = mat0[0];

this.src = src;

int[] points = NativeImageUtil.FindFaceLandmarks(src, 1, 1);

for (int i = 0; i < points.length; i++) {

list.add(points[i]);

}

return list;

}

// run on UI thread

@Override

protected void onPostExecute(List<Integer> list) {

MainActivity.this.drawAsmPoints(this.src, list);

}

};
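`AsyncTask` has been deprecated since Android API level 30. The same split between background work and a callback on the UI side can be sketched with `java.util.concurrent`; this is a swapped-in technique, not what the app uses. `BackgroundRunner` is a hypothetical name, and the UI executor is passed in so the sketch runs on a plain JVM (on Android it would post to the main thread, for example through a `Handler` bound to `Looper.getMainLooper()`).

```java
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Sketch of the AsyncTask split using java.util.concurrent: heavy work runs on a
// single background thread, and the result is handed to a "UI" executor, the role
// onPostExecute() plays in AsyncAsm.
final class BackgroundRunner implements AutoCloseable {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final Executor uiExecutor;

    BackgroundRunner(Executor uiExecutor) {
        this.uiExecutor = uiExecutor;
    }

    /** background ~ doInBackground(), onResult ~ onPostExecute(). */
    <T> void run(Supplier<T> background, Consumer<T> onResult) {
        worker.execute(() -> {
            T result = background.get();                       // off the UI thread
            uiExecutor.execute(() -> onResult.accept(result)); // delivered to the "UI" side
        });
    }

    @Override
    public void close() {
        worker.shutdown(); // lets queued work finish, then stops the thread
    }
}
```

For the ASM case, `background` would wrap the native landmark call and `onResult` would call `drawAsmPoints`.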

In addition, the main screen runs face detection in real time, on a separate thread:

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

Log.d(TAG, "onPreviewFrame");

// android.hardware.Camera.Size, not org.opencv.core.Size
Camera.Size size = camera.getParameters().getPreviewSize();

Bitmap bitmap = ImageUtils.yuv2bitmap(data, size.width, size.height);

Mat src = new Mat();

Utils.bitmapToMat(bitmap, src);

src.copyTo(currentFrame);

Log.d("com.example.asm.CameraPreview", "image size: w: " + src.width()

+ " h: " + src.height());

// do canny

Mat canny_mat = new Mat();

Imgproc.Canny(src, canny_mat, Params.CannyParams.THRESHOLD1,

Params.CannyParams.THRESHOLD2);

Bitmap canny_bitmap = ImageUtils.mat2Bitmap(canny_mat);

iv_canny.setImageBitmap(canny_bitmap);

// do face detect in Thread

faceDetectThread.assignTask(Params.DO_FACE_DETECT, src);

}
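`onPreviewFrame` receives the raw preview buffer, which is NV21 (YUV420SP) unless another preview format has been configured. `ImageUtils.yuv2bitmap` is not shown here, so the following is only one possible way to do that conversion in pure Java, assuming even frame dimensions and using JFIF (full-range BT.601) coefficients; `Nv21.toArgb` is a hypothetical name.

```java
// Hypothetical NV21 -> ARGB_8888 conversion in pure Java (JFIF / full-range BT.601
// coefficients). NV21 = full Y plane followed by interleaved V,U samples, one pair
// per 2x2 block of pixels.
final class Nv21 {
    private Nv21() {}

    static int[] toArgb(byte[] nv21, int width, int height) {
        if ((width & 1) != 0 || (height & 1) != 0) {
            throw new IllegalArgumentException("width and height must be even");
        }
        int frameSize = width * height;
        if (nv21.length < frameSize + frameSize / 2) {
            throw new IllegalArgumentException("buffer too small for an NV21 frame");
        }
        int[] argb = new int[frameSize];
        for (int j = 0; j < height; j++) {
            int uvRow = frameSize + (j >> 1) * width;      // chroma row shared by 2 luma rows
            for (int i = 0; i < width; i++) {
                int y = nv21[j * width + i] & 0xFF;
                int uvIndex = uvRow + (i & ~1);             // chroma pair shared by 2 columns
                int v = (nv21[uvIndex] & 0xFF) - 128;       // NV21 stores V before U
                int u = (nv21[uvIndex + 1] & 0xFF) - 128;
                int r = clamp(Math.round(y + 1.402f * v));
                int g = clamp(Math.round(y - 0.344136f * u - 0.714136f * v));
                int b = clamp(Math.round(y + 1.772f * u));
                argb[j * width + i] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        }
        return argb;
    }

    private static int clamp(int c) {
        return c < 0 ? 0 : Math.min(c, 255);
    }
}
```

On Android the resulting array could then be wrapped with `Bitmap.createBitmap(argb, width, height, Bitmap.Config.ARGB_8888)` before `Utils.bitmapToMat`.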

The thread is defined as follows:

package com.example.asm;

import org.opencv.core.Mat;

import android.content.Context;

import android.os.Handler;

import android.os.Looper;

import android.os.Message;

public class FaceDetectThread extends Thread {

private final String TAG = "com.example.asm.FaceDetectThread";

private Context mContext;

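// written by this thread in run(), read by callers of assignTask() on the UI thread
private volatile Handler mHandler;

private ImageUtils imageUtils;

public FaceDetectThread(Context context) {

mContext = context;

imageUtils = new ImageUtils(context);

}

public void assignTask(int id, Mat src) {

// do face detect

// mHandler stays null until run() has executed, so frames that arrive before the
// thread's Looper is ready are dropped instead of causing a NullPointerException
Handler handler = this.mHandler;

if (id == Params.DO_FACE_DETECT && handler != null) {

Message msg = new Message();

msg.what = Params.DO_FACE_DETECT;

msg.obj = src;

handler.sendMessage(msg);

}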

}

@Override

public void run() {

Looper.prepare();

mHandler = new Handler() {

@Override

public void handleMessage(Message msg) {

if (msg.what == Params.DO_FACE_DETECT) {

Mat detected = new Mat();

Mat face = new Mat();

Mat src = (Mat) msg.obj;

detected = imageUtils.detectFacesAndExtractFace(src, face);

Message uiMsg = new Message();

uiMsg.what = Params.FACE_DETECT_DONE;

uiMsg.obj = detected;

// send Message to UI

((MainActivity) mContext).mHandler.sendMessage(uiMsg);

}

}

};

Looper.loop();

}

}
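`FaceDetectThread` combines a `Looper` and a `Handler` so that frames are processed one at a time on a dedicated thread. The same idea can be sketched in plain Java with a `BlockingQueue`; this is an analogue for illustration, not the Android API, and `WorkerThread` is a hypothetical name. Because the queue exists before the thread starts, `post()` is safe to call immediately, whereas `mHandler` above is only assigned once `run()` has executed.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Plain-Java analogue (not the Android API) of FaceDetectThread: one worker thread
// owns a message queue and handles tasks in order, as Looper.loop() + Handler do.
final class WorkerThread<T> extends Thread {
    private static final Object QUIT = new Object(); // sentinel, like Looper.quit()
    private final BlockingQueue<Object> queue = new LinkedBlockingQueue<>();
    private final Consumer<T> handler;

    WorkerThread(Consumer<T> handler) {
        this.handler = handler;
    }

    /** Counterpart of assignTask(): callable from any thread, even before start(). */
    void post(T task) {
        queue.add(task);
    }

    void quit() {
        queue.add(QUIT);
    }

    @Override
    @SuppressWarnings("unchecked")
    public void run() {
        try {
            while (true) {
                Object msg = queue.take();   // blocks until a task arrives
                if (msg == QUIT) {
                    return;
                }
                handler.accept((T) msg);     // counterpart of handleMessage()
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

If preview frames arrive faster than detection finishes, an unbounded queue like this (or the Handler's message queue) keeps growing; clearing pending tasks before posting, so only the newest frame is kept, is one common way to bound the backlog.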

That's already quite a lot of code, so please refer to the source code for the rest.

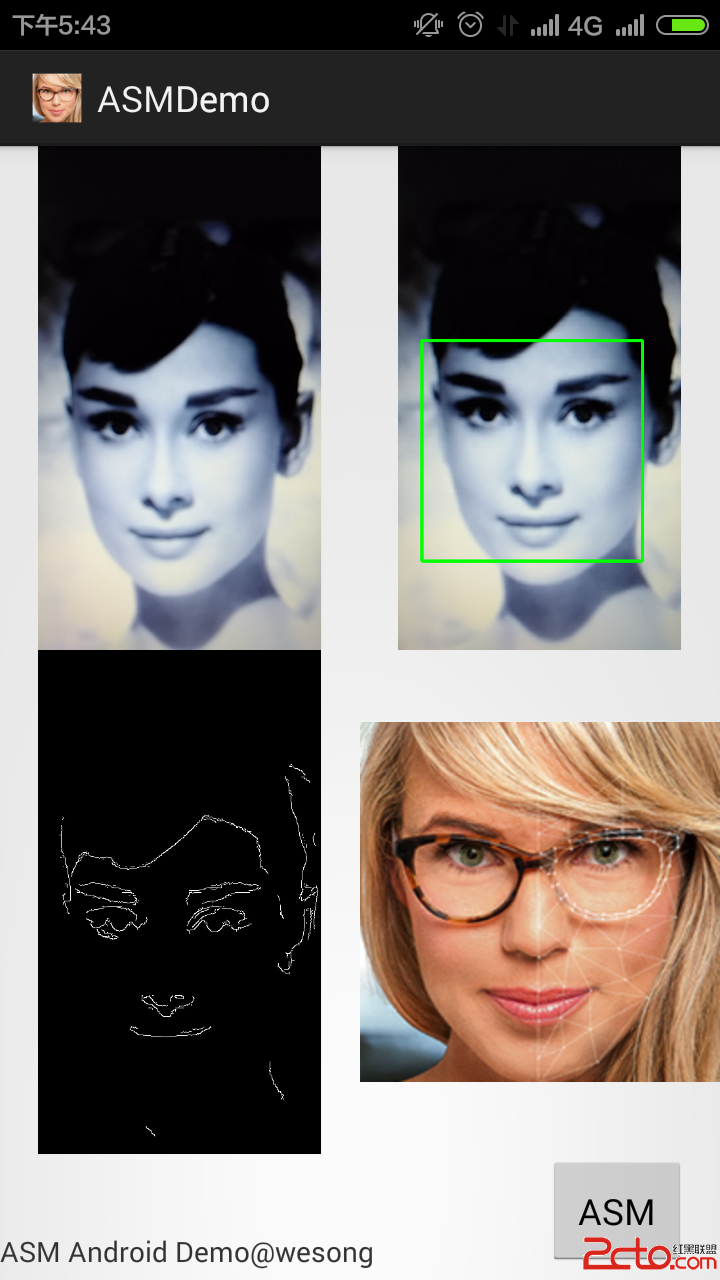

Below are a few screenshots of the app. I'm on a tight budget, so I used a Redmi 1S, whose performance isn't great; please bear with me...

Thanks also to Google for the Audrey Hepburn photo. And once again: repost responsibly and credit the source, dammit.

After the app starts:

Because ASM computation is relatively time-consuming, a button is provided to run ASM on the most recent face. After running ASM:

![image](/uploadfile/Collfiles/20150724/20150724083539163.png)


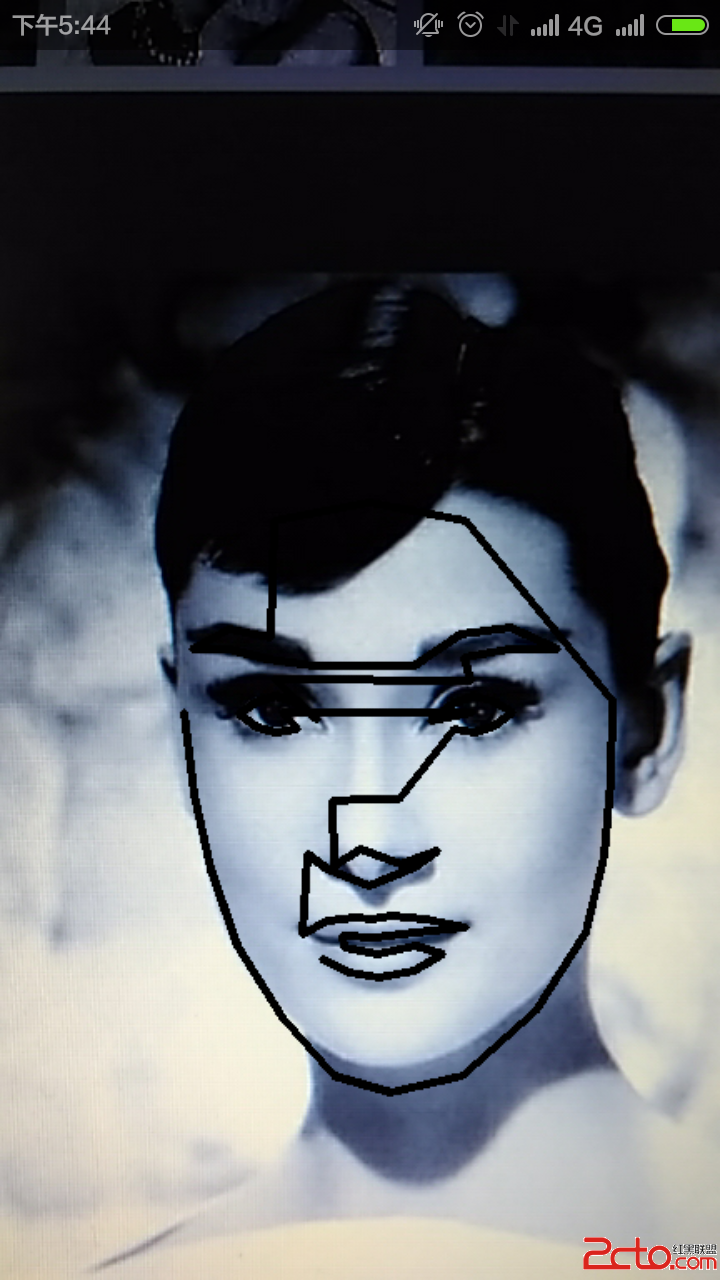

Then tap the fourth panel to view the image with the ASM landmarks:

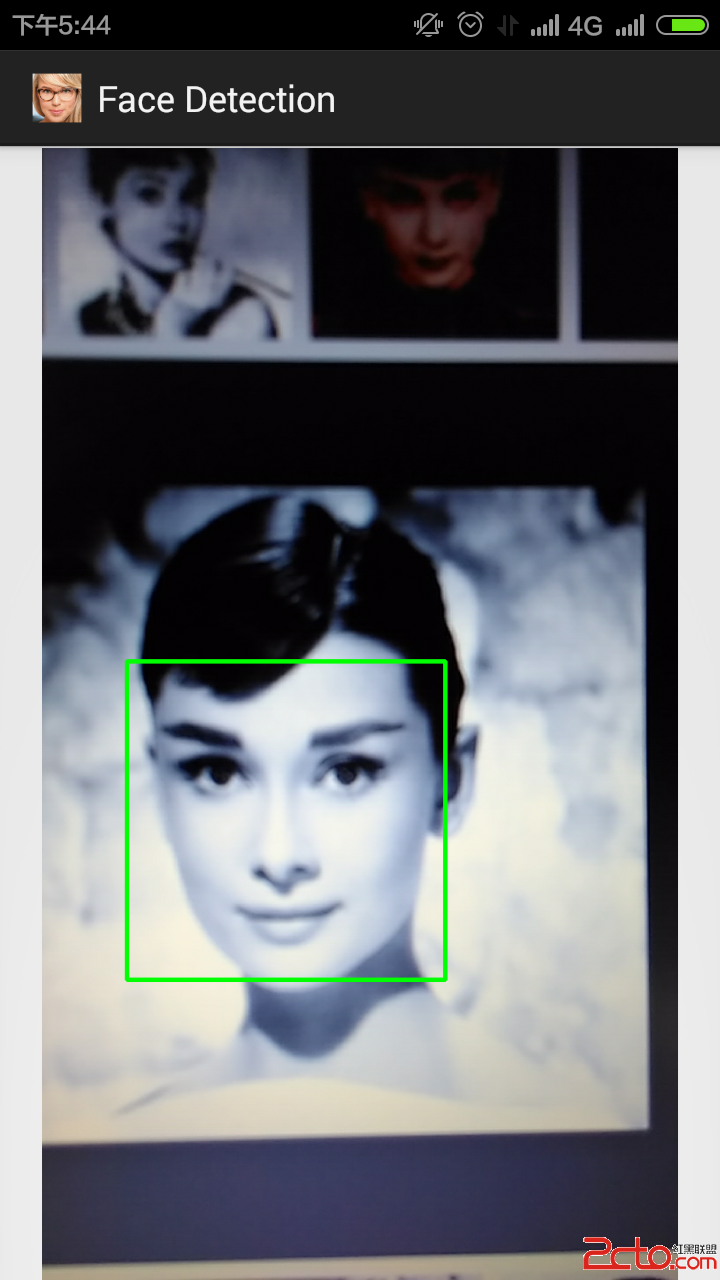

Tapping the second (face detection) panel opens a face detection Activity:

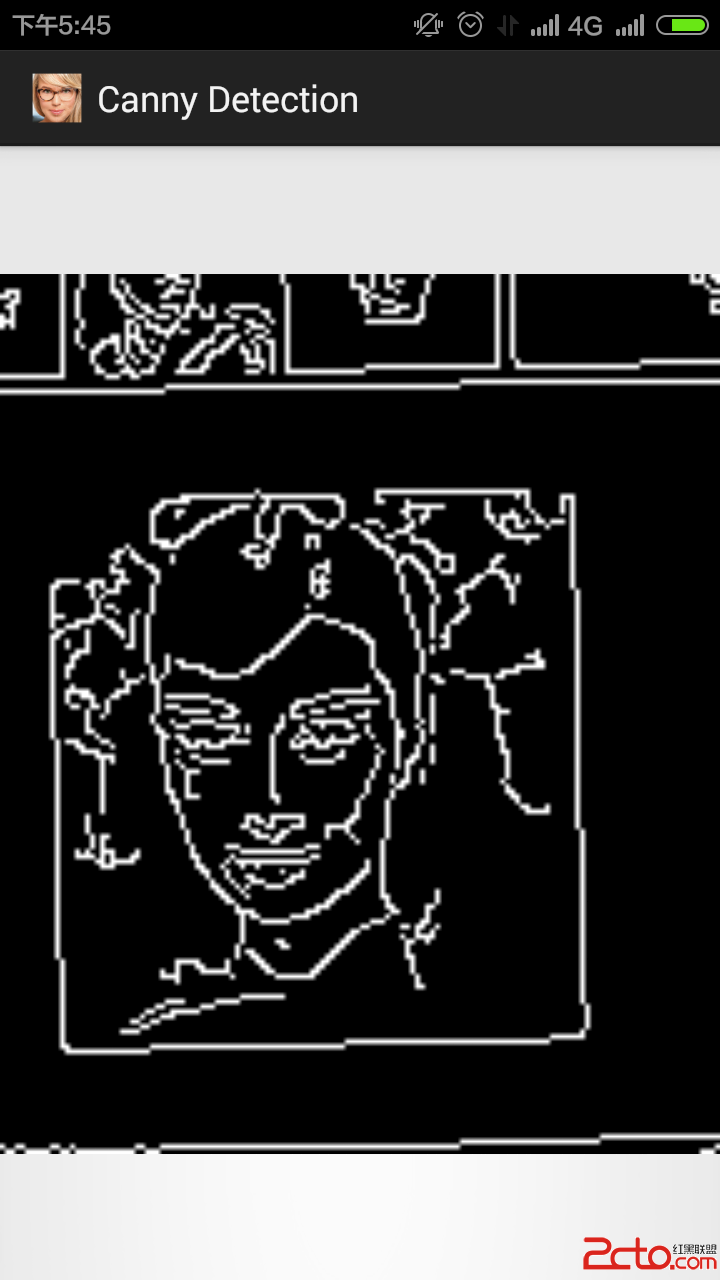

Tapping the third panel opens the Canny edge detection Activity:

一步一步實現直播和彈幕

一步一步實現直播和彈幕

序言最近在研究直播的彈幕,東西有點多,准備記錄一下免得自己忘了又要重新研究,也幫助有這方面需要的同學少走點彎路。關於直播的技術細節其實就是兩個方面一個是推流一個是拉流,而

android使用PopupWindow實現頁面點擊頂部彈出下拉菜單

android使用PopupWindow實現頁面點擊頂部彈出下拉菜單

實現此功能沒有太多的技術難點,主要通過PopupWindow方法,同時更進一步加深了PopupWindow的使用,實現點擊彈出一個自定義的view,view裡面可以自由設

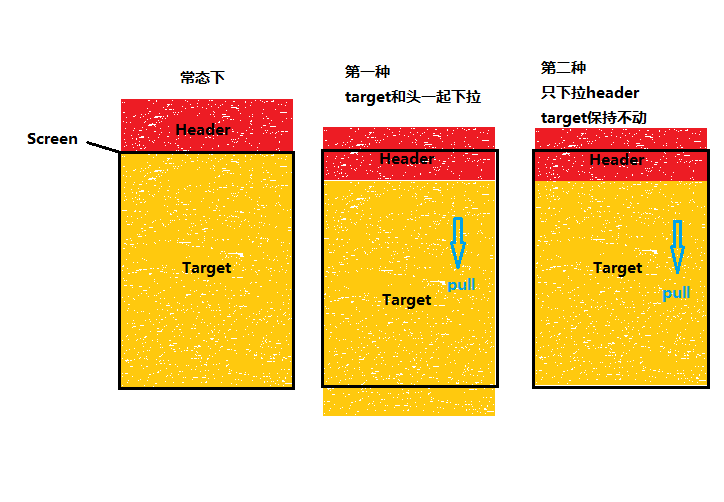

Android 怎麼實現支持所有View的通用的下拉刷新控件

Android 怎麼實現支持所有View的通用的下拉刷新控件

下拉刷新對於一個app來說是必不可少的一個功能,在早期大多數使用的是chrisbanes的PullToRefresh,或是修改自該框架的其他庫。而到現在已經有了更多的選擇

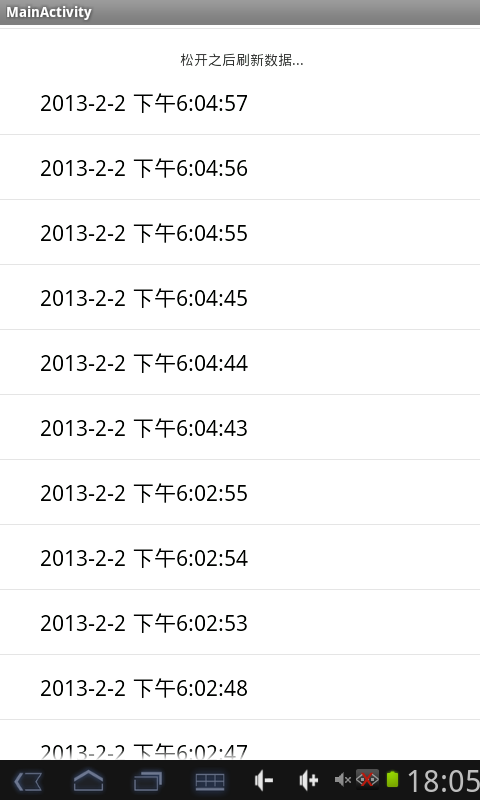

Android開發之ListView列表刷新和加載更多實現方法

Android開發之ListView列表刷新和加載更多實現方法

本文實例講述了Android開發之ListView列表刷新和加載更多實現方法。分享給大家供大家參考。具體如下:上下拉實現刷新和加載更多的ListView,如下:packa