
When you first learn OpenGL ES, every OpenGL-flavored "hello world" that teaches you to put a texture on the screen contains these two arrays:

static final float COORD[] = {

-1.0f, -1.0f,

1.0f, -1.0f,

-1.0f, 1.0f,

1.0f, 1.0f,

};

static final float TEXTURE_COORD[] = {

0.0f, 1.0f,

1.0f, 1.0f,

0.0f, 0.0f,

1.0f, 0.0f,

};

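But almost none of them explain the arrays, so while learning I never understood why the points are written this way or whether their order follows some rule. After a lot of searching and experimenting, I finally figured it out.

PS: I am learning OpenGL ES mainly for 2D texturing, so the Z axis is not covered here.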

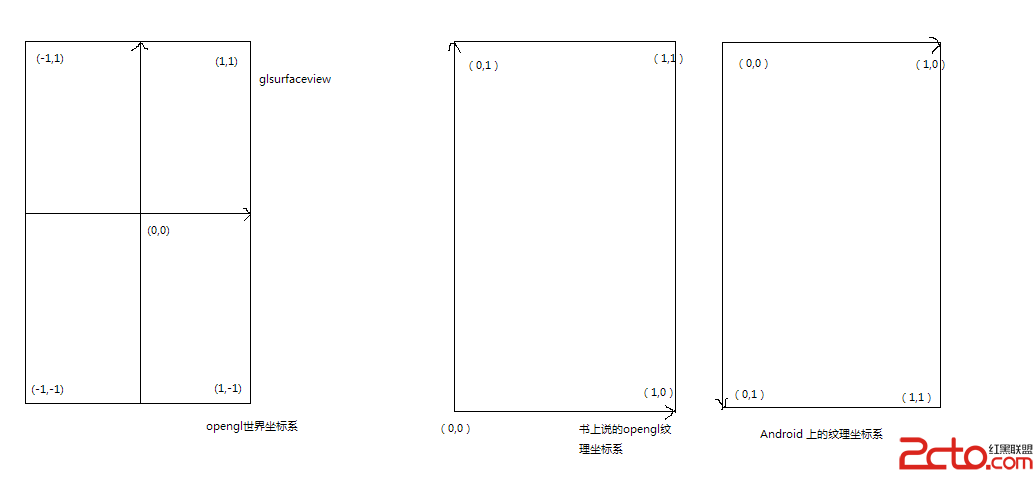

As the figure shows, the first diagram is OpenGL's world coordinate system, which is uncontroversial. The catch is that many tutorials say texture coordinates have their origin at the bottom-left. In practice, on Android the rightmost diagram is the one that applies: the origin is at the top-left.

My personal guess is that a texture is really just an array of color points. Android UI coordinates put the origin at the top-left, so the color points are stored in the array in a different order, and the coordinate system ends up different.

2. Sample code

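import android.opengl.GLES20;

import java.nio.ByteBuffer;

import java.nio.ByteOrder;

import java.nio.FloatBuffer;

// GLHelper (loadProgram, NO_TEXTURE) is the author's own helper class and is not shown here.

public class Filter {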

protected static final String VERTEX_SHADER = "" +

"attribute vec4 position;\n" +

"attribute vec4 inputTextureCoordinate;\n" +

" \n" +

"varying vec2 textureCoordinate;\n" +

" \n" +

"void main()\n" +

"{\n" +

" gl_Position = position;\n" +

" textureCoordinate = inputTextureCoordinate.xy;\n" +

"}";

protected static final String FRAGMENT_SHADER = "" +

"varying highp vec2 textureCoordinate;\n" +

" \n" +

"uniform sampler2D inputImageTexture;\n" +

" \n" +

"void main()\n" +

"{\n" +

" gl_FragColor = texture2D(inputImageTexture, textureCoordinate);\n" +

"}";

static final float COORD1[] = {

-1.0f, -1.0f,

1.0f, -1.0f,

-1.0f, 1.0f,

1.0f, 1.0f,

};

static final float TEXTURE_COORD1[] = {

0.0f, 1.0f,

1.0f, 1.0f,

0.0f, 0.0f,

1.0f, 0.0f,

};

static final float COORD2[] = {

-1.0f, 1.0f,

-1.0f, -1.0f,

1.0f, 1.0f,

1.0f, -1.0f,

};

static final float TEXTURE_COORD2[] = {

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 0.0f,

1.0f, 1.0f,

};

static final float COORD3[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD3[] = {

1.0f, 1.0f,

1.0f, 0.0f,

0.0f, 1.0f,

0.0f, 0.0f,

};

static final float COORD4[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD4[] = {

1.0f, 1.0f,

1.0f, 0.0f,

0.0f, 1.0f,

0.0f, 0.0f,

};

static final float COORD_REVERSE[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD_REVERSE[] = {

1.0f, 0.0f,

1.0f, 1.0f,

0.0f, 0.0f,

0.0f, 1.0f,

};

static final float COORD_FLIP[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD_FLIP[] = {

0.0f, 1.0f,

0.0f, 0.0f,

1.0f, 1.0f,

1.0f, 0.0f,

};

private String mVertexShader;

private String mFragmentShader;

private FloatBuffer mCubeBuffer;

private FloatBuffer mTextureCubeBuffer;

protected int mProgId;

protected int mAttribPosition;

protected int mAttribTexCoord;

protected int mUniformTexture;

public Filter() {

this(VERTEX_SHADER, FRAGMENT_SHADER);

}

public Filter(String vertexShader, String fragmentShader) {

mVertexShader = vertexShader;

mFragmentShader = fragmentShader;

}

public void init() {

loadVertex();

initShader();

GLES20.glBlendFunc(GLES20.GL_ONE, GLES20.GL_ONE_MINUS_SRC_ALPHA);

}

public void loadVertex() {

float[] coord = COORD1;

float[] texture_coord = TEXTURE_COORD1;

mCubeBuffer = ByteBuffer.allocateDirect(coord.length * 4)

.order(ByteOrder.nativeOrder())

.asFloatBuffer();

mCubeBuffer.put(coord).position(0);

mTextureCubeBuffer = ByteBuffer.allocateDirect(texture_coord.length * 4)

.order(ByteOrder.nativeOrder())

.asFloatBuffer();

mTextureCubeBuffer.put(texture_coord).position(0);

}

public void initShader() {

mProgId = GLHelper.loadProgram(mVertexShader, mFragmentShader);

mAttribPosition = GLES20.glGetAttribLocation(mProgId, "position");

mUniformTexture = GLES20.glGetUniformLocation(mProgId, "inputImageTexture");

mAttribTexCoord = GLES20.glGetAttribLocation(mProgId,

"inputTextureCoordinate");

}

public void drawFrame(int glTextureId) {

if (!GLES20.glIsProgram(mProgId)) {

initShader();

}

GLES20.glUseProgram(mProgId);

mCubeBuffer.position(0);

GLES20.glVertexAttribPointer(mAttribPosition, 2, GLES20.GL_FLOAT, false, 0, mCubeBuffer);

GLES20.glEnableVertexAttribArray(mAttribPosition);

mTextureCubeBuffer.position(0);

GLES20.glVertexAttribPointer(mAttribTexCoord, 2, GLES20.GL_FLOAT, false, 0,

mTextureCubeBuffer);

GLES20.glEnableVertexAttribArray(mAttribTexCoord);

if (glTextureId != GLHelper.NO_TEXTURE) {

GLES20.glActiveTexture(GLES20.GL_TEXTURE0);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, glTextureId);

GLES20.glUniform1i(mUniformTexture, 0);

}

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

GLES20.glDisableVertexAttribArray(mAttribPosition);

GLES20.glDisableVertexAttribArray(mAttribTexCoord);

GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);

GLES20.glDisable(GLES20.GL_BLEND);

}

}

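Take this call in particular:

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

OpenGL ES is a trimmed-down subset of OpenGL, so apart from points and lines the only shape it can draw directly is the triangle; anything more complex has to be assembled from triangles. GLES20.GL_TRIANGLE_STRIP is one of the triangle drawing modes. Here it is applied to this vertex array: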

static final float COORD[] = {

-1.0f, -1.0f, //1

1.0f, -1.0f, //2

-1.0f, 1.0f, //3

1.0f, 1.0f, //4

};

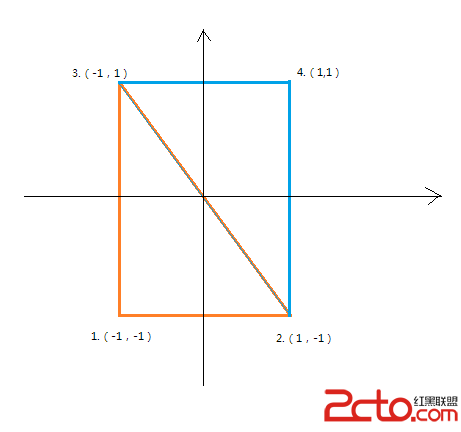

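What actually gets drawn is the triangle formed by vertices 1, 2, 3 plus the triangle formed by vertices 2, 3, 4, which together make a rectangle. With more points the pattern continues: five points, for example, give the three triangles 1,2,3, 2,3,4 and 3,4,5. See the figure below: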

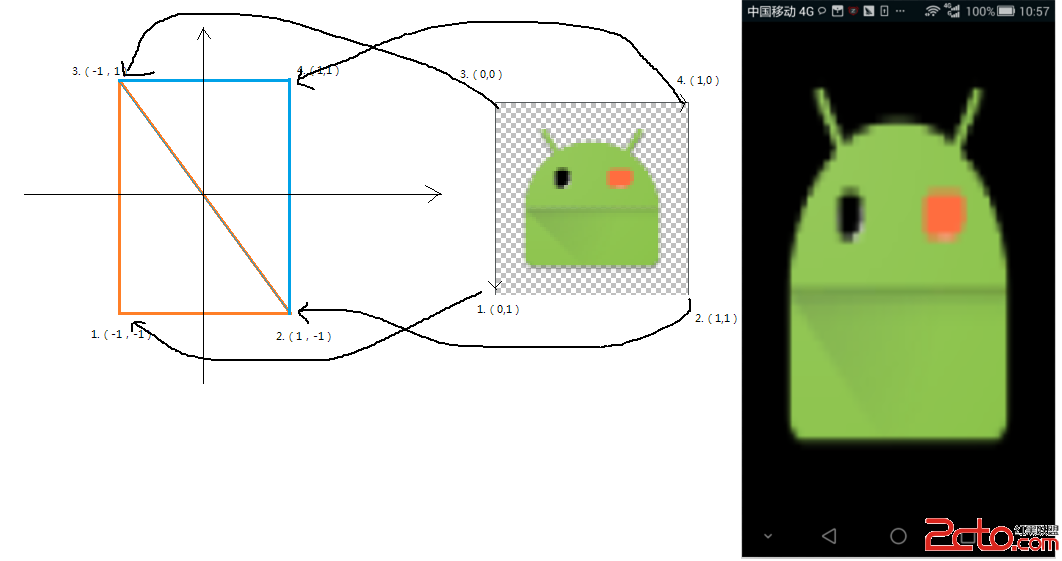
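The grouping rule described above can be sketched in a few lines of Java. This is only an illustration: the class and method names (`StripTriangles`, `triangles`) are made up for this example, the indices are 1-based to match the text, and the sketch ignores the winding-order bookkeeping that the GPU does for alternate triangles.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the demo project.
final class StripTriangles {
    /** Lists the vertex-index triples (1-based) that a triangle strip of the given size is made of. */
    static List<int[]> triangles(int vertexCount) {
        List<int[]> result = new ArrayList<>();
        // Every window of three consecutive vertices forms one triangle.
        for (int i = 1; i + 2 <= vertexCount; i++) {
            result.add(new int[] {i, i + 1, i + 2});
        }
        return result;
    }

    public static void main(String[] args) {
        // Five vertices give the triangles 1,2,3 / 2,3,4 / 3,4,5.
        for (int[] t : triangles(5)) {
            System.out.println(t[0] + "," + t[1] + "," + t[2]);
        }
    }
}
```

A strip of n vertices therefore yields n - 2 triangles, which is why four vertices are enough for the full-screen rectangle.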

Texture points and world-coordinate points correspond one to one:

static final float COORD1[] = {

-1.0f, -1.0f,

1.0f, -1.0f,

-1.0f, 1.0f,

1.0f, 1.0f,

};

static final float TEXTURE_COORD1[] = {

0.0f, 1.0f,

1.0f, 1.0f,

0.0f, 0.0f,

1.0f, 0.0f,

};

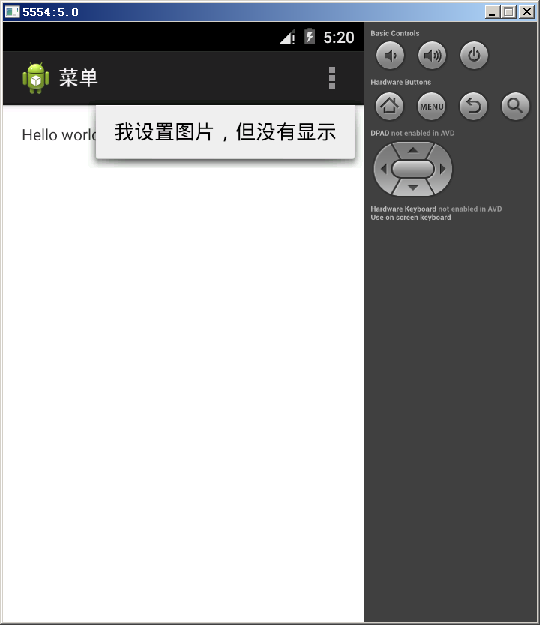

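The rendered result is shown in the figure:

As the arrows in the figure indicate, OpenGL draws the color at each texture vertex onto the corresponding world-coordinate vertex. For the points in between, it interpolates the texture coordinates across each triangle and samples the texture there. That is why the displayed image is stretched vertically: the source image is 1:1, while in this program the display area set by

GLES20.glViewport(0, 0, width, height);

is taller than it is wide (this involves the mapping from OpenGL world coordinates to screen coordinates, which is off-topic here, so I won't go into it).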
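To make the "points in between" concrete, here is a rough Java sketch of how a texture coordinate could be interpolated inside one triangle using barycentric weights. It is a CPU-side illustration of the idea only, not how the driver is implemented, and the names `TexCoordInterpolation` and `texCoordAt` are invented for this example. The arrays are COORD1 and TEXTURE_COORD1 from the sample code.

```java
// Hypothetical illustration, not part of the demo project.
final class TexCoordInterpolation {
    /**
     * Interpolates the texture coordinate at (px, py) inside the triangle whose
     * corners are vertices a, b, c (0-based indices; two floats per vertex).
     */
    static float[] texCoordAt(float[] pos, float[] tex, int a, int b, int c, float px, float py) {
        float ax = pos[2 * a], ay = pos[2 * a + 1];
        float bx = pos[2 * b], by = pos[2 * b + 1];
        float cx = pos[2 * c], cy = pos[2 * c + 1];
        // Barycentric weights of the point with respect to the three corners.
        float det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy);
        float wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det;
        float wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det;
        float wc = 1f - wa - wb;
        // The texture coordinate is the same weighted mix of the corner values.
        float u = wa * tex[2 * a] + wb * tex[2 * b] + wc * tex[2 * c];
        float v = wa * tex[2 * a + 1] + wb * tex[2 * b + 1] + wc * tex[2 * c + 1];
        return new float[] {u, v};
    }

    public static void main(String[] args) {
        float[] coord = {-1f, -1f, 1f, -1f, -1f, 1f, 1f, 1f};        // COORD1
        float[] textureCoord = {0f, 1f, 1f, 1f, 0f, 0f, 1f, 0f};     // TEXTURE_COORD1
        // A point in the lower-left triangle (vertices 0, 1, 2).
        float[] uv = texCoordAt(coord, textureCoord, 0, 1, 2, -0.5f, -0.5f);
        System.out.println(uv[0] + ", " + uv[1]); // prints "0.25, 0.75"
    }
}
```

For this particular pair of arrays the result is the same as u = (x + 1) / 2 and v = (1 - y) / 2, which matches the top-left texture origin discussed earlier.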

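In other words, as long as each point in the world-coordinate array is paired with the right point in the texture-coordinate array, the order in which the pairs are listed does not matter, provided the positions still form a strip that covers the rectangle.

The four sets of coordinates in the code all render identically: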

static final float COORD1[] = {

-1.0f, -1.0f,

1.0f, -1.0f,

-1.0f, 1.0f,

1.0f, 1.0f,

};

static final float TEXTURE_COORD1[] = {

0.0f, 1.0f,

1.0f, 1.0f,

0.0f, 0.0f,

1.0f, 0.0f,

};

static final float COORD2[] = {

-1.0f, 1.0f,

-1.0f, -1.0f,

1.0f, 1.0f,

1.0f, -1.0f,

};

static final float TEXTURE_COORD2[] = {

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 0.0f,

1.0f, 1.0f,

};

static final float COORD3[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD3[] = {

1.0f, 1.0f,

1.0f, 0.0f,

0.0f, 1.0f,

0.0f, 0.0f,

};

static final float COORD4[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD4[] = {

1.0f, 1.0f,

1.0f, 0.0f,

0.0f, 1.0f,

0.0f, 0.0f,

};

If you don't believe it, try swapping each of them in here:

float[] coord = COORD1;

float[] texture_coord = TEXTURE_COORD1;

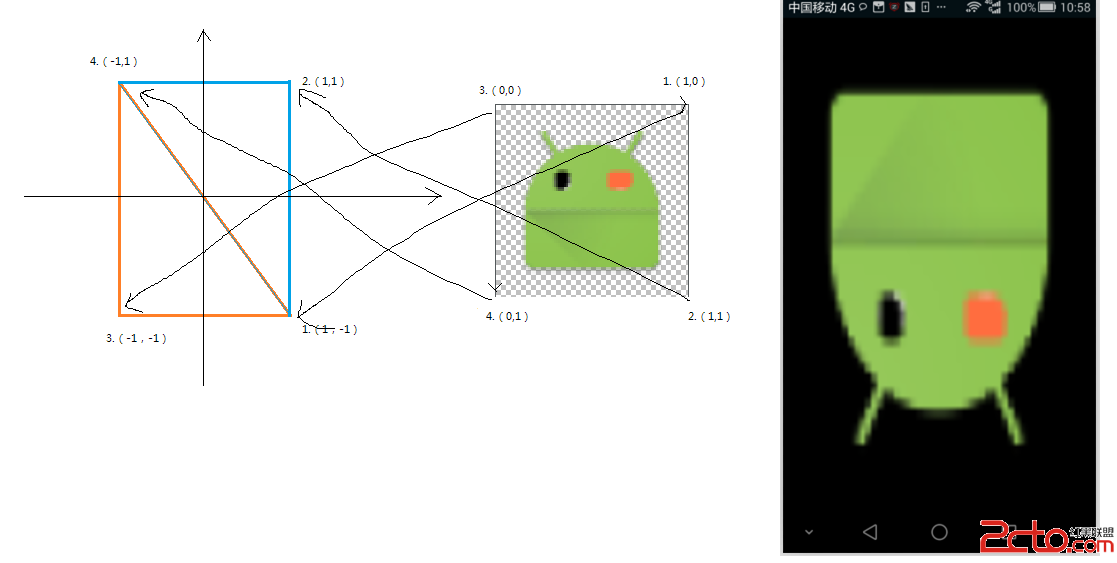
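The claim that these sets are equivalent can also be checked without a device. The following is a small sketch, assuming it is enough to compare which texture coordinate each position is paired with; `MappingCheck` and its methods are hypothetical names used only for this example, and the arrays are copied from the sample code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical check, not part of the demo project.
final class MappingCheck {
    static final float[] COORD1 = {-1f, -1f, 1f, -1f, -1f, 1f, 1f, 1f};
    static final float[] TEXTURE_COORD1 = {0f, 1f, 1f, 1f, 0f, 0f, 1f, 0f};
    static final float[] COORD2 = {-1f, 1f, -1f, -1f, 1f, 1f, 1f, -1f};
    static final float[] TEXTURE_COORD2 = {0f, 0f, 0f, 1f, 1f, 0f, 1f, 1f};
    static final float[] COORD_REVERSE = {1f, -1f, 1f, 1f, -1f, -1f, -1f, 1f};
    static final float[] TEXTURE_COORD_REVERSE = {1f, 0f, 1f, 1f, 0f, 0f, 0f, 1f};

    /** Builds a position -> texture-coordinate lookup from two parallel arrays. */
    static Map<String, String> mapping(float[] pos, float[] tex) {
        Map<String, String> m = new HashMap<>();
        for (int i = 0; i < pos.length; i += 2) {
            m.put(pos[i] + "," + pos[i + 1], tex[i] + "," + tex[i + 1]);
        }
        return m;
    }

    /** True when both pairs of arrays attach the same texture point to every position. */
    static boolean sameMapping(float[] p1, float[] t1, float[] p2, float[] t2) {
        return mapping(p1, t1).equals(mapping(p2, t2));
    }

    public static void main(String[] args) {
        System.out.println(sameMapping(COORD1, TEXTURE_COORD1, COORD2, TEXTURE_COORD2));
        System.out.println(sameMapping(COORD1, TEXTURE_COORD1, COORD_REVERSE, TEXTURE_COORD_REVERSE));
    }
}
```

The first comparison reports the same pairing and the second does not, which is consistent with COORD_REVERSE producing a different picture. Note that the check only compares pairings; it does not verify that the vertex order forms a valid strip.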

To deepen your understanding you can even play a few tricks, for example:

static final float COORD_REVERSE[] = {

1.0f, -1.0f,

1.0f, 1.0f,

-1.0f, -1.0f,

-1.0f, 1.0f,

};

static final float TEXTURE_COORD_REVERSE[] = {

1.0f, 0.0f,

1.0f, 1.0f,

0.0f, 0.0f,

0.0f, 1.0f,

};

...

float[] coord = COORD_REVERSE;

float[] texture_coord = TEXTURE_COORD_REVERSE;

The result is the image drawn upside down, as shown in the figure below:
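Instead of writing the flipped arrays by hand, they can be derived from an upright set. The sketch below assumes texture coordinates in the 0 to 1 range and uses invented names (`FlipTexCoords`, `flipVertical`, `flipHorizontal`); applied to TEXTURE_COORD3 it reproduces TEXTURE_COORD_REVERSE and TEXTURE_COORD_FLIP from the sample code.

```java
import java.util.Arrays;

// Hypothetical helper, not part of the demo project.
final class FlipTexCoords {
    /** Returns a copy with every v component replaced by 1 - v (vertical flip). */
    static float[] flipVertical(float[] tex) {
        float[] out = tex.clone();
        for (int i = 1; i < out.length; i += 2) {
            out[i] = 1.0f - out[i];
        }
        return out;
    }

    /** Returns a copy with every u component replaced by 1 - u (horizontal mirror). */
    static float[] flipHorizontal(float[] tex) {
        float[] out = tex.clone();
        for (int i = 0; i < out.length; i += 2) {
            out[i] = 1.0f - out[i];
        }
        return out;
    }

    public static void main(String[] args) {
        float[] textureCoord3 = {1f, 1f, 1f, 0f, 0f, 1f, 0f, 0f}; // TEXTURE_COORD3
        System.out.println(Arrays.toString(flipVertical(textureCoord3)));
        System.out.println(Arrays.toString(flipHorizontal(textureCoord3)));
    }
}
```

Keeping the position array fixed and transforming only the texture coordinates makes it easier to see that the flip comes from the pairing, not from the geometry.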

https://github.com/yellowcath/GLCoordDemo.git
