Editor: About Android Programming

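The previous articles analyzed Camera2 initialization and the preview flow in detail. This article walks through the Camera2 capture (still photo) flow.

As the preview analysis showed, once preview starts successfully the ShutterButton is enabled and a photo can be taken. The ShutterButton click listener is the onShutterButtonClick method: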

//CaptureModule.java
@Override
public void onShutterButtonClick() {
    // The camera has not been opened
    if (mCamera == null) {
        return;
    }
    int countDownDuration = mSettingsManager.getInteger(SettingsManager
            .SCOPE_GLOBAL, Keys.KEY_COUNTDOWN_DURATION);
    if (countDownDuration > 0) {
        // Start the countdown
        mAppController.getCameraAppUI().transitionToCancel();
        mAppController.getCameraAppUI().hideModeOptions();
        mUI.setCountdownFinishedListener(this);
        mUI.startCountdown(countDownDuration);
        // Will take picture later via listener callback.
    } else {
        // Take the picture immediately
        takePictureNow();
    }
}
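The branching in the listing above can be modeled in a few lines of plain Java. This is only a sketch: ShutterSketch, Action, and the boolean cameraOpen parameter are hypothetical names introduced here, and the real method drives the UI instead of returning a value.

```java
/** Plain-Java model of the shutter decision; not the CaptureModule code. */
class ShutterSketch {
    enum Action { IGNORED, START_COUNTDOWN, CAPTURE_NOW }

    /** Decides what a shutter press does, given camera state and the countdown setting. */
    static Action onShutterButtonClick(boolean cameraOpen, int countDownSeconds) {
        if (!cameraOpen) {
            return Action.IGNORED;              // mirrors the mCamera == null early return
        }
        return countDownSeconds > 0
                ? Action.START_COUNTDOWN        // the picture is taken later, by the countdown listener
                : Action.CAPTURE_NOW;           // corresponds to takePictureNow()
    }

    public static void main(String[] args) {
        System.out.println(onShutterButtonClick(true, 0));
        System.out.println(onShutterButtonClick(true, 3));
        System.out.println(onShutterButtonClick(false, 3));
    }
}
```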

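onShutterButtonClick first reads the camera settings to decide whether the shot should be delayed by a countdown. This article follows the case without a delay, which calls takePictureNow: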

//CaptureModule.java
private void takePictureNow() {
    if (mCamera == null) {
        Log.i(TAG, "Not taking picture since Camera is closed.");
        return;
    }
    // Create and start the capture session
    CaptureSession session = createAndStartCaptureSession();
    // Get the current device orientation in degrees
    int orientation = mAppController.getOrientationManager()
            .getDeviceOrientation().getDegrees();
    // Build the capture parameters; this (the CaptureModule) is the PictureCallback implementation
    PhotoCaptureParameters params = new PhotoCaptureParameters(
            session.getTitle(), orientation, session.getLocation(),
            mContext.getExternalCacheDir(), this, mPictureSaverCallback,
            mHeadingSensor.getCurrentHeading(), mZoomValue, 0);
    // Decorate the session with capture-time information
    decorateSessionAtCaptureTime(session);
    // Take the picture
    mCamera.takePicture(params, session);
}

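takePictureNow first calls createAndStartCaptureSession to create and start a CaptureSession. It then builds the initial capture parameters, for example passing the CaptureModule itself (the argument this) as the picture callback, which is analyzed later. createAndStartCaptureSession looks like this: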

//CaptureModule.java
private CaptureSession createAndStartCaptureSession() {
    // Get the session time
    long sessionTime = getSessionTime();
    // Current location
    Location location = mLocationManager.getCurrentLocation();
    // Build the picture name
    String title = CameraUtil.instance().createJpegName(sessionTime);
    // Create the session
    CaptureSession session = getServices().getCaptureSessionManager()
            .createNewSession(title, sessionTime, location);
    // Start the session
    session.startEmpty(new CaptureStats(mHdrPlusEnabled), new Size(
            (int) mPreviewArea.width(), (int) mPreviewArea.height()));
    return session;
}

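createAndStartCaptureSession gathers the session parameters (the session time, the file name of the photo, and the location), asks the CaptureSessionManager to create a CaptureSession, and then starts it. With the session created and started, the capture flow continues in takePictureNow, which calls the takePicture method of OneCameraImpl: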

//OneCameraImpl.java
@Override
public void takePicture(final PhotoCaptureParameters params, final CaptureSession session) {
    ...
    // Broadcast a not-ready state until this capture has returned
    broadcastReadyState(false);
    // Wrap the capture in a Runnable so that it can be run later
    mTakePictureRunnable = new Runnable() {
        @Override
        public void run() {
            // Take the picture
            takePictureNow(params, session);
        }
    };
    // Store the callback (analyzed later); it is the CaptureModule, which implements PictureCallback
    mLastPictureCallback = params.callback;
    mTakePictureStartMillis = SystemClock.uptimeMillis();
    // If autofocus is still scanning, defer the shot until the lens stops
    if (mLastResultAFState == AutoFocusState.ACTIVE_SCAN) {
        mTakePictureWhenLensIsStopped = true;
    } else {
        // Take the picture now
        takePictureNow(params, session);
    }
}
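The way takePicture postpones the shot is easier to see without the Android types. The following plain-Java sketch models it under stated assumptions: DeferredCaptureSketch, AfState, and onAfStateChanged are hypothetical names, and the excerpt does not show where mTakePictureRunnable is eventually run, so the sketch assumes it fires when a later autofocus update reports that scanning has ended.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java model of "defer the shot until the lens stops"; not the OneCameraImpl code. */
class DeferredCaptureSketch {
    enum AfState { INACTIVE, ACTIVE_SCAN, FOCUSED }

    private AfState lastAfState = AfState.INACTIVE;
    private boolean takePictureWhenLensIsStopped = false;
    private Runnable pendingShot;                 // plays the role of mTakePictureRunnable
    final List<String> log = new ArrayList<>();   // records what happened, for inspection

    /** Shutter pressed: shoot now, or park the shot if a focus scan is running. */
    void takePicture(String title) {
        pendingShot = () -> log.add("captured " + title);
        if (lastAfState == AfState.ACTIVE_SCAN) {
            takePictureWhenLensIsStopped = true;
            log.add("deferred " + title);
        } else {
            pendingShot.run();
        }
    }

    /** Assumed trigger: called for every AF update; releases a parked shot once scanning ends. */
    void onAfStateChanged(AfState state) {
        lastAfState = state;
        if (takePictureWhenLensIsStopped && state != AfState.ACTIVE_SCAN) {
            takePictureWhenLensIsStopped = false;
            pendingShot.run();
        }
    }

    public static void main(String[] args) {
        DeferredCaptureSketch camera = new DeferredCaptureSketch();
        camera.onAfStateChanged(AfState.ACTIVE_SCAN);
        camera.takePicture("IMG_1");              // parked: the lens is still moving
        camera.onAfStateChanged(AfState.FOCUSED); // releases the parked shot
        camera.takePicture("IMG_2");              // immediate: the lens is idle
        System.out.println(camera.log);
    }
}
```

Keeping the shot in a Runnable is what makes the deferral cheap: the same object can be run immediately or handed to whatever code observes the autofocus state.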

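takePicture first broadcasts a not-ready state and stores the picture callback. It then checks whether autofocus is still scanning. If so, it sets mTakePictureWhenLensIsStopped to true, which defers the shot until the lens has stopped moving. Otherwise it calls OneCameraImpl's takePictureNow to issue the capture request: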

//OneCameraImpl.java
public void takePictureNow(PhotoCaptureParameters params, CaptureSession session) {
    long dt = SystemClock.uptimeMillis() - mTakePictureStartMillis;
    try {
        // Build the still-capture request
        CaptureRequest.Builder builder = mDevice.createCaptureRequest(
                CameraDevice.TEMPLATE_STILL_CAPTURE);
        builder.setTag(RequestTag.CAPTURE);
        addBaselineCaptureKeysToRequest(builder);
        // Enable lens-shading correction for even better DNGs.
        if (sCaptureImageFormat == ImageFormat.RAW_SENSOR) {
            builder.set(CaptureRequest.STATISTICS_LENS_SHADING_MAP_MODE,
                    CaptureRequest.STATISTICS_LENS_SHADING_MAP_MODE_ON);
        } else if (sCaptureImageFormat == ImageFormat.JPEG) {
            builder.set(CaptureRequest.JPEG_QUALITY, JPEG_QUALITY);
            builder.set(CaptureRequest.JPEG_ORIENTATION, CameraUtil
                    .getJpegRotation(params.orientation, mCharacteristics));
        }
        // Surface used for the preview
        builder.addTarget(mPreviewSurface);
        // Surface of the ImageReader that receives the captured image
        builder.addTarget(mCaptureImageReader.getSurface());
        CaptureRequest request = builder.build();
        if (DEBUG_WRITE_CAPTURE_DATA) {
            final String debugDataDir = makeDebugDir(params.debugDataFolder,
                    "normal_capture_debug");
            Log.i(TAG, "Writing capture data to: " + debugDataDir);
            CaptureDataSerializer.toFile("Normal Capture", request,
                    new File(debugDataDir, "capture.txt"));
        }
        // Take the picture; mCaptureCallback receives the capture events
        mCaptureSession.capture(request, mCaptureCallback, mCameraHandler);
    } catch (CameraAccessException e) {
        Log.e(TAG, "Could not access camera for still image capture.");
        broadcastReadyState(true);
        params.callback.onPictureTakingFailed();
        return;
    }
    synchronized (mCaptureQueue) {
        mCaptureQueue.add(new InFlightCapture(params, session));
    }
}

As with preview, the app talks to the camera through a CaptureRequest. The photo is taken by calling capture on the session, with mCaptureCallback registered as the capture callback:

//CameraCaptureSessionImpl.java
@Override
public synchronized int capture(CaptureRequest request, CaptureCallback callback,
        Handler handler) throws CameraAccessException {
    ...
    handler = checkHandler(handler, callback);
    return addPendingSequence(mDeviceImpl.capture(request,
            createCaptureCallbackProxy(handler, callback), mDeviceHandler));
}
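The call to createCaptureCallbackProxy(handler, callback) suggests that callback events are re-dispatched onto the caller's handler rather than delivered on the camera device's own thread. A minimal plain-Java sketch of that proxy idea follows. It is an illustration under assumptions, not the framework code: CallbackProxySketch, the two-method CaptureCallback interface, and QueueingExecutor (a stand-in for a Handler's message queue) are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Executor;

/** Plain-Java model of a callback proxy that hops onto the caller's handler. */
class CallbackProxySketch {
    /** Hypothetical, trimmed-down stand-in for CameraCaptureSession.CaptureCallback. */
    interface CaptureCallback {
        void onCaptureStarted(long frameNumber);
        void onCaptureCompleted(long frameNumber);
    }

    /** Wraps a callback so that every event is re-posted to the given executor. */
    static CaptureCallback proxy(Executor handler, CaptureCallback target) {
        return new CaptureCallback() {
            @Override public void onCaptureStarted(long frameNumber) {
                handler.execute(() -> target.onCaptureStarted(frameNumber));
            }
            @Override public void onCaptureCompleted(long frameNumber) {
                handler.execute(() -> target.onCaptureCompleted(frameNumber));
            }
        };
    }

    /** Executor that queues tasks until drained, standing in for a Handler's message queue. */
    static final class QueueingExecutor implements Executor {
        private final List<Runnable> queue = new ArrayList<>();
        @Override public void execute(Runnable task) { queue.add(task); }
        int pending() { return queue.size(); }
        void drain() {
            List<Runnable> batch = new ArrayList<>(queue);
            queue.clear();
            batch.forEach(Runnable::run);
        }
    }

    public static void main(String[] args) {
        QueueingExecutor handler = new QueueingExecutor();
        List<String> events = new ArrayList<>();
        CaptureCallback proxied = proxy(handler, new CaptureCallback() {
            @Override public void onCaptureStarted(long n) { events.add("started " + n); }
            @Override public void onCaptureCompleted(long n) { events.add("completed " + n); }
        });
        proxied.onCaptureStarted(7);     // the "device" side fires the events
        proxied.onCaptureCompleted(7);
        System.out.println(events);      // nothing delivered yet: the tasks are only queued
        handler.drain();                 // the "handler thread" processes its queue
        System.out.println(events);
    }
}
```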

This code mirrors the preview path: the request is added to the set of pending requests. Now look at the CaptureCallback:

//OneCameraImpl.java
private final CameraCaptureSession.CaptureCallback mCaptureCallback =
        new CameraCaptureSession.CaptureCallback() {
    @Override
    public void onCaptureStarted(CameraCaptureSession session, CaptureRequest request,
            long timestamp, long frameNumber) {
        // Same as in the preview flow
        if (request.getTag() == RequestTag.CAPTURE && mLastPictureCallback != null) {
            mLastPictureCallback.onQuickExpose();
        }
    }
    ...
    @Override
    public void onCaptureCompleted(CameraCaptureSession session, CaptureRequest request,
            TotalCaptureResult result) {
        autofocusStateChangeDispatcher(result);
        if (result.get(CaptureResult.CONTROL_AF_STATE) == null) {
            // Check the autofocus state
            AutoFocusHelper.checkControlAfState(result);
        }
        ...
        if (request.getTag() == RequestTag.CAPTURE) {
            InFlightCapture capture = null;
            synchronized (mCaptureQueue) {
                if (mCaptureQueue.getFirst().setCaptureResult(result).isCaptureComplete()) {
                    capture = mCaptureQueue.removeFirst();
                }
            }
            if (capture != null) {
                // The capture has finished
                OneCameraImpl.this.onCaptureCompleted(capture);
            }
        }
        super.onCaptureCompleted(session, request, result);
    }
    ...
};
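The queue handling in onCaptureCompleted only makes sense once it is clear that an in-flight capture needs two pieces of data, the metadata and the image, which may arrive in either order. The sketch below models that in plain Java. InFlightQueueSketch, onResult, and onImage are hypothetical names; the image-side path is not shown in the excerpt and is assumed here to mirror the metadata side, and a String stands in for TotalCaptureResult.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Plain-Java model of the in-flight capture queue; not the OneCameraImpl code. */
class InFlightQueueSketch {
    /** A capture is complete only once both the image and the metadata have arrived. */
    static final class InFlightCapture {
        final String title;
        byte[] image;           // set by the image-arrival path (assumed)
        String captureResult;   // set by the capture-completed path

        InFlightCapture(String title) { this.title = title; }
        InFlightCapture setImage(byte[] image) { this.image = image; return this; }
        InFlightCapture setCaptureResult(String result) { this.captureResult = result; return this; }
        boolean isCaptureComplete() { return image != null && captureResult != null; }
    }

    private final Deque<InFlightCapture> queue = new ArrayDeque<>();

    /** Registers a capture whose request has just been submitted. */
    void add(String title) {
        synchronized (queue) { queue.add(new InFlightCapture(title)); }
    }

    /** Metadata arrived for the oldest capture; returns it if now complete, else null. */
    InFlightCapture onResult(String result) {
        synchronized (queue) {
            // getFirst() assumes at least one capture is in flight
            if (queue.getFirst().setCaptureResult(result).isCaptureComplete()) {
                return queue.removeFirst();
            }
            return null;
        }
    }

    /** Image arrived for the oldest capture; returns it if now complete, else null. */
    InFlightCapture onImage(byte[] image) {
        synchronized (queue) {
            if (queue.getFirst().setImage(image).isCaptureComplete()) {
                return queue.removeFirst();
            }
            return null;
        }
    }

    int size() {
        synchronized (queue) { return queue.size(); }
    }

    public static void main(String[] args) {
        InFlightQueueSketch captures = new InFlightQueueSketch();
        captures.add("IMG_1");
        System.out.println(captures.onResult("metadata") == null);   // still waiting for the image
        InFlightCapture done = captures.onImage(new byte[] {1, 2, 3});
        System.out.println(done.title + " complete, queue size " + captures.size());
    }
}
```

Whichever of the two arrivals comes second removes the entry from the queue, so the downstream save step always sees a capture with both fields populated.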

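These callbacks are invoked as the native layer processes the request. When the capture starts, onCaptureStarted is called, as covered in the preview analysis. When the capture finishes, onCaptureCompleted is called. It checks the autofocus state in the CaptureResult and, if the request tag marks it as a CAPTURE request, attaches the result to the first in-flight capture in the queue. If that capture is now complete, it is removed from the queue because the request has been processed successfully, and OneCameraImpl's onCaptureCompleted method is called to handle it: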

//OneCameraImpl.java
private void onCaptureCompleted(InFlightCapture capture) {
    if (sCaptureImageFormat == ImageFormat.RAW_SENSOR) {
        ...
        File dngFile = new File(RAW_DIRECTORY, capture.session.getTitle() + ".dng");
        writeDngBytesAndClose(capture.image, capture.totalCaptureResult,
                mCharacteristics, dngFile);
    } else {
        // Extract the JPEG bytes from the captured image
        byte[] imageBytes = acquireJpegBytesAndClose(capture.image);
        // Save the JPEG picture
        saveJpegPicture(imageBytes, capture.parameters, capture.session,
                capture.totalCaptureResult);
    }
    broadcastReadyState(true);
    // Invoke the picture callback
    capture.parameters.callback.onPictureTaken(capture.session);
}

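As the code shows, for JPEG output the image data is first extracted from the captured image, then saveJpegPicture is called to save it, and finally the onPictureTaken method of the callback is invoked. The callback is the CaptureModule, which implements the PictureCallback interface, as noted when the parameters were initialized. saveJpegPicture is analyzed first: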

//OneCameraImpl.java
private void saveJpegPicture(byte[] jpegData, final PhotoCaptureParameters captureParams,
        CaptureSession session, CaptureResult result) {
    ...
    ListenableFuture<Optional<Uri>> futureUri = session.saveAndFinish(jpegData, width,
            height, rotation, exif);
    Futures.addCallback(futureUri, new FutureCallback<Optional<Uri>>() {
        @Override
        public void onSuccess(Optional<Uri> uriOptional) {
            captureParams.callback.onPictureSaved(uriOptional.orNull());
        }
        @Override
        public void onFailure(Throwable throwable) {
            captureParams.callback.onPictureSaved(null);
        }
    });
}
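The success and failure handling above can be tried out without Guava or Android. The sketch below substitutes java.util.concurrent.CompletableFuture and java.util.Optional for Guava's ListenableFuture and Optional, and uses a String in place of Uri. SaveCallbackSketch, PictureSavedCallback, and notifyWhenSaved are hypothetical names, and the URI in main is made up for illustration.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.CompletableFuture;

/** Plain-Java model of "report the saved URI, or null on failure". */
class SaveCallbackSketch {
    /** Hypothetical stand-in for the onPictureSaved part of PictureCallback. */
    interface PictureSavedCallback {
        void onPictureSaved(String uriOrNull);
    }

    /** Invokes the callback once the save future settles, whichever way it settles. */
    static void notifyWhenSaved(CompletableFuture<Optional<String>> futureUri,
                                PictureSavedCallback callback) {
        futureUri.whenComplete((uriOptional, error) -> {
            if (error != null) {
                callback.onPictureSaved(null);                      // onFailure branch
            } else {
                callback.onPictureSaved(uriOptional.orElse(null));  // onSuccess branch
            }
        });
    }

    public static void main(String[] args) {
        List<String> seen = new ArrayList<>();
        CompletableFuture<Optional<String>> ok = new CompletableFuture<>();
        CompletableFuture<Optional<String>> failed = new CompletableFuture<>();
        notifyWhenSaved(ok, seen::add);
        notifyWhenSaved(failed, seen::add);
        ok.complete(Optional.of("content://media/external/images/media/1")); // illustrative URI
        failed.completeExceptionally(new IOException("disk full"));
        System.out.println(seen);
    }
}
```

Collapsing both "no URI" and "save failed" into a null argument keeps the callback interface small, at the cost that the receiver cannot tell the two cases apart.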

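Once the save completes, the onPictureSaved callback is invoked with the Uri of the saved picture (or null on failure), so the next step is CaptureModule's onPictureSaved method: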

//CaptureModule.java
@Override
public void onPictureSaved(Uri uri) {
    mAppController.notifyNewMedia(uri);
}

mAppController is implemented by CameraActivity, so the next step is its notifyNewMedia method:

//CameraActivity.java
@Override
public void notifyNewMedia(Uri uri) {
    ...
    if (FilmstripItemUtils.isMimeTypeVideo(mimeType)) {
        // A video was recorded
        sendBroadcast(new Intent(CameraUtil.ACTION_NEW_VIDEO, uri));
        newData = mVideoItemFactory.queryContentUri(uri);
        ...
    } else if (FilmstripItemUtils.isMimeTypeImage(mimeType)) {
        // A photo was taken
        CameraUtil.broadcastNewPicture(mAppContext, uri);
        newData = mPhotoItemFactory.queryContentUri(uri);
        ...
    } else {
        return;
    }
    new AsyncTask<FilmstripItem, Void, FilmstripItem>() {
        @Override
        protected FilmstripItem doInBackground(FilmstripItem... params) {
            FilmstripItem data = params[0];
            MetadataLoader.loadMetadata(getAndroidContext(), data);
            return data;
        }
        ...
    }.execute(newData);
}
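The shape of notifyNewMedia (classify by MIME type, bail out on anything else, then load metadata off the calling thread) can be sketched in plain Java. Assumptions are marked in the comments: NewMediaSketch, Kind, and Item are hypothetical, the prefix test is only a guess at what the isMimeType helpers check, CompletableFuture.supplyAsync stands in for AsyncTask, and the metadata string is a placeholder.

```java
import java.util.concurrent.CompletableFuture;

/** Plain-Java model of the notifyNewMedia flow; not the CameraActivity code. */
class NewMediaSketch {
    enum Kind { VIDEO, IMAGE, UNKNOWN }

    /** Assumed classification rule: decide by the top-level MIME type prefix. */
    static Kind classify(String mimeType) {
        if (mimeType == null) return Kind.UNKNOWN;
        if (mimeType.startsWith("video/")) return Kind.VIDEO;
        if (mimeType.startsWith("image/")) return Kind.IMAGE;
        return Kind.UNKNOWN;
    }

    /** Hypothetical item record; the real FilmstripItem carries far more state. */
    static final class Item {
        final String uri;
        final Kind kind;
        String metadata;   // filled in by the background step
        Item(String uri, Kind kind) { this.uri = uri; this.kind = kind; }
    }

    /** Returns null for unsupported types, else a future that yields the item with metadata loaded. */
    static CompletableFuture<Item> notifyNewMedia(String uri, String mimeType) {
        Kind kind = classify(mimeType);
        if (kind == Kind.UNKNOWN) {
            return null;                               // mirrors the early return
        }
        Item item = new Item(uri, kind);               // stands in for queryContentUri(uri)
        return CompletableFuture.supplyAsync(() -> {
            item.metadata = "loaded:" + item.uri;      // placeholder for MetadataLoader.loadMetadata
            return item;
        });
    }

    public static void main(String[] args) {
        Item photo = notifyNewMedia("content://example/1", "image/jpeg").join();
        System.out.println(photo.kind + " " + photo.metadata);
        System.out.println(notifyNewMedia("content://example/2", "text/plain"));
    }
}
```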

由代碼可知,這裡有兩種數據的處理,一種是video,另一種是image。而我們這裡分析的是capture圖片數據,所以首先會根據在回調函數

傳入的參數Uri和PhotoItemFactory來查詢到相應的拍照數據,然後再開啟一個異步的Task來對此數據進行處理,即通過MetadataLoader的

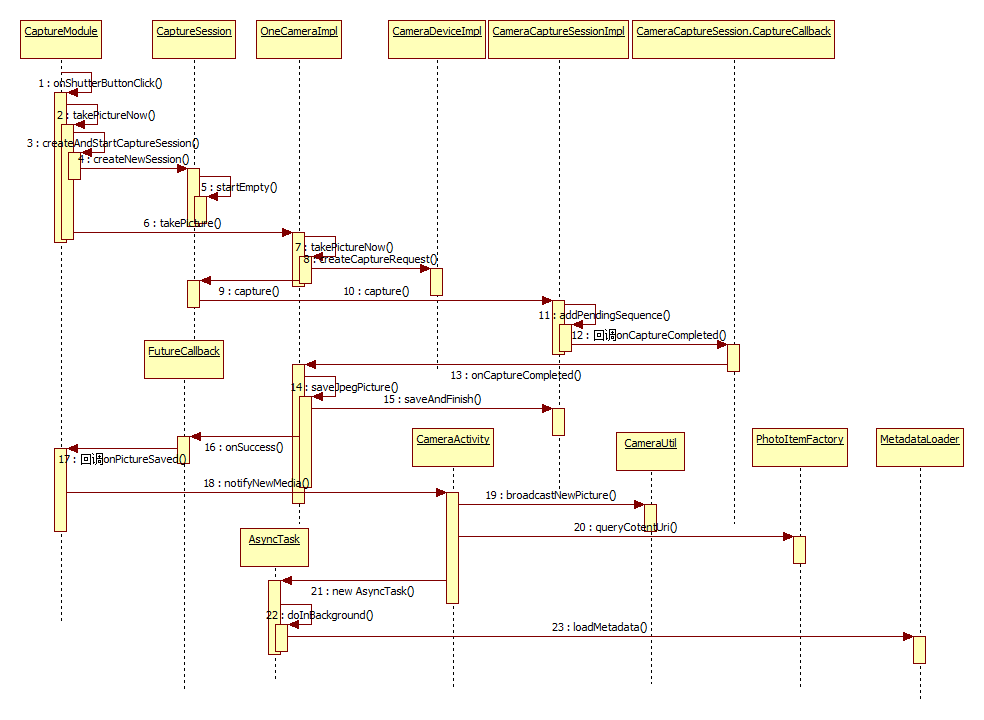

loadMetadata來加載數據,並返回。至此,capture的流程就基本分析結束了,下面將給出capture流程的整個過程中的時序圖:
