
The story starts with the main function at frameworks/native/cmds/servicemanager/service_manager.c:347; this file compiles into the servicemanager binary.

int main(int argc, char **argv)

{

struct binder_state *bs;

bs = binder_open(128*1024); // open /dev/binder and map it into memory

if (!bs) {

ALOGE("failed to open binder driver\n");

return -1;

}

// send the BINDER_SET_CONTEXT_MGR command to /dev/binder

if (binder_become_context_manager(bs)) {

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

selinux_enabled = is_selinux_enabled();

sehandle = selinux_android_service_context_handle();

selinux_status_open(true);

if (selinux_enabled > 0) {

if (sehandle == NULL) {

ALOGE("SELinux: Failed to acquire sehandle. Aborting.\n");

abort();

}

if (getcon(&service_manager_context) != 0) {

ALOGE("SELinux: Failed to acquire service_manager context. Aborting.\n");

abort();

}

}

union selinux_callback cb;

cb.func_audit = audit_callback;

selinux_set_callback(SELINUX_CB_AUDIT, cb);

cb.func_log = selinux_log_callback;

selinux_set_callback(SELINUX_CB_LOG, cb);

binder_loop(bs, svcmgr_handler);

return 0;

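}

Here we meet data structures and functions related to SELinux (the se_xxx names), and we will see more of them later. They belong to the security mechanism Android provides for controlling access to resources. They typically only answer whether access to a given resource is permitted and do not interfere with the business logic, so we can safely ignore them here. The important part is binder_loop(…), shown below: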

frameworks/native/cmds/servicemanager/binder.c:372

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

It loops, issuing BINDER_WRITE_READ on /dev/binder and parsing whatever data it reads back. Next, step into binder_parse(…):

frameworks/native/cmds/servicemanager/binder.c:204

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

#if TRACE

fprintf(stderr,"%s:\n", cmd_name(cmd));

#endif

switch(cmd) {

case BR_NOOP:

break;

case BR_TRANSACTION_COMPLETE:

break;

case BR_INCREFS:

case BR_ACQUIRE:

case BR_RELEASE:

case BR_DECREFS:

#if TRACE

fprintf(stderr," %p, %p\n", (void *)ptr, (void *)(ptr + sizeof(void *)));

#endif

ptr += sizeof(struct binder_ptr_cookie);

break;

case BR_TRANSACTION: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply);

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

ptr += sizeof(*txn);

break;

}

case BR_REPLY: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: reply too small!\n");

return -1;

}

binder_dump_txn(txn);

if (bio) {

bio_init_from_txn(bio, txn);

bio = 0;

} else {

/* todo FREE BUFFER */

}

ptr += sizeof(*txn);

r = 0;

break;

}

case BR_DEAD_BINDER: {

struct binder_death *death = (struct binder_death *)(uintptr_t) *(binder_uintptr_t *)ptr;

ptr += sizeof(binder_uintptr_t);

death->func(bs, death->ptr);

break;

}

case BR_FAILED_REPLY:

r = -1;

break;

case BR_DEAD_REPLY:

r = -1;

break;

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}

The important part is case BR_TRANSACTION: the txn it receives is exactly the tr sent by the client. First, the reply data structure is initialized:

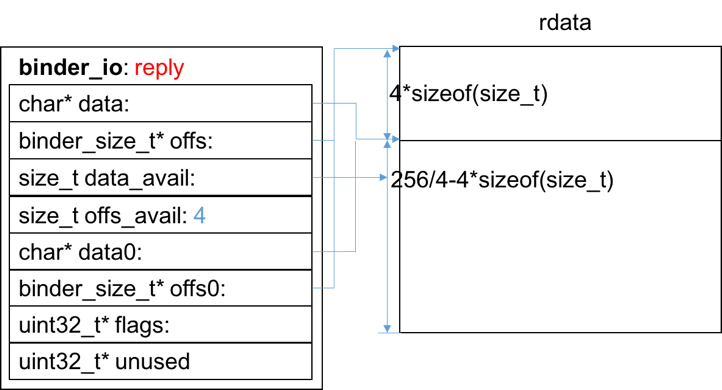

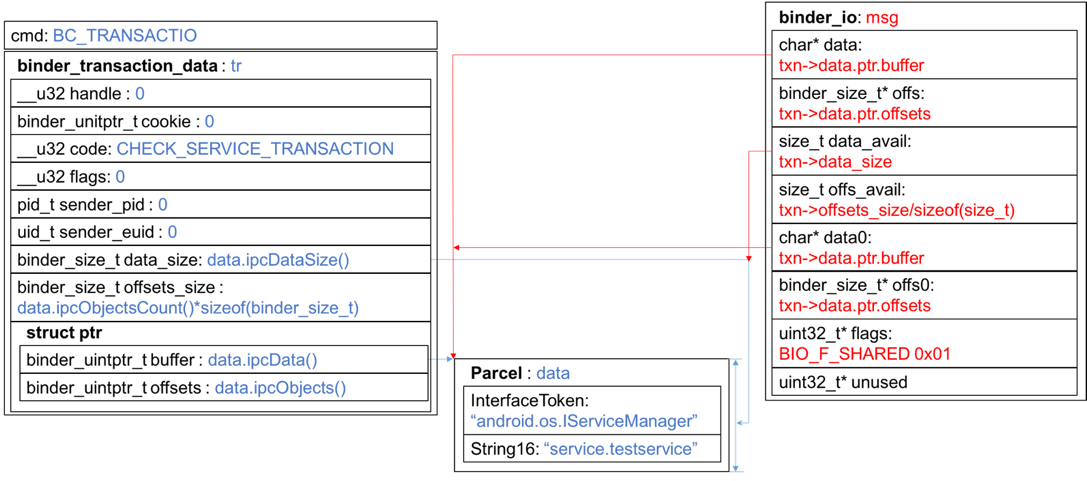

Then msg is initialized. The blue parts are data assembled by the client, and the red parts are data assembled by ServiceManager:

Next comes the call to func(…), a function pointer passed in as a parameter. Tracing back up through binder_loop(…) to main(…), the actual argument turns out to be svcmgr_handler.

frameworks/native/cmds/servicemanager/service_manager.c:244

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

//ALOGI("target=%p code=%d pid=%d uid=%d\n",

// (void*) txn->target.ptr, txn->code, txn->sender_pid, txn->sender_euid);

if (txn->target.ptr != BINDER_SERVICE_MANAGER)

return -1;

if (txn->code == PING_TRANSACTION)

return 0;

// Equivalent to Parcel::enforceInterface(), reading the RPC

// header with the strict mode policy mask and the interface name.

// Note that we ignore the strict_policy and don't propagate it

// further (since we do no outbound RPCs anyway).

// extract the InterfaceToken from the Parcel data sent by the client

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

// svcmgr_id is android.os.IServiceManager, defined at service_manager.c:164

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s, len));

return -1;

}

if (sehandle && selinux_status_updated() > 0) {

struct selabel_handle *tmp_sehandle = selinux_android_service_context_handle();

if (tmp_sehandle) {

selabel_close(sehandle);

sehandle = tmp_sehandle;

}

}

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len); // extract the "service.testservice" string from the Parcel

if (s == NULL) {

return -1;

}

handle = do_find_service(bs, s, len, txn->sender_euid, txn->sender_pid);

if (!handle)

break;

bio_put_ref(reply, handle);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

handle = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

if (do_add_service(bs, s, len, handle, txn->sender_euid,

allow_isolated, txn->sender_pid))

return -1;

break;

case SVC_MGR_LIST_SERVICES: {

uint32_t n = bio_get_uint32(msg);

if (!svc_can_list(txn->sender_pid)) {

ALOGE("list_service() uid=%d - PERMISSION DENIED\n",

txn->sender_euid);

return -1;

}

si = svclist;

while ((n-- > 0) && si)

si = si->next;

if (si) {

bio_put_string16(reply, si->name);

return 0;

}

return -1;

}

default:

ALOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}

Next, follow do_find_service(…), at frameworks/native/cmds/servicemanager/service_manager.c:170

uint32_t do_find_service(struct binder_state *bs, const uint16_t *s, size_t len, uid_t uid, pid_t spid)

{

struct svcinfo *si = find_svc(s, len); // this is the key call

if (!si || !si->handle) {

return 0;

}

if (!si->allow_isolated) {

// If this service doesn't allow access from isolated processes,

// then check the uid to see if it is isolated.

uid_t appid = uid % AID_USER;

if (appid >= AID_ISOLATED_START && appid <= AID_ISOLATED_END) {

return 0;

}

}

if (!svc_can_find(s, len, spid)) {

return 0;

}

return si->handle;

}

Then on to frameworks/native/cmds/servicemanager/service_manager.c:140

struct svcinfo *find_svc(const uint16_t *s16, size_t len)

{

struct svcinfo *si;

for (si = svclist; si; si = si->next) {

if ((len == si->len) &&

!memcmp(s16, si->name, len * sizeof(uint16_t))) {

return si;

}

}

return NULL;

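}

We have finally reached the end of the chain. svclist is a linked list: when ServiceManager receives a checkService request, it walks svclist by service name and returns the matching node. Control then returns toward the origin of the call, find_svc -> do_find_service, where what gets returned is the node's handle member. The node type is defined at frameworks/native/cmds/servicemanager/service_manager.c:128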

struct svcinfo

{

struct svcinfo *next;

uint32_t handle;

struct binder_death death;

int allow_isolated;

size_t len;

uint16_t name[0];

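};

From the type alone, all we know is that handle is an integer. Where does it come from? The server must have registered itself here first. ServiceManager then caches the node's information in the svclist linked list and, when a client comes asking, returns the handle to that client.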
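The register-then-look-up behavior described above can be sketched in a few lines. This is a minimal illustration, not the AOSP code: svc_node, svc_add and svc_find are hypothetical names, the death-notification and allow_isolated fields are left out, and no permission checks are performed.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Simplified node, modeled loosely on svcinfo (hypothetical type). */
struct svc_node {
    struct svc_node *next;
    uint32_t handle;
    size_t len;        /* name length in UTF-16 code units */
    uint16_t name[];   /* flexible array member holding the name */
};

static struct svc_node *svc_list = NULL;

/* Register a name -> handle mapping at the head of the list. */
static int svc_add(const uint16_t *name, size_t len, uint32_t handle)
{
    struct svc_node *n;

    assert(name != NULL);
    n = malloc(sizeof(*n) + (len + 1) * sizeof(uint16_t));
    if (!n)
        return -1;
    n->handle = handle;
    n->len = len;
    memcpy(n->name, name, len * sizeof(uint16_t));
    n->name[len] = 0;  /* keep the stored name zero-terminated */
    n->next = svc_list;
    svc_list = n;
    return 0;
}

/* Linear scan by name; returns 0 when the name is not registered. */
static uint32_t svc_find(const uint16_t *name, size_t len)
{
    struct svc_node *n;

    for (n = svc_list; n; n = n->next) {
        if (n->len == len && !memcmp(name, n->name, len * sizeof(uint16_t)))
            return n->handle;
    }
    return 0;
}
```

A caller would register a service once with svc_add(name, len, handle) and later resolve it with svc_find(name, len). Returning 0 for "not found" mirrors the way do_find_service treats a zero handle as a miss.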

Continuing back toward the origin of the call, from do_find_service to svcmgr_handler

frameworks/native/cmds/servicemanager/service_manager.c:296

handle = do_find_service(bs, s, len, txn->sender_euid, txn->sender_pid);

if (!handle)

break;

bio_put_ref(reply, handle);

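return 0;

Once svcmgr_handler has the handle, it calls bio_put_ref to put it into reply. Control then returns from svcmgr_handler to binder_parse, which calls binder_send_reply to send reply out. With that, ServiceManager has completed its response to one checkService request.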

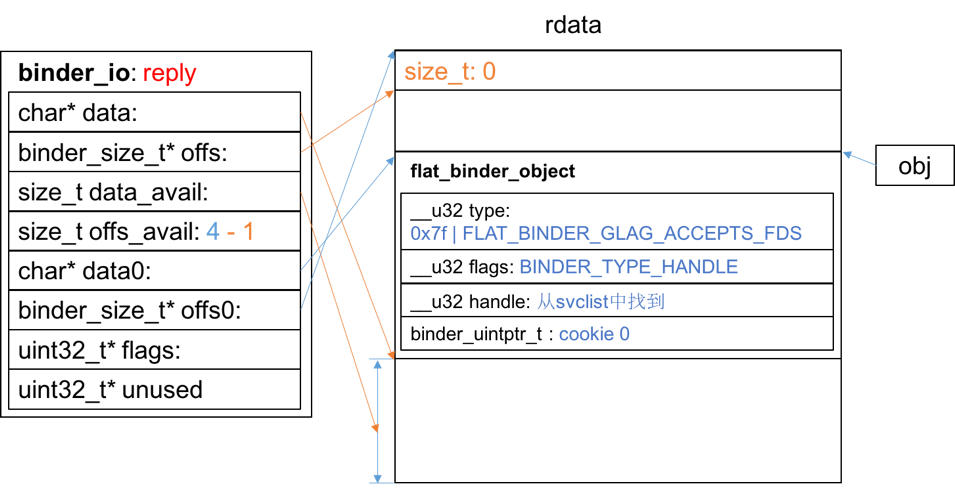
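The path traced so far (a parser that walks a command stream and hands each transaction to a handler through a function pointer) can be sketched as follows. Everything here is a simplified assumption made for illustration: the CMD_* values, struct demo_txn, demo_parse, demo_echo_handler and demo_run are hypothetical and do not correspond to the real BR_* codes or to binder_transaction_data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes; the real BR_* values come from the binder header. */
enum { CMD_NOOP = 1, CMD_TRANSACTION = 2 };

/* Hypothetical fixed-size payload standing in for binder_transaction_data. */
struct demo_txn {
    uint32_t code;
    uint32_t arg;
};

typedef int (*demo_handler)(const struct demo_txn *txn, uint32_t *reply);

/* Read a 32-bit command, then consume its payload, until the buffer ends.
 * Returns the number of transactions handled, or -1 on malformed input. */
static int demo_parse(const unsigned char *ptr, size_t size,
                      demo_handler func, uint32_t *reply)
{
    const unsigned char *end = ptr + size;
    int handled = 0;

    while (ptr < end) {
        uint32_t cmd;

        if ((size_t)(end - ptr) < sizeof(cmd))
            return -1;
        memcpy(&cmd, ptr, sizeof(cmd));  /* memcpy avoids unaligned reads */
        ptr += sizeof(cmd);

        switch (cmd) {
        case CMD_NOOP:
            break;
        case CMD_TRANSACTION: {
            struct demo_txn txn;

            if ((size_t)(end - ptr) < sizeof(txn))
                return -1;               /* truncated payload */
            memcpy(&txn, ptr, sizeof(txn));
            ptr += sizeof(txn);
            if (func && func(&txn, reply) == 0)
                handled++;
            break;
        }
        default:
            return -1;                   /* unknown command */
        }
    }
    return handled;
}

/* Example handler: answers arg + 1 for code 7 and rejects everything else. */
static int demo_echo_handler(const struct demo_txn *txn, uint32_t *reply)
{
    assert(reply != NULL);
    if (txn->code != 7)
        return -1;
    *reply = txn->arg + 1;
    return 0;
}

/* Build a two-command stream (NOOP, then TRANSACTION) and parse it. */
static int demo_run(uint32_t *reply)
{
    unsigned char buf[2 * sizeof(uint32_t) + sizeof(struct demo_txn)];
    struct demo_txn txn = { 7, 41 };
    uint32_t cmd = CMD_NOOP;
    size_t off = 0;

    memcpy(buf + off, &cmd, sizeof(cmd)); off += sizeof(cmd);
    cmd = CMD_TRANSACTION;
    memcpy(buf + off, &cmd, sizeof(cmd)); off += sizeof(cmd);
    memcpy(buf + off, &txn, sizeof(txn)); off += sizeof(txn);
    return demo_parse(buf, off, demo_echo_handler, reply);
}
```

Passing the handler as a parameter keeps the parser independent of any particular service logic, which is the same separation the article observes between binder_parse and svcmgr_handler.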

Some details still need clearing up, though. Go back to the bio_put_ref(…) call in svcmgr_handler and see how it assembles reply, at frameworks/native/cmds/servicemanager/binder.c:505

void bio_put_ref(struct binder_io *bio, uint32_t handle)

{

struct flat_binder_object *obj;

if (handle)

obj = bio_alloc_obj(bio);

else

obj = bio_alloc(bio, sizeof(*obj));

if (!obj)

return;

obj->flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

obj->type = BINDER_TYPE_HANDLE;

obj->handle = handle;

obj->cookie = 0;

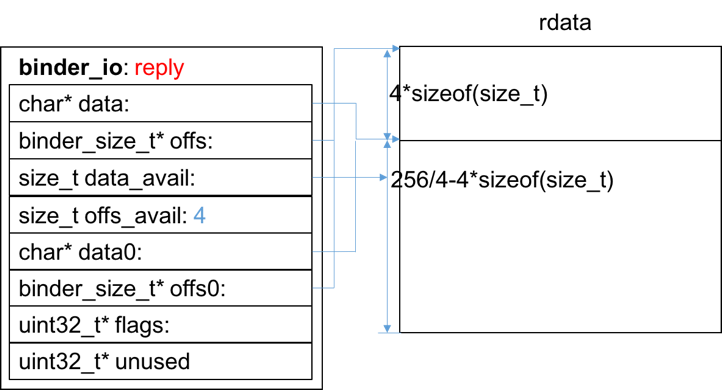

}還記得reply吧?上文在干活之前給它初始化成這樣:

Next, step into bio_alloc_obj(…), at frameworks/native/cmds/servicemanager/binder.c:468

static struct flat_binder_object *bio_alloc_obj(struct binder_io *bio)

{

struct flat_binder_object *obj;

obj = bio_alloc(bio, sizeof(*obj));

if (obj && bio->offs_avail) {

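bio->offs_avail--; // tracks how many slots remain in the offs region

// the offs region is an array of size_t; each element records the offset, relative to data0, of an object in the data region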

*bio->offs++ = ((char*) obj) - ((char*) bio->data0);

return obj;

}

bio->flags |= BIO_F_OVERFLOW;

return NULL;

}

On to bio_alloc(…), at frameworks/native/cmds/servicemanager/binder.c:437

static void *bio_alloc(struct binder_io *bio, size_t size)

{ // size=sizeof(flat_binder_object)

size = (size + 3) & (~3);

if (size > bio->data_avail) { // overflow check

bio->flags |= BIO_F_OVERFLOW;

return NULL;

} else { // the main path: the space is carved out of bio->data

void *ptr = bio->data;

bio->data += size;

bio->data_avail -= size;

return ptr;

}

}

By the time bio_put_ref(…) returns, the data structure it has built looks as follows; the members that were modified are marked in orange:

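binder_io is only an index over the data; the data itself lives in rdata, which is split into two regions: (1) the object offset index region and (2) the data region. The data region holds primitive values such as int and string, as well as composite types such as flat_binder_object. The object offset index region records the offset of each composite object within the data region. binder_io records, for each region of rdata, its start position, the current top (the next free position), and the remaining space.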
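The two-region layout can be sketched as a small bump allocator. This is an illustration under stated assumptions rather than the AOSP implementation: struct demo_io, struct demo_obj and the demo_* functions are hypothetical stand-ins for binder_io, flat_binder_object and the bio_* helpers, and the caller is assumed to supply a buffer aligned for size_t.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for binder_io: a set of cursors into one caller-owned buffer. */
struct demo_io {
    char   *data;        /* next free byte in the data region */
    size_t *offs;        /* next free slot in the offsets region */
    size_t  data_avail;  /* bytes left in the data region */
    size_t  offs_avail;  /* slots left in the offsets region */
    char   *data0;       /* start of the data region */
    size_t *offs0;       /* start of the offsets region */
    int     overflow;    /* set once any allocation fails */
};

/* Hypothetical object type standing in for flat_binder_object. */
struct demo_obj {
    uint32_t type;
    uint32_t handle;
};

/* The first max_offs slots of buf hold offsets; the rest holds data. */
static void demo_io_init(struct demo_io *io, void *buf, size_t size, size_t max_offs)
{
    size_t n = max_offs * sizeof(size_t);

    assert(n <= size);
    io->offs = io->offs0 = (size_t *) buf;
    io->data = io->data0 = (char *) buf + n;
    io->data_avail = size - n;
    io->offs_avail = max_offs;
    io->overflow = 0;
}

/* Carve size bytes, rounded up to a multiple of 4, out of the data region. */
static void *demo_alloc(struct demo_io *io, size_t size)
{
    void *p;

    size = (size + 3) & ~(size_t) 3;
    if (size > io->data_avail) {
        io->overflow = 1;
        return NULL;
    }
    p = io->data;
    io->data += size;
    io->data_avail -= size;
    return p;
}

/* Allocate an object and record its offset from data0 in the offsets region.
 * Unlike the original, the free slot is checked before any data is consumed. */
static struct demo_obj *demo_alloc_obj(struct demo_io *io)
{
    struct demo_obj *obj;

    if (!io->offs_avail) {
        io->overflow = 1;
        return NULL;
    }
    obj = demo_alloc(io, sizeof(*obj));
    if (!obj)
        return NULL;
    io->offs_avail--;
    *io->offs++ = (size_t)((char *) obj - io->data0);
    return obj;
}

/* Append a primitive value; primitives get no entry in the offsets region. */
static void demo_put_uint32(struct demo_io *io, uint32_t v)
{
    uint32_t *p = demo_alloc(io, sizeof(v));

    if (p)
        *p = v;
}
```

After one demo_put_uint32 followed by one demo_alloc_obj, the first offsets slot holds 4, because the object starts right after the 4-byte integer, and the data cursor has advanced by 12 bytes.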

After svcmgr_handler(…) has called bio_put_ref(…) to assemble the reply data, it returns to binder_parse(…), which then calls binder_send_reply(…)

frameworks/native/cmds/servicemanager/binder.c:245

res = func(bs, txn, &msg, &reply);

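binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

The return value res of svcmgr_handler is 0, meaning success, and it is passed to binder_send_reply(…). Also passed in is txn's data.ptr.buffer member, which is the request data sent by the client. Step into the function: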

frameworks/native/cmds/servicemanager/binder.c:170

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{ // status=0

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data));

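}

This assembles the complete response data. Drawn out in full, it looks as follows, and it is quite an elaborate arrangement. Data assembled by the client is shown in blue, and data assembled by ServiceManager in red. The figure makes it clear that reply itself is not copied into the response packet; it serves only as an intermediate buffer.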
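The shape of that response packet can be sketched with a packed struct that places two commands back to back. The names below (DEMO_CMD_*, struct demo_txn_data, struct demo_reply_packet, demo_build_reply) are hypothetical, the field set is reduced to the members discussed above, and the `__attribute__((packed))` syntax assumes GCC or Clang, as in the original listing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command values; the real BC_* codes come from the binder header. */
enum { DEMO_CMD_FREE_BUFFER = 1, DEMO_CMD_REPLY = 2 };

/* Reduced stand-in for binder_transaction_data. */
struct demo_txn_data {
    uint32_t flags;
    uint64_t data_size;     /* bytes used in the data region */
    uint64_t offsets_size;  /* bytes used in the offsets region */
    uint64_t buffer;        /* address of the data region */
    uint64_t offsets;       /* address of the offsets region */
};

/* Two commands laid out back to back with no padding between the members:
 * "free the request buffer", then "reply with this transaction". */
struct demo_reply_packet {
    uint32_t cmd_free;
    uint64_t buffer;
    uint32_t cmd_reply;
    struct demo_txn_data txn;
} __attribute__((packed));

/* Fill the packet. Only the addresses and sizes of the reply regions are
 * stored; the reply bytes themselves are not copied into the packet. */
static void demo_build_reply(struct demo_reply_packet *pkt,
                             uint64_t buffer_to_free,
                             const void *data0, size_t data_len,
                             const void *offs0, size_t offs_len)
{
    assert(pkt != NULL);
    memset(pkt, 0, sizeof(*pkt));
    pkt->cmd_free = DEMO_CMD_FREE_BUFFER;
    pkt->buffer = buffer_to_free;
    pkt->cmd_reply = DEMO_CMD_REPLY;
    pkt->txn.flags = 0;
    pkt->txn.data_size = data_len;
    pkt->txn.offsets_size = offs_len;
    pkt->txn.buffer = (uint64_t)(uintptr_t) data0;
    pkt->txn.offsets = (uint64_t)(uintptr_t) offs0;
}
```

Because the outer struct is packed, the second command word sits at byte offset 12, immediately after the 8-byte buffer address. A parser that reads one 32-bit command and then its payload can therefore consume both commands from a single write.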


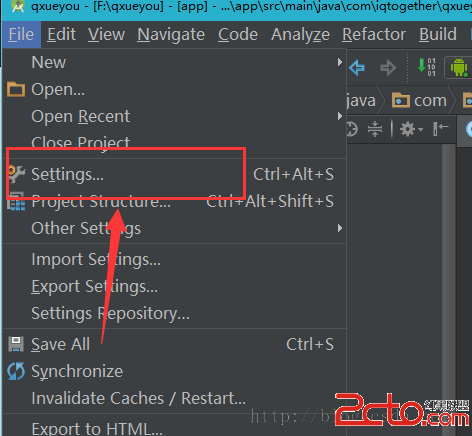
