圖片的內存緩存實現

Image-Loader庫有一個較完整的內存緩存實現,使用者可以根據需要選擇已經實現的策略,也可以定制自己項目中需要的策略。

內存緩存實現代碼在memory和memory.impl這兩個包中,前者就是規范視圖,後者是實現視圖。memory包中有MemoryCacheAware接口和BaseMemoryCache和LimitedMemoryCache兩個抽象類,加上memory.impl包中的WeakMemoryCache類。

MemoryCacheAware

MemoryCacheAware接口以泛型方式定義了實現緩存所需的基礎規約,包括寫緩存、讀緩存、刪除緩存和遍歷緩存等:

復制代碼

/**

* Interface for memory cache

*

* @author Sergey Tarasevich (nostra13[at]gmail[dot]com)

* @since 1.0.0

*/

public interface MemoryCacheAware<K, V> {

/**

* Puts value into cache by key

*

* @return <b>true</b> - if value was put into cache successfully, <b>false</b> - if value was <b>not</b> put into

* cache

*/

boolean put(K key, V value);

/** Returns value by key. If there is no value for key then null will be returned. */

V get(K key);

/** Removes item by key */

void remove(K key);

/** Returns all keys of cache */

Collection<K> keys();

/** Remove all items from cache */

void clear();

}

復制代碼

內存緩存依然使用哈希表來實現:

/** Stores not strong references to objects */

private final Map<K, Reference<V>> softMap = Collections.synchronizedMap(new HashMap<K, Reference<V>>());

BaseMemoryCache implements MemoryCacheAware

復制代碼

/**

* Base memory cache. Implements common functionality for memory cache. Provides object references (

* {@linkplain Reference not strong}) storing.

*

* @author Sergey Tarasevich (nostra13[at]gmail[dot]com)

* @since 1.0.0

*/

public abstract class BaseMemoryCache<K, V> implements MemoryCacheAware<K, V> {

/** Stores not strong references to objects */

private final Map<K, Reference<V>> softMap = Collections.synchronizedMap(new HashMap<K, Reference<V>>());

@Override

public V get(K key) {

V result = null;

Reference<V> reference = softMap.get(key);

if (reference != null) {

result = reference.get();

}

return result;

}

@Override

public boolean put(K key, V value) {

softMap.put(key, createReference(value));

return true;

}

@Override

public void remove(K key) {

softMap.remove(key);

}

@Override

public Collection<K> keys() {

synchronized (softMap) {

return new HashSet<K>(softMap.keySet());

}

}

@Override

public void clear() {

softMap.clear();

}

/** Creates {@linkplain Reference not strong} reference of value */

protected abstract Reference<V> createReference(V value);

}

復制代碼

基本上就是HashMap的操作,由於具體Reference的實現需要看到底用的哪種引用,因此,這裡將createReference定義成抽象函數,讓BaseMemoryCache的子類去定制實現。

WeakMemoryCache extends BaseMemoryCache

BaseMemoryCache的一個最簡單的子類實現是WeakMemoryCache類,它僅僅是實現了createReference抽象方法,返回Bitmap對象的弱引用:

public class WeakMemoryCache extends BaseMemoryCache<String, Bitmap> {

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

}

LimitedMemoryCache extends BaseMemoryCache

BaseMemoryCache的另一個子類是LimitedMemoryCache,它也是抽象類,定義了實現內存緩存限制策略的公共操作。既然要限制緩存大小,那麼首先需要定義默認的緩存最大值,同時需要維護一個表示當前緩存已占用大小的變量,考慮到多線程問題,這個變量值得增減必須保證是原子的,因此,該變量類型選用AtomicInteger。如下所示:

private static final int MAX_NORMAL_CACHE_SIZE_IN_MB = 16;

private static final int MAX_NORMAL_CACHE_SIZE = MAX_NORMAL_CACHE_SIZE_IN_MB * 1024 * 1024;

private final int sizeLimit;

private final AtomicInteger cacheSize;

同時,為了計算已添加到緩存中的數據大小,需要新增一個指向強引用的數據結構來進行記錄,而不是使用BaseMemoryCache中的softMap哈希表,因為softMap中存放的是Reference類,裡面的數據可能會被GC回收,用來統計已占用大小時不准確。指向數據強引用的數據結構選用LinkedList,定義如下,同樣采用線程安全版本。

/**

* Contains strong references to stored objects. Each next object is added last. If hard cache size will exceed

* limit then first object is deleted (but it continue exist at {@link #softMap} and can be collected by GC at any

* time)

*/

private final List<V> hardCache = Collections.synchronizedList(new LinkedList<V>());

往LimitedMemoryCache中添加數據時,首先要計算出當前這個數據的大小(getSize()),然後加上已占用緩存大小後,跟緩存最大值相比較,超過緩存最大值時,就需要根據緩存清理策略(removeNext())刪除以前的一些數據,直到添加數據後,總占用大小在最大值之內為止。上面提到的getSize()函數和removeNext函數需要子類定制,所以定義成了抽象函數。這是典型的模板方法模式的應用,模板方法模式定義一個操作中算法的步驟,而將一些步驟延遲到子類中。模板方法使得子類可以不改變一個算法的結構即可重定義該算法的某些特定步驟。下面的put函數中實現的就是算法的步驟,而getSize()和removeNext()函數則是子類可重定義的特定步驟。相關代碼如下所示:

復制代碼

@Override

public boolean put(K key, V value) {

boolean putSuccessfully = false;

// Try to add value to hard cache

int valueSize = getSize(value);

int sizeLimit = getSizeLimit();

int curCacheSize = cacheSize.get();

if (valueSize < sizeLimit) {

while (curCacheSize + valueSize > sizeLimit) {

V removedValue = removeNext();

if (hardCache.remove(removedValue)) {

curCacheSize = cacheSize.addAndGet(-getSize(removedValue));

}

}

hardCache.add(value);

cacheSize.addAndGet(valueSize);

putSuccessfully = true;

}

// Add value to soft cache

super.put(key, value);

return putSuccessfully;

}

protected abstract int getSize(V value);

protected abstract V removeNext();

復制代碼

FIFOLimitedMemoryCache extends LimitedMemoryCache

FIFO算法意思是在緩存超過最大值時,緩存清理策略是將最老的數據清理掉,因此使用隊列這樣的數據結構就可以很好的實現,在Java中,LinkedList類可以實現這樣的功能。

private final List<Bitmap> queue = Collections.synchronizedList(new LinkedList<Bitmap>());

而父類LimitedMemoryCache中也定義了類似的數據結構hardCache專門用於計算緩存的數據大小,只不過它是私有的,子類不能使用。但這樣就會造成在FIFOLimitedMemoryCache類中存在兩個類似的數據結構,內存占用會變大,事實上,FIFOLimitedMemoryCache中的queue完全可以直接使用父類的hardCache,作者之所以沒有直接使用,應該是考慮到代碼整體類層次結構清晰的問題,畢竟hardCache專門是用於計算緩存大小的,子類實現方式很多種,很多子類並不需要直接使用hardCache,所以單獨一個FIFOLimitedMemoryCache以空間來換取代碼結構的清晰也是可以理解的。寫緩存和清緩存代碼如下:

復制代碼

@Override

public boolean put(String key, Bitmap value) {

if (super.put(key, value)) {

queue.add(value);

return true;

} else {

return false;

}

}

@Override

public void remove(String key) {

Bitmap value = super.get(key);

if (value != null) {

queue.remove(value);

}

super.remove(key);

}

@Override

public void clear() {

queue.clear();

super.clear();

}

復制代碼

前面說到的父類總共有3個抽象函數,需要子類予以實現,代碼如下:

復制代碼

@Override

protected int getSize(Bitmap value) {

return value.getRowBytes() * value.getHeight();

}

@Override

protected Bitmap removeNext() {

return queue.remove(0);

}

@Override

protected Reference<Bitmap> createReference(Bitmap value) {

return new WeakReference<Bitmap>(value);

}

復制代碼

getSize函數中可以看到計算Bitmap占用字節大小的典型方法。removeNext函數中移除隊列queue頭部的數據,這個數據是最先進入隊列的數據。而Bitmap的Reference使用的是弱引用,它和軟引用的區別是,弱引用對象擁有更短暫的生命周期,在垃圾回收器線程掃描它所管轄的內存區域過程中,只要發現只具有弱引用的對象,那麼不管當前內存空間是否足夠,都會回收弱引用對象占用的內存。這樣可以更好的防止出現OutOfMemoryError錯誤。

LargestLimitedMemoryCache extends LimitedMemoryCache

當緩存超出最大值限制時,清理策略是將占用空間最大的數據清理掉。因此,需要一個維護Bitmap對象到它占用大小的映射,這裡使用的還是HashMap:

/**

* Contains strong references to stored objects (keys) and last object usage date (in milliseconds). If hard cache

* size will exceed limit then object with the least frequently usage is deleted (but it continue exist at

* {@link #softMap} and can be collected by GC at any time)

*/

private final Map<Bitmap, Integer> valueSizes = Collections.synchronizedMap(new HashMap<Bitmap, Integer>());

同理,關鍵代碼還是在父類幾個抽象函數的實現,其中getSize()和createReference()函數實現和FIFOLimitedMemoryCache類一樣,removeNext函數實現如下:

復制代碼

@Override

protected Bitmap removeNext() {

Integer maxSize = null;

Bitmap largestValue = null;

Set<Entry<Bitmap, Integer>> entries = valueSizes.entrySet();

synchronized (valueSizes) {

for (Entry<Bitmap, Integer> entry : entries) {

if (largestValue == null) {

largestValue = entry.getKey();

maxSize = entry.getValue();

} else {

Integer size = entry.getValue();

if (size > maxSize) {

maxSize = size;

largestValue = entry.getKey();

}

}

}

}

valueSizes.remove(largestValue);

return largestValue;

}

復制代碼

基本原理是遍歷valueSizes這個哈希表,比較並得到占用空間最大的Bitmap對象,然後刪除它即可。這裡要注意的一點是遍歷時要加入synchronized同步機制。

LRULimitedMemoryCache extends LimitedMemoryCache

LRU即Least Recently Used,緩存清理策略是將最近最少使用的Bitmap對象刪除掉。按Java中剛好有這樣一個數據結構可以實現這個策略,它就是LinkedHashMap類。

private static final int INITIAL_CAPACITY = 10;

private static final float LOAD_FACTOR = 1.1f;

/** Cache providing Least-Recently-Used logic */

private final Map<String, Bitmap> lruCache = Collections.synchronizedMap(new LinkedHashMap<String, Bitmap>(INITIAL_CAPACITY, LOAD_FACTOR, true));

LinkedHashMap是HashMap的子類,注意它的構造函數第三個參數accessOrder,它定義了LinkedHashMap的排序模式,accessOrder為true時,表示LinkedHashMap中數據排序按照訪問的順序,當為false時,表示數據排序按照數據插入的順序。而我們要實現LRU,就需要把accessOrder設置為true,同時,在讀緩存時不能像FIFOLimitedMemoryCache類和LargestLimitedMemoryCache類一樣使用父類BaseMemoryCache的get方法,而是應該重寫該方法如下所示:

@Override

public Bitmap get(String key) {

lruCache.get(key); // call "get" for LRU logic

return super.get(key);

}

當accessOrder設置為true時,LinkedHashMap就維護了記錄的訪問順序,這時使用Iterator 遍歷LinkedHashMap時,先得到的記錄肯定就是最近最不常使用的那個記錄了,LRU算法水到渠成:

復制代碼

@Override

protected Bitmap removeNext() {

Bitmap mostLongUsedValue = null;

synchronized (lruCache) {

Iterator<Entry<String, Bitmap>> it = lruCache.entrySet().iterator();

if (it.hasNext()) {

Entry<String, Bitmap> entry = it.next();

mostLongUsedValue = entry.getValue();

it.remove();

}

}

return mostLongUsedValue;

}

復制代碼

UsingFreqLimitedMemoryCache extends LimitedMemoryCache

和LargestLimitedMemoryCache類實現類似,只不過一個是將占用空間最大的記錄剔除,一個是將訪問次數最少的記錄剔除,所用數據結構自然類似:

/**

* Contains strong references to stored objects (keys) and last object usage date (in milliseconds). If hard cache

* size will exceed limit then object with the least frequently usage is deleted (but it continue exist at

* {@link #softMap} and can be collected by GC at any time)

*/

private final Map<Bitmap, Integer> usingCounts = Collections.synchronizedMap(new HashMap<Bitmap, Integer>());

因為要記錄訪問次數,所以需要重寫父類的get方法,每訪問一次,就增加計數值:

復制代碼

@Override

public Bitmap get(String key) {

Bitmap value = super.get(key);

// Increment usage count for value if value is contained in hardCahe

if (value != null) {

Integer usageCount = usingCounts.get(value);

if (usageCount != null) {

usingCounts.put(value, usageCount + 1);

}

}

return value;

}

復制代碼

removeNext函數實現原理是遍歷usingCounts哈希表中的記錄,比較它們的訪問次數,並選取訪問次數最少的一個刪除之,代碼如下所示,同LargestLimitedMemoryCache類,要注意使用synchronized關鍵字同步保護。

復制代碼

@Override

protected Bitmap removeNext() {

Integer minUsageCount = null;

Bitmap leastUsedValue = null;

Set<Entry<Bitmap, Integer>> entries = usingCounts.entrySet();

synchronized (usingCounts) {

for (Entry<Bitmap, Integer> entry : entries) {

if (leastUsedValue == null) {

leastUsedValue = entry.getKey();

minUsageCount = entry.getValue();

} else {

Integer lastValueUsage = entry.getValue();

if (lastValueUsage < minUsageCount) {

minUsageCount = lastValueUsage;

leastUsedValue = entry.getKey();

}

}

}

}

usingCounts.remove(leastUsedValue);

return leastUsedValue;

}

復制代碼

FuzzyKeyMemoryCache implements MemoryCacheAware

在之前實現內存緩存的映射時,是一個key對應一個value,而這個裝飾者類提供的額外功能是:允許多個key對應同一個value,後面插入的key-value對會覆蓋以前存在的key-value對。這個類主要用於Image-Loader庫內部使用。在FuzzyKeyMemoryCache類的實現中,使用Comparator類實現多個key是否相等的判斷,核心代碼在put函數中:

復制代碼

/**

* Decorator for {@link MemoryCacheAware}. Provides special feature for cache: some different keys are considered as

* equals (using {@link Comparator comparator}). And when you try to put some value into cache by key so entries with

* "equals" keys will be removed from cache before.<br />

* <b>NOTE:</b> Used for internal needs. Normally you don't need to use this class.

*

* @author Sergey Tarasevich (nostra13[at]gmail[dot]com)

* @since 1.0.0

*/

public class FuzzyKeyMemoryCache<K, V> implements MemoryCacheAware<K, V> {

private final MemoryCacheAware<K, V> cache;

private final Comparator<K> keyComparator;

public FuzzyKeyMemoryCache(MemoryCacheAware<K, V> cache, Comparator<K> keyComparator) {

this.cache = cache;

this.keyComparator = keyComparator;

}

@Override

public boolean put(K key, V value) {

// Search equal key and remove this entry

synchronized (cache) {

K keyToRemove = null;

for (K cacheKey : cache.keys()) {

if (keyComparator.compare(key, cacheKey) == 0) {

keyToRemove = cacheKey;

break;

}

}

if (keyToRemove != null) {

cache.remove(keyToRemove);

}

}

return cache.put(key, value);

}

@Override

public V get(K key) {

return cache.get(key);

}

@Override

public void remove(K key) {

cache.remove(key);

}

@Override

public void clear() {

cache.clear();

}

@Override

public Collection<K> keys() {

return cache.keys();

}

}

復制代碼

LimitedAgeMemoryCache implements MemoryCacheAware

在前面介紹過的MemoryCacheAware接口實現類中,有時可能需要添加這樣一個額外的功能:當緩存的對象存在超過一定時間時,將其清理掉,LimitedAgeMemoryCache這個裝飾者類就是實現這樣一個功能。首先,實現一個緩存對象key到已存活時間的映射表,在獲取緩存對象時,判斷該對象是否超過最大存活時間,如果是則將其移除。代碼如下所示:

復制代碼

/**

* Decorator for {@link MemoryCacheAware}. Provides special feature for cache: if some cached object age exceeds defined

* value then this object will be removed from cache.

*

* @author Sergey Tarasevich (nostra13[at]gmail[dot]com)

* @see MemoryCacheAware

* @since 1.3.1

*/

public class LimitedAgeMemoryCache<K, V> implements MemoryCacheAware<K, V> {

private final MemoryCacheAware<K, V> cache;

private final long maxAge;

private final Map<K, Long> loadingDates = Collections.synchronizedMap(new HashMap<K, Long>());

/**

* @param cache Wrapped memory cache

* @param maxAge Max object age <b>(in seconds)</b>. If object age will exceed this value then it'll be removed from

* cache on next treatment (and therefore be reloaded).

*/

public LimitedAgeMemoryCache(MemoryCacheAware<K, V> cache, long maxAge) {

this.cache = cache;

this.maxAge = maxAge * 1000; // to milliseconds

}

@Override

public boolean put(K key, V value) {

boolean putSuccesfully = cache.put(key, value);

if (putSuccesfully) {

loadingDates.put(key, System.currentTimeMillis());

}

return putSuccesfully;

}

@Override

public V get(K key) {

Long loadingDate = loadingDates.get(key);

if (loadingDate != null && System.currentTimeMillis() - loadingDate > maxAge) {

cache.remove(key);

loadingDates.remove(key);

}

return cache.get(key);

}

@Override

public void remove(K key) {

cache.remove(key);

loadingDates.remove(key);

}

@Override

public Collection<K> keys() {

return cache.keys();

}

@Override

public void clear() {

cache.clear();

loadingDates.clear();

}

}

Android屏幕適配規則

Android屏幕適配規則

Android自定義View實現打字機效果

Android自定義View實現打字機效果

Android 解決使用SearchView時軟鍵盤不支持actionSearch的問題

Android 解決使用SearchView時軟鍵盤不支持actionSearch的問題

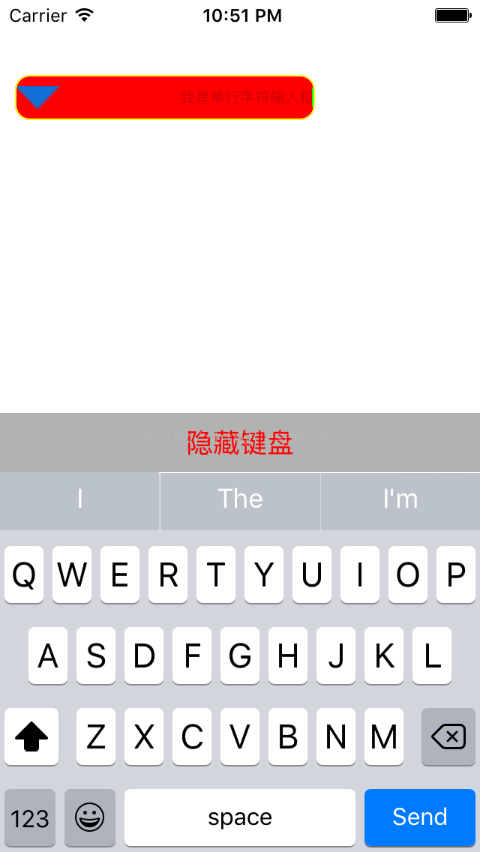

UITextField的使用

UITextField的使用