
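After the previous post the program basically runs, but debugging turned up quite a few problems. This post works through them one at a time.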

1. Changing the decoded width and height in JNI

Change the Decoding interface to the following:

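```c
/* Decodes one packet and scales the result into pDeData as RGB565 at dwidth x dheight.
 * pCodecCtx, pFrame, picture, swsctx and avpkt are the globals set up by DecodeInit
 * in the previous post (picture and swsctx start out NULL). */
JNIEXPORT jint JNICALL Java_com_dao_iclient_FfmpegIF_Decoding(JNIEnv *env, jclass obj,
        const jbyteArray pSrcData, const jint DataLen,
        const jbyteArray pDeData, const jint dwidth, const jint dheight)
{
    int frameFinished = 0;
    int consumed_bytes;
    jbyte *Buf = (*env)->GetByteArrayElements(env, pSrcData, NULL);
    jbyte *Pixel = (*env)->GetByteArrayElements(env, pDeData, NULL);

    avpkt.data = (uint8_t *)Buf;
    avpkt.size = DataLen;
    consumed_bytes = avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &avpkt);

    if (consumed_bytes >= 0 && frameFinished) {
        /* Allocate the output frame once instead of leaking a new one per frame. */
        if (picture == NULL)
            picture = avcodec_alloc_frame();
        /* Point picture's planes at the Java output array (RGB565, 2 bytes per pixel). */
        avpicture_fill((AVPicture *)picture, (uint8_t *)Pixel, PIX_FMT_RGB565, dwidth, dheight);
        /* Reuse the scaler; sws_getCachedContext only rebuilds it when the parameters change. */
        swsctx = sws_getCachedContext(swsctx,
                pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                dwidth, dheight, PIX_FMT_RGB565, SWS_BICUBIC, NULL, NULL, NULL);
        sws_scale(swsctx, (const uint8_t * const *)pFrame->data, pFrame->linesize,
                0, pCodecCtx->height, picture->data, picture->linesize);
    }

    /* The input was only read, so JNI_ABORT skips copying it back into the Java array. */
    (*env)->ReleaseByteArrayElements(env, pSrcData, Buf, JNI_ABORT);
    /* Mode 0 copies the decoded pixels back to the Java array and frees the native copy. */
    (*env)->ReleaseByteArrayElements(env, pDeData, Pixel, 0);
    return consumed_bytes;
}
```

Then update the Decoding declaration in FfmpegIF: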

```java
public static native int Decoding(byte[] in, int datalen, byte[] out, int dwidth, int dheight);
```
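Decoding() writes straight into the caller's out array, so that array must hold dwidth × dheight pixels at 2 bytes each, which is why the activity allocates dwidth * dheight * 2 bytes. The sketch below shows that size calculation and how one RGB565 pixel packs 5 bits of red, 6 of green and 5 of blue into 16 bits. It assumes 8-bit RGB input and a little-endian device (typical for Android ARM hardware), where both FFmpeg's native-endian PIX_FMT_RGB565 and Bitmap's Config.RGB_565 store the low byte first; the Rgb565 class and its methods are hypothetical.

```java
// Hypothetical helper illustrating the RGB565 output buffer filled by Decoding().
final class Rgb565 {
    private Rgb565() {}

    // Bytes needed for a width x height RGB565 image: 2 bytes per pixel.
    static int requiredBytes(int width, int height) {
        if (width <= 0 || height <= 0) throw new IllegalArgumentException("bad size");
        return Math.multiplyExact(Math.multiplyExact(width, height), 2);
    }

    // Packs 8-bit r, g, b into 16 bits laid out as rrrrrggg gggbbbbb.
    static int pack(int r, int g, int b) {
        return ((r & 0xf8) << 8) | ((g & 0xfc) << 3) | ((b & 0xff) >> 3);
    }

    // Stores one pixel at (x, y), low byte first as on a little-endian device.
    static void putPixel(byte[] out, int width, int x, int y, int rgb565) {
        int i = (y * width + x) * 2;
        out[i] = (byte) (rgb565 & 0xff);
        out[i + 1] = (byte) ((rgb565 >> 8) & 0xff);
    }
}
```

For a 1280x720 screen, requiredBytes returns 1843200, the same value as dwidth * dheight * 2 in onCreate.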

The full IcoolClient.java after these changes:

```java
package com.dao.iclient;

import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.ByteBuffer;

import android.app.Activity;
import android.graphics.Bitmap;
import android.graphics.Bitmap.Config;
import android.os.Bundle;
import android.os.Handler;
import android.util.DisplayMetrics;
import android.view.Menu;
import android.view.Window;
import android.view.WindowManager;
import android.widget.ImageView;

public class IcoolClient extends Activity {

    private Socket socket;
    private ByteBuffer buffer;      // outgoing header
    private ByteBuffer Imagbuf;     // wraps mout for copyPixelsFromBuffer

    // net package header fields
    private static short type = 0;
    private static int packageLen = 0;
    private static int sendDeviceID = 0;
    private static int revceiveDeviceID = 0;
    private static short sendDeviceType = 0;
    private static int dataIndex = 0;
    private static int dataLen = 0;
    private static int frameNum = 0;
    private static int commType = 0;

    // header size in bytes: 7 ints + 2 shorts = 32
    private static int packagesize;

    private OutputStream outputStream = null;
    private InputStream inputStream = null;
    private Bitmap VideoBit;
    private ImageView mImag;
    private byte[] mout;
    private Handler mHandler;
    private int swidth = 0;    // source image width
    private int sheight = 0;   // source image height
    private int dwidth = 0;    // target width = screen width
    private int dheight = 0;   // target height = screen height

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        requestWindowFeature(Window.FEATURE_NO_TITLE);
        getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,
                WindowManager.LayoutParams.FLAG_FULLSCREEN);
        setContentView(R.layout.activity_icool_client);
        mImag = (ImageView) findViewById(R.id.mimg);

        packagesize = 7 * 4 + 2 * 2;
        buffer = ByteBuffer.allocate(packagesize);

        swidth = 640;
        sheight = 480;
        FfmpegIF.DecodeInit(swidth, sheight);

        // Scale the decoded picture to the full screen.
        DisplayMetrics metric = new DisplayMetrics();
        getWindowManager().getDefaultDisplay().getMetrics(metric);
        dwidth = metric.widthPixels;
        dheight = metric.heightPixels;

        VideoBit = Bitmap.createBitmap(dwidth, dheight, Config.RGB_565);
        mout = new byte[dwidth * dheight * 2];   // RGB565: 2 bytes per pixel
        Imagbuf = ByteBuffer.wrap(mout);
        mHandler = new Handler();
        new StartThread().start();
    }

    final Runnable mUpdateUI = new Runnable() {
        @Override
        public void run() {
            VideoBit.copyPixelsFromBuffer(Imagbuf);
            mImag.setImageBitmap(VideoBit);
            // copyPixelsFromBuffer advanced the position; reset it for the next frame.
            Imagbuf.clear();
        }
    };

    class StartThread extends Thread {
        @Override
        public void run() {
            try {
                socket = new Socket("192.168.1.21", 8888);
                SendCom(FfmpegIF.VIDEO_COM_STOP);
                SendCom(FfmpegIF.VIDEO_COM_START);
                inputStream = socket.getInputStream();
                byte[] Rbuffer = new byte[packagesize];
                while (true) {
                    // Read the complete header, then the payload it announces.
                    readFully(inputStream, Rbuffer, packagesize);
                    int datasize = getDataL(Rbuffer);
                    if (datasize > 0) {
                        byte[] Data = new byte[datasize];
                        readFully(inputStream, Data, datasize);
                        FfmpegIF.Decoding(Data, datasize, mout, dwidth, dheight);
                        mHandler.post(mUpdateUI);
                        SendCom(FfmpegIF.VIDEO_COM_ACK);
                    }
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    // read() may return fewer bytes than requested, so loop until len bytes have
    // arrived; read() returns -1 once the server closes the connection.
    private static void readFully(InputStream in, byte[] b, int len) throws IOException {
        int readsize = 0;
        while (readsize < len) {
            int size = in.read(b, readsize, len - readsize);
            if (size < 0)
                throw new EOFException("connection closed after " + readsize + " of " + len + " bytes");
            readsize += size;
        }
    }

    public void SendCom(int comtype) {
        try {
            outputStream = socket.getOutputStream();
            type = FfmpegIF.TYPE_MODE_COM;
            packageLen = packagesize;
            commType = comtype;
            putbuffer();
            outputStream.write(buffer.array());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Packs the header fields little-endian into buffer.
    public void putbuffer() {
        buffer.clear();
        buffer.put(ShorttoByteArray(type));
        buffer.put(InttoByteArray(packageLen));
        buffer.put(InttoByteArray(sendDeviceID));
        buffer.put(InttoByteArray(revceiveDeviceID));
        buffer.put(ShorttoByteArray(sendDeviceType));
        buffer.put(InttoByteArray(dataIndex));
        buffer.put(InttoByteArray(dataLen));
        buffer.put(InttoByteArray(frameNum));
        buffer.put(InttoByteArray(commType));
    }

    private static byte[] ShorttoByteArray(short n) {
        byte[] b = new byte[2];
        b[0] = (byte) (n & 0xff);
        b[1] = (byte) (n >> 8 & 0xff);
        return b;
    }

    private static byte[] InttoByteArray(int n) {
        byte[] b = new byte[4];
        b[0] = (byte) (n & 0xff);
        b[1] = (byte) (n >> 8 & 0xff);
        b[2] = (byte) (n >> 16 & 0xff);
        b[3] = (byte) (n >> 24 & 0xff);
        return b;
    }

    // Every byte is masked with 0xff: Java bytes are signed and would otherwise sign-extend.
    public short getType(byte[] tpbuffer) {
        return (short) ((tpbuffer[0] & 0xff) | ((tpbuffer[1] & 0xff) << 8));
    }

    public int getPakL(byte[] pkbuffer) {
        return (pkbuffer[2] & 0xff) | ((pkbuffer[3] & 0xff) << 8)
                | ((pkbuffer[4] & 0xff) << 16) | ((pkbuffer[5] & 0xff) << 24);
    }

    public int getDataL(byte[] getbuffer) {
        return (getbuffer[20] & 0xff) | ((getbuffer[21] & 0xff) << 8)
                | ((getbuffer[22] & 0xff) << 16) | ((getbuffer[23] & 0xff) << 24);
    }

    public int getFrameN(byte[] getbuffer) {
        return (getbuffer[24] & 0xff) | ((getbuffer[25] & 0xff) << 8)
                | ((getbuffer[26] & 0xff) << 16) | ((getbuffer[27] & 0xff) << 24);
    }

    /*
    private void byte2hex(byte[] buffer) {
        String h = "";
        for (int i = 0; i < buffer.length; i++) {
            String temp = Integer.toHexString(buffer[i] & 0xFF);
            if (temp.length() == 1) {
                temp = "0" + temp;
            }
            h = h + " " + temp;
        }
        System.out.println(h);
    }
    */

    /*
    @Override
    protected void onResume() {
        if (getRequestedOrientation() != ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE)
            setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE);
        super.onResume();
    }
    */

    @Override
    public boolean onCreateOptionsMenu(Menu menu) {
        // Inflate the menu; this adds items to the action bar if it is present.
        getMenuInflater().inflate(R.menu.icool_client, menu);
        return true;
    }
}
```
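putbuffer() and the get* helpers treat the header as 32 little-endian bytes (7 ints plus 2 shorts, hence packagesize = 7 * 4 + 2 * 2), with dataLen at offset 20 and frameNum at offset 24. The same layout can be expressed with a ByteBuffer in ByteOrder.LITTLE_ENDIAN mode, which also sidesteps the sign-extension problem of combining signed Java bytes without & 0xff. A sketch, assuming the field order in putbuffer() is the server's wire format; the Header class is hypothetical and keeps the original revceiveDeviceID spelling:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical codec for the 32-byte header written by putbuffer():
// short type, int packageLen, int sendDeviceID, int revceiveDeviceID,
// short sendDeviceType, int dataIndex, int dataLen, int frameNum, int commType.
final class Header {
    static final int SIZE = 7 * 4 + 2 * 2;   // 32 bytes

    short type;
    int packageLen, sendDeviceID, revceiveDeviceID;
    short sendDeviceType;
    int dataIndex, dataLen, frameNum, commType;

    // Serializes the fields in putbuffer() order, least significant byte first.
    byte[] encode() {
        ByteBuffer b = ByteBuffer.allocate(SIZE).order(ByteOrder.LITTLE_ENDIAN);
        b.putShort(type).putInt(packageLen).putInt(sendDeviceID).putInt(revceiveDeviceID)
         .putShort(sendDeviceType).putInt(dataIndex).putInt(dataLen)
         .putInt(frameNum).putInt(commType);
        return b.array();
    }

    // Parses the first SIZE bytes of raw; getShort/getInt handle signedness correctly.
    static Header decode(byte[] raw) {
        ByteBuffer b = ByteBuffer.wrap(raw, 0, SIZE).order(ByteOrder.LITTLE_ENDIAN);
        Header h = new Header();
        h.type = b.getShort();
        h.packageLen = b.getInt();
        h.sendDeviceID = b.getInt();
        h.revceiveDeviceID = b.getInt();
        h.sendDeviceType = b.getShort();
        h.dataIndex = b.getInt();
        h.dataLen = b.getInt();
        h.frameNum = b.getInt();
        h.commType = b.getInt();
        return h;
    }
}
```

Setting dataLen to 0x12345678 puts 0x78 at byte 20 and 0x12 at byte 23, which is exactly what getDataL() reads back.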

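Added the source image width and height (swidth, sheight) and set the target image width and height to the screen's width and height. AndroidManifest.xml was also changed so the app displays in landscape.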

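Add the following attribute to the `<activity>` element:

`android:screenOrientation="landscape"`

In mUpdateUI, `Imagbuf.clear();` was added to fix the Imagbuf overflow: copyPixelsFromBuffer() advances the buffer's position by the number of bytes it copies, so without resetting it the next frame finds no pixels left to copy.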
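The effect can be reproduced without Android. In the sketch below, ByteBuffer.get() stands in for Bitmap.copyPixelsFromBuffer() (an assumption made so it runs on a plain JVM): both read from the current position and move it forward, so a second frame only succeeds if the buffer is reset in between. The RewindDemo class is hypothetical.

```java
import java.nio.ByteBuffer;

// Simulates mUpdateUI: consume() stands in for Bitmap.copyPixelsFromBuffer,
// which reads frameBytes from the current position and advances it.
final class RewindDemo {
    static boolean consume(ByteBuffer buf, int frameBytes) {
        if (buf.remaining() < frameBytes) return false;  // copyPixelsFromBuffer would throw here
        byte[] pixels = new byte[frameBytes];
        buf.get(pixels);                                 // advances position by frameBytes
        return true;
    }

    // Returns how many of `frames` consecutive copies succeed.
    static int run(int frames, boolean clearAfterEach) {
        int frameBytes = 4 * 2 * 2;                      // a tiny 4x2 RGB565 frame
        ByteBuffer imagbuf = ByteBuffer.wrap(new byte[frameBytes]);
        int ok = 0;
        for (int i = 0; i < frames; i++) {
            if (consume(imagbuf, frameBytes)) ok++;
            if (clearAfterEach) imagbuf.clear();         // position = 0, limit = capacity
        }
        return ok;
    }
}
```

Without the reset only the first of three frames is copied; with it all three are. Since the limit never changes here, rewind() would work just as well as clear().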

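Reworked the InputStream.read flow in StartThread: a single read() on a socket may return fewer bytes than requested, so the data is now read in a loop until the whole packet has arrived.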
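The JDK already provides this loop: java.io.DataInputStream.readFully() blocks until the array is full and throws EOFException if the stream ends first (read() itself signals end of stream by returning -1). A sketch of reading one packet this way, assuming the 32-byte header with dataLen at offset 20; TrickleInputStream and ReadFullyDemo are hypothetical, the former handing out at most 3 bytes per call to mimic a fragmented TCP stream:

```java
import java.io.DataInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;

// Hypothetical stream that returns at most 3 bytes per read(), like a fragmented socket.
final class TrickleInputStream extends FilterInputStream {
    TrickleInputStream(InputStream in) { super(in); }

    @Override
    public int read(byte[] b, int off, int len) throws IOException {
        return super.read(b, off, Math.min(len, 3));
    }
}

final class ReadFullyDemo {
    // Reads one 32-byte header, then the dataLen-byte payload it announces.
    static byte[] readPacket(InputStream raw) throws IOException {
        DataInputStream in = new DataInputStream(raw);
        byte[] header = new byte[32];
        in.readFully(header);              // loops internally until all 32 bytes arrive
        int dataLen = (header[20] & 0xff) | ((header[21] & 0xff) << 8)
                | ((header[22] & 0xff) << 16) | ((header[23] & 0xff) << 24);
        byte[] payload = new byte[dataLen];
        in.readFully(payload);             // throws EOFException if the stream ends early
        return payload;
    }
}
```

Even though every read() returns at most 3 bytes, readPacket() still returns the complete payload; a stream cut off mid-payload surfaces as EOFException instead of a silently short buffer.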

============================================

Author: hclydao

http://blog.csdn.net/hclydao

No copyright reserved, but please keep this notice when reposting.

============================================

Android官方文檔之App Components(Fragments)

Android官方文檔之App Components(Fragments)

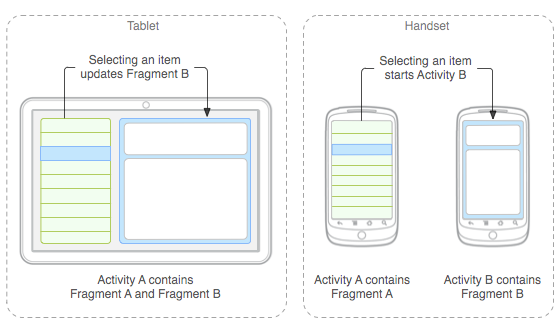

Fragment是Android API中的一個類,它代表Activity中的一部分界面;您可以在一個Activity界面中使用多個Fragment,或者在多個Activ

Android利用WindowManager生成懸浮按鈕及懸浮菜單

Android利用WindowManager生成懸浮按鈕及懸浮菜單

簡介本文模仿實現的是360手機衛士基礎效果,同時後續會補充一些WindowManager的原理知識。 整體思路360手機衛士的內存球其實就是一個沒有畫面的應用程序,整個應

android中圖片翻頁效果簡單的實現方法

android中圖片翻頁效果簡單的實現方法

復制代碼 代碼如下:public class PageWidget extends View { private Bitmap for

Android自定義View實現通訊錄字母索引(仿微信通訊錄)

Android自定義View實現通訊錄字母索引(仿微信通訊錄)

一、效果:我們看到很多軟件的通訊錄在右側都有一個字母索引功能,像微信,小米通訊錄,QQ,還有美團選擇地區等等。這裡我截了一張美團選擇城市的圖片來看看;我們今天就來實現圖片