Editor: About Android Programming

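In the previous chapter, "Android Local Video Player Development: Compiling SDL", we built the SDL support library. At that time we used SDL 2.0, but some of its APIs had changed, so from here on we use the SDL 1.3 library instead. I will upload both its source code and the compiled library. In this chapter we use ffmpeg to decode the video frames in a video file and SDL to display them.

1. Decodec_Video.c is my video decoding file. Its contents are as follows: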

[cpp]

#include <stdio.h>
#include <stdlib.h> /* exit() */
#include <android/log.h>

#ifdef __MINGW32__
#undef main /* Prevents SDL from overriding main() */
#endif

#include "../SDL/include/SDL.h"
#include "../SDL/include/SDL_thread.h"
#include "VideoPlayerDecode.h"
#include "../ffmpeg/libavutil/avutil.h"
#include "../ffmpeg/libavcodec/avcodec.h"
#include "../ffmpeg/libavformat/avformat.h"
#include "../ffmpeg/libswscale/swscale.h"

AVFormatContext *pFormatCtx;
int i, videoStream;
AVCodecContext *pCodecCtx;
AVCodec *pCodec;
AVFrame *pFrame;
AVPacket packet;
int frameFinished;
float aspect_ratio;
static struct SwsContext *img_convert_ctx;
SDL_Surface *screen;
SDL_Overlay *bmp;
SDL_Rect rect;
SDL_Event event;

JNIEXPORT jint JNICALL Java_com_zhangjie_graduation_videopalyer_jni_VideoPlayerDecode_VideoPlayer
(JNIEnv *env, jclass clz, jstring fileName)
{
    const char* local_title = (*env)->GetStringUTFChars(env, fileName, NULL);
    if(local_title == NULL)
        return -1;

    av_register_all(); // Register all supported file formats and codecs

    if(SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {
        fprintf(stderr, "Could not initialize SDL - %s\n", SDL_GetError());
        exit(1);
    }

    // avformat_open_input expects NULL or a context it can reuse
    pFormatCtx = NULL;
    if(avformat_open_input(&pFormatCtx, local_title, NULL, NULL) != 0)
        return -1;
    if(avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1;
    av_dump_format(pFormatCtx, -1, local_title, 0);

    // Find the first video stream
    videoStream=-1;
    for(i=0; i<pFormatCtx->nb_streams; i++)
        if(pFormatCtx->streams[i]->codec->codec_type==AVMEDIA_TYPE_VIDEO) {
            videoStream=i;
            break;
        }
    if(videoStream==-1)
        return -1; // Didn't find a video stream

    // Get a pointer to the codec context for the video stream
    pCodecCtx=pFormatCtx->streams[videoStream]->codec;

    // Find the decoder for the video stream
    pCodec=avcodec_find_decoder(pCodecCtx->codec_id);
    if(pCodec==NULL) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1; // Codec not found
    }
    if(avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
        return -1;

    pFrame = avcodec_alloc_frame();
    if(pFrame == NULL)
        return -1;

    // Make a screen to put our video
#ifndef __DARWIN__
    screen = SDL_SetVideoMode(pCodecCtx->width, pCodecCtx->height, 0, 0);
#else
    screen = SDL_SetVideoMode(pCodecCtx->width, pCodecCtx->height, 24, 0);
#endif
    if(!screen) {
        fprintf(stderr, "SDL: could not set video mode - exiting\n");
        exit(1);
    }

    // Allocate a place to put our YUV image on that screen
    bmp = SDL_CreateYUVOverlay(pCodecCtx->width,
                               pCodecCtx->height,
                               SDL_YV12_OVERLAY,
                               screen);

    // Convert decoded frames to YUV420P, the planar layout the YV12
    // overlay expects (RGB24 output would not fit the overlay's planes)
    img_convert_ctx = sws_getContext(pCodecCtx->width,
                                     pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height,
                                     PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);

    // Read packets, decode the video frames, and display each one
    i=0;
    while(av_read_frame(pFormatCtx, &packet)>=0) {
        // Is this a packet from the video stream?
        if(packet.stream_index==videoStream) {
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

            // Did we get a video frame?
            if(frameFinished) {
                SDL_LockYUVOverlay(bmp);

                // AVPicture on the stack whose planes point into the overlay.
                // YV12 stores V before U, so planes 1 and 2 are swapped.
                AVPicture pict;
                pict.data[0] = bmp->pixels[0];
                pict.data[1] = bmp->pixels[2];
                pict.data[2] = bmp->pixels[1];
                pict.linesize[0] = bmp->pitches[0];
                pict.linesize[1] = bmp->pitches[2];
                pict.linesize[2] = bmp->pitches[1];

                sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0, pCodecCtx->height, pict.data, pict.linesize);

                SDL_UnlockYUVOverlay(bmp);

                rect.x = 0;
                rect.y = 0;
                rect.w = pCodecCtx->width;
                rect.h = pCodecCtx->height;
                SDL_DisplayYUVOverlay(bmp, &rect);
            }
        }

        // Free the packet that was allocated by av_read_frame
        av_free_packet(&packet);

        SDL_PollEvent(&event);
        switch(event.type) {
        case SDL_QUIT:
            SDL_Quit();
            exit(0);
            break;
        default:
            break;
        }
    }

    // Free the YUV frame
    av_free(pFrame);

    // Close the codec
    avcodec_close(pCodecCtx);

    // Close the video file (deprecated in this FFmpeg version, as the build
    // log warns; avformat_close_input(&pFormatCtx) is the replacement)
    av_close_input_file(pFormatCtx);

    // Release the JNI string and report success
    (*env)->ReleaseStringUTFChars(env, fileName, local_title);
    return 0;
}
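
The decode loop points plane 1 of the picture at `bmp->pixels[2]` and plane 2 at `bmp->pixels[1]`. That swap exists because YV12 stores the V plane before the U plane, while FFmpeg's YUV420P order is Y, U, V. The sketch below shows the same mapping on a plain memory buffer. `Yuv420pView` and `yv12_as_yuv420p` are hypothetical names used only for illustration, and the buffer is assumed to be tightly packed; a real SDL overlay reports its own pitches, which may include padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper type, not part of SDL or FFmpeg. */
typedef struct {
    uint8_t *data[3];     /* Y, U, V in that order (YUV420P) */
    int      linesize[3]; /* bytes per row of each plane */
} Yuv420pView;

/* View a tightly packed YV12 buffer (Y plane, then V, then U)
 * through pointers arranged in YUV420P order (Y, U, V). */
static Yuv420pView yv12_as_yuv420p(uint8_t *buf, int width, int height)
{
    /* 4:2:0 subsampling halves both chroma dimensions. */
    assert(buf != NULL && width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);

    size_t y_size = (size_t)width * (size_t)height;
    size_t c_size = y_size / 4; /* size of one chroma plane */

    Yuv420pView v;
    v.data[0] = buf;                   /* Y is first in both layouts */
    v.data[1] = buf + y_size + c_size; /* U is the third plane in YV12 */
    v.data[2] = buf + y_size;          /* V is the second plane in YV12 */
    v.linesize[0] = width;
    v.linesize[1] = width / 2;
    v.linesize[2] = width / 2;
    return v;
}
```

With this view, a converter that writes Y, U, V in YUV420P order fills the YV12 buffer correctly without knowing about the swapped chroma planes.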


2. The build output is as follows:

[cpp]

root@zhangjie:/Graduation/jni# ndk-build
Install : libSDL.so => libs/armeabi/libSDL.so
Install : libffmpeg-neon.so => libs/armeabi/libffmpeg-neon.so
Compile arm : ffmpeg-test-neon <= Decodec_Video.c
/Graduation/jni/jniffmpeg/Decodec_Video.c: In function 'Java_com_zhangjie_graduation_videopalyer_jni_VideoPlayerDecode_VideoPlayer':
/Graduation/jni/jniffmpeg/Decodec_Video.c:106:1: warning: passing argument 2 of 'sws_scale' from incompatible pointer type [enabled by default]
/Graduation/jni/jniffmpeg/../ffmpeg/libswscale/swscale.h:237:5: note: expected 'uint8_t const * const*' but argument is of type 'uint8_t **'
/Graduation/jni/jniffmpeg/Decodec_Video.c:137:2: warning: 'av_close_input_file' is deprecated (declared at /Graduation/jni/jniffmpeg/../ffmpeg/libavformat/avformat.h:1533) [-Wdeprecated-declarations]
SharedLibrary : libffmpeg-test-neon.so
Install : libffmpeg-test-neon.so => libs/armeabi/libffmpeg-test-neon.so
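
The `sws_scale` warning in this log is harmless: C does not implicitly convert `uint8_t **` to `const uint8_t * const *`, even though the conversion only adds `const`. An explicit cast at the call site silences it. The sketch below reproduces the situation without FFmpeg. `sum_first_bytes` is a hypothetical stand-in whose parameter has the same shape as the second argument of `sws_scale`, and `call_with_cast` is likewise an illustrative name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in: takes its planes the way sws_scale takes
 * its source planes, as read-only pointers to read-only bytes. */
static int sum_first_bytes(const uint8_t *const planes[], int count)
{
    assert(planes != NULL && count >= 0);
    int sum = 0;
    for (int i = 0; i < count; i++)
        sum += planes[i][0];
    return sum;
}

static int call_with_cast(void)
{
    uint8_t y = 1, u = 2, v = 3;
    /* Same element type as the data array of a decoded frame. */
    uint8_t *planes[3] = { &y, &u, &v };

    /* Passing `planes` directly draws the "incompatible pointer type"
     * warning in C; the cast states the const-adding conversion. */
    return sum_first_bytes((const uint8_t *const *)planes, 3);
}
```

Applied to the decoder, the equivalent change would be casting `pFrame->data` to `(const uint8_t *const *)` in the `sws_scale` call.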

3. SDL 1.3 source code

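4. The earlier chapter, "Android Local Video Player Development: Compiling FFmpeg with the NDK", did not include swscale, so ffmpeg has to be rebuilt. The script is as follows: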

[plain]

NDK=/opt/android-ndk-r8d
PLATFORM=$NDK/platforms/android-8/arch-arm/
PREBUILT=$NDK/toolchains/arm-linux-androideabi-4.4.3/prebuilt/linux-x86
LOCAL_ARM_NEON=true
CPU=armv7-a
OPTIMIZE_CFLAGS="-mfloat-abi=softfp -mfpu=neon -marm -mcpu=cortex-a8"
PREFIX=./android/$CPU

./configure --target-os=linux \
    --prefix=$PREFIX \
    --enable-cross-compile \
    --arch=arm \
    --enable-nonfree \
    --enable-asm \
    --cpu=cortex-a8 \
    --enable-neon \
    --cc=$PREBUILT/bin/arm-linux-androideabi-gcc \
    --cross-prefix=$PREBUILT/bin/arm-linux-androideabi- \
    --nm=$PREBUILT/bin/arm-linux-androideabi-nm \
    --sysroot=$PLATFORM \
    --extra-cflags=" -O3 -fpic -DANDROID -DHAVE_SYS_UIO_H=1 $OPTIMIZE_CFLAGS " \
    --disable-shared \
    --enable-static \
    --extra-ldflags="-Wl,-rpath-link=$PLATFORM/usr/lib -L$PLATFORM/usr/lib -nostdlib -lc -lm -ldl -llog" \
    --disable-ffmpeg \
    --disable-ffplay \
    --disable-ffprobe \
    --disable-ffserver \
    --disable-encoders \
    --enable-avformat \
    --disable-optimizations \
    --disable-doc \
    --enable-pthreads \
    --disable-yasm \
    --enable-zlib \
    --enable-pic \
    --enable-small

#make clean
make -j4 install

$PREBUILT/bin/arm-linux-androideabi-ar d libavcodec/libavcodec.a inverse.o

$PREBUILT/bin/arm-linux-androideabi-ld -rpath-link=$PLATFORM/usr/lib -L$PLATFORM/usr/lib -soname libffmpeg-neon.so -shared -nostdlib -z noexecstack -Bsymbolic --whole-archive --no-undefined -o $PREFIX/libffmpeg-neon.so libavcodec/libavcodec.a libavformat/libavformat.a libavutil/libavutil.a libavfilter/libavfilter.a libswresample/libswresample.a libswscale/libswscale.a libavdevice/libavdevice.a -lc -lm -lz -ldl -llog --warn-once --dynamic-linker=/system/bin/linker $PREBUILT/lib/gcc/arm-linux-androideabi/4.4.3/libgcc.a


Android Service詳解及示例代碼

Android Service詳解及示例代碼

Android Service 詳細介紹:1、Service的概念 2、Service的生命周期 3、實例:控制音樂播放的Service一、Service的概念Servi

Android中利用SurfaceView制作抽獎轉盤的全流程攻略

Android中利用SurfaceView制作抽獎轉盤的全流程攻略

一、概述今天給大家帶來SurfaceView的一個實戰案例,話說自定義View也是各種寫,一直沒有寫過SurfaceView,這個玩意是什麼東西?什麼時候用比較好呢?可以

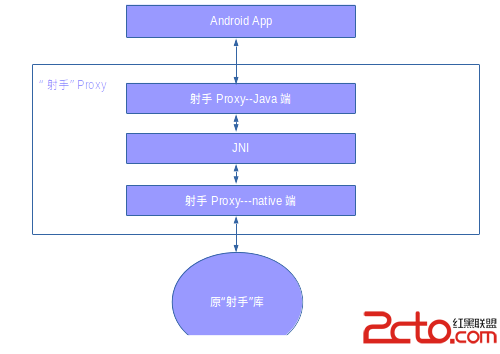

Android實戰技巧之四十五:復用原有C代碼的方案

Android實戰技巧之四十五:復用原有C代碼的方案

任務描述有一套C寫的代號為“Shooter”的核心算法庫可以解決我們面臨的一些問題,只是這個庫一直用在其他平台。我們現在的任務是將其復用到Andr

Android編程實現3D旋轉效果實例

Android編程實現3D旋轉效果實例

本文實例講述了Android編程實現3D旋轉效果的方法。分享給大家供大家參考,具體如下:下面的示例是在Android中實現圖片3D旋轉的效果。實現3D效果一般使用Open