
A Complete Analysis of the Android Camera Data Flow

Many earlier articles have already covered Android Camera; this one serves as a summary.

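As before, we start from opening the camera and follow, step by step, where the camera data flows and how memory is allocated. The first step in opening the camera is instantiating the Camera class, which calls onCreate. Here is a summary of what that method does: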

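1. Instantiates FocusManager.

2. Starts a CameraOpenThread that carries out the whole camera-open process: mCameraOpenThread.start();

3. Instantiates PreferenceInflater and initializes some parameters.

4. Instantiates TouchManager.

5. Instantiates RotateDialogController, and so on.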

The key part is the following few lines. I have mentioned them more than once before, so I won't go over them again:

[java]

// don't set mSurfaceHolder here. We have it set ONLY within

// surfaceChanged / surfaceDestroyed, other parts of the code

// assume that when it is set, the surface is also set.

SurfaceView preview = (SurfaceView) findViewById(R.id.camera_preview);

SurfaceHolder holder = preview.getHolder();

holder.addCallback(this);

This method also starts a preview thread (mCameraPreviewThread in the code below) that drives the preview process. Once initialization finishes, it starts preview.

[java]

Thread mCameraPreviewThread = new Thread(new Runnable() {

public void run() {

initializeCapabilities();

startPreview(true);

}

});

After initializing, this thread calls the app layer's startPreview method. This is the first step of a very long journey.
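The two start-up threads can be modeled as follows. This is a minimal C++ sketch, not the Camera app's Java code. The step names `openCamera`, `uiInit`, `initializeCapabilities` and `startPreview` are hypothetical labels. The sketch also assumes something the article does not state: the preview thread must wait for the open thread to finish before it can query capabilities.

```cpp
#include <cassert>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

// Hypothetical model of the Camera activity's two start-up threads.
class CameraStartup {
public:
    // Runs the open thread and the preview thread; returns the recorded step order.
    std::vector<std::string> run() {
        // Like mCameraOpenThread.start(): open the device off the UI thread.
        std::thread openThread([this] { record("openCamera"); });

        // Other onCreate work (FocusManager, PreferenceInflater, ...) runs concurrently.
        record("uiInit");

        // Like mCameraPreviewThread. Assumption: it waits for the open to complete.
        std::thread previewThread([this, &openThread] {
            openThread.join();
            record("initializeCapabilities");
            record("startPreview");
        });
        previewThread.join();
        assert(steps_.size() == 4);
        return steps_;
    }

private:
    void record(const std::string& step) {
        std::lock_guard<std::mutex> lock(mu_);  // steps_ is shared between threads
        steps_.push_back(step);
    }
    std::mutex mu_;
    std::vector<std::string> steps_;
};
```

"openCamera" and "uiInit" may be recorded in either order. The join guarantees only that capability initialization and preview come after the open.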

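Let me take a moment to describe how the camera is opened. The path from the app's call down to the open method was covered in earlier articles. The low-level open method does the following:

1. Registers the call interfaces used by the upper layer.

2. Instantiates and initializes the HAL layer. The HAL initialization process was also covered in detail in an earlier article. It is very important because it directly affects every later operation.

Back to startPreview: let's follow where it goes, step by step.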

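app layer: does some basic initialization, the most important part being setPreviewWindow (analyzed in detail earlier), then calls into the frameworks layer.

frameworks layer: only defines startPreview and calls it through the JNI layer.

JNI layer: a relay station that does no processing and simply calls the next layer's implementation.

camera client layer: likewise a relay, continuing down through the Binder mechanism.

camera server layer: initializes the display window properties, then calls the next layer.

hardware interface layer: forwards the call to the methods in camerahal_module.

camera HAL layer: the HAL's startPreview implementation is the most important part of the whole preview process; it is explained below.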

Below that, the HAL dispatches commands and interacts with the kernel driver through V4LCameraAdapter or OMXCameraAdapter.
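The layering above is essentially a chain of forwarding calls. The sketch below models it with a hypothetical `Layer` interface. The layer names mirror the list, but this is not the AOSP API. In the real stack the hops go through JNI, Binder and the HAL module interface, and the server layer also configures the preview window before forwarding.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model: each layer records its name and forwards startPreview downward.
struct Layer {
    virtual ~Layer() = default;
    virtual int startPreview(std::vector<std::string>& trace) = 0;
};

// A relay layer (frameworks, JNI, client, server, hardware interface in this model).
struct Forwarder : Layer {
    Forwarder(std::string name, std::unique_ptr<Layer> next)
        : name_(std::move(name)), next_(std::move(next)) {
        assert(next_ && "a relay layer needs a next layer");
    }
    int startPreview(std::vector<std::string>& trace) override {
        trace.push_back(name_);
        return next_->startPreview(trace);
    }
    std::string name_;
    std::unique_ptr<Layer> next_;
};

// The HAL terminates the chain; this is where the real preview work happens.
struct Hal : Layer {
    int startPreview(std::vector<std::string>& trace) override {
        trace.push_back("hal");
        return 0;  // 0 stands in for NO_ERROR
    }
};

// Builds app -> frameworks -> jni -> client -> server -> hw_interface -> hal.
std::unique_ptr<Layer> buildChain() {
    std::unique_ptr<Layer> chain = std::make_unique<Hal>();
    const char* names[] = {"hw_interface", "server", "client", "jni", "frameworks", "app"};
    for (const char* n : names)
        chain = std::make_unique<Forwarder>(n, std::move(chain));
    return chain;
}
```

Calling `buildChain()->startPreview(trace)` leaves `trace` in top-down order, from "app" to "hal".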

Let's look at the server-layer calls, which are relatively important:

[cpp]

status_t CameraService::Client::startPreview() {

LOG1("startPreview (pid %d)", getCallingPid());

return startCameraMode(CAMERA_PREVIEW_MODE);

}

[cpp]

// start preview or recording

status_t CameraService::Client::startCameraMode(camera_mode mode) {

LOG1("startCameraMode(%d)", mode);

Mutex::Autolock lock(mLock);

status_t result = checkPidAndHardware();

if (result != NO_ERROR) return result;

switch(mode) {

case CAMERA_PREVIEW_MODE:

if (mSurface == 0 && mPreviewWindow == 0) {

LOG1("mSurface is not set yet.");

// still able to start preview in this case.

}

return startPreviewMode();

case CAMERA_RECORDING_MODE:

if (mSurface == 0 && mPreviewWindow == 0) {

LOGE("mSurface or mPreviewWindow must be set before startRecordingMode.");

return INVALID_OPERATION;

}

return startRecordingMode();

default:

return UNKNOWN_ERROR;

}

}

[cpp]

status_t CameraService::Client::startPreviewMode() {

LOG1("startPreviewMode");

status_t result = NO_ERROR;

// if preview has been enabled, nothing needs to be done

if (mHardware->previewEnabled()) {

return NO_ERROR;

}

if (mPreviewWindow != 0) {

native_window_set_scaling_mode(mPreviewWindow.get(),

NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW);

native_window_set_buffers_transform(mPreviewWindow.get(),

mOrientation);

}

#ifdef OMAP_ENHANCEMENT

disableMsgType(CAMERA_MSG_COMPRESSED_BURST_IMAGE);

#endif

mHardware->setPreviewWindow(mPreviewWindow);

result = mHardware->startPreview();

return result;

}


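startPreviewMode also gives the preview window special attention during initialization, and this window always matters a great deal. I haven't covered the setPreviewWindow call here only because it is not the focus of this article. That doesn't mean you can underestimate it.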
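The control flow of `startCameraMode` and `startPreviewMode` can be condensed into the following self-contained sketch. `FakeHardware`, `PreviewClient` and the `Status` values are hypothetical stand-ins. Only the branching mirrors the code above: preview may start without a surface, recording may not, and a second start while preview is running is a no-op.

```cpp
#include <cassert>

enum class Status { NoError, InvalidOperation, UnknownError };
enum class Mode { Preview, Recording, Other };

// Hypothetical stand-in for mHardware.
struct FakeHardware {
    bool previewEnabled = false;
    bool windowSet = false;
    int startCalls = 0;

    Status startPreview() {
        // The client's early return should make a second start impossible.
        assert(!previewEnabled && "HAL startPreview called twice");
        previewEnabled = true;
        ++startCalls;
        return Status::NoError;
    }
};

// Hypothetical condensed model of CameraService::Client.
struct PreviewClient {
    FakeHardware hw;
    bool hasWindow = false;  // models mSurface / mPreviewWindow being set

    Status startCameraMode(Mode mode) {
        switch (mode) {
        case Mode::Preview:
            // A missing surface is only logged; preview may still start.
            return startPreviewMode();
        case Mode::Recording:
            if (!hasWindow) return Status::InvalidOperation;
            return Status::NoError;  // startRecordingMode() is omitted in this sketch
        default:
            return Status::UnknownError;
        }
    }

    Status startPreviewMode() {
        if (hw.previewEnabled) return Status::NoError;  // already running
        // The real code sets scaling mode and buffer transform when a window exists,
        // then always hands the (possibly null) window to the HAL.
        hw.windowSet = hasWindow;
        return hw.startPreview();
    }
};
```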

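Now let's turn to the HAL layer. Its startPreview method first calls an initialization step that runs before preview and performs some very important setup. I haven't studied this part thoroughly, so what follows is only my own understanding. Two steps deserve the most attention:

1. Allocating the preview buffers.

2. The HAL sending the CAMERA_USE_BUFFERS_PREVIEW command to the camera adapter.

The HAL's sendCommand method eventually calls OMXCameraAdapter's UseBuffers method; the preview-port variant, UseBuffersPreview, is shown below. This method binds the OMXCameraAdapter component's port to the CameraBuffers that are passed in.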
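At its core, this binding is a two-way link. The adapter stores each buffer header returned by `OMX_UseBuffer` in `mBufferHeader[index]`, and each header's `pAppPrivate` points back to the `CameraBuffer` it wraps. The sketch below models just that link. `CameraBuffer`, `BufferHeader`, `fakeUseBuffer` and `PreviewPort` are simplified hypothetical types, not the OMX IL structures or the real `OMX_UseBuffer`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Simplified stand-ins for CameraBuffer and OMX_BUFFERHEADERTYPE.
struct CameraBuffer { std::vector<std::uint8_t> mapped; };
struct BufferHeader {
    std::uint8_t* pBuffer = nullptr;  // caller-owned memory the component writes into
    std::size_t nAllocLen = 0;
    void* pAppPrivate = nullptr;      // back-pointer to the owning CameraBuffer
};

// Hypothetical OMX_UseBuffer: the component wraps caller-owned memory in a header.
std::unique_ptr<BufferHeader> fakeUseBuffer(std::uint8_t* ptr, std::size_t size) {
    auto hdr = std::make_unique<BufferHeader>();
    hdr->pBuffer = ptr;
    hdr->nAllocLen = size;
    return hdr;
}

// Models the per-buffer loop in UseBuffersPreview.
struct PreviewPort {
    std::vector<std::unique_ptr<BufferHeader>> headers;  // like mBufferHeader[]

    void useBuffers(std::vector<CameraBuffer>& bufs) {
        headers.clear();
        for (CameraBuffer& b : bufs) {
            assert(!b.mapped.empty() && "buffer must be allocated first");
            auto hdr = fakeUseBuffer(b.mapped.data(), b.mapped.size());
            hdr->pAppPrivate = &b;        // header -> CameraBuffer
            headers.push_back(std::move(hdr));
        }
    }

    // When a header comes back from the component, recover its CameraBuffer.
    static CameraBuffer* owner(const BufferHeader* hdr) {
        return static_cast<CameraBuffer*>(hdr->pAppPrivate);
    }
};
```

The back-pointers stay valid only while the `bufs` vector is not resized. In this model, the caller owns the buffers and must keep them in place for as long as the port uses them.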

[cpp]

status_t OMXCameraAdapter::UseBuffersPreview(CameraBuffer * bufArr, int num)

{

status_t ret = NO_ERROR;

OMX_ERRORTYPE eError = OMX_ErrorNone;

int tmpHeight, tmpWidth;

LOG_FUNCTION_NAME;

if(!bufArr)

{

CAMHAL_LOGEA("NULL pointer passed for buffArr");

LOG_FUNCTION_NAME_EXIT;

return BAD_VALUE;

}

OMXCameraPortParameters * mPreviewData = NULL;

OMXCameraPortParameters *measurementData = NULL;

mPreviewData = &mCameraAdapterParameters.mCameraPortParams[mCameraAdapterParameters.mPrevPortIndex]; // mPreviewData points to the preview port's parameters

measurementData = &mCameraAdapterParameters.mCameraPortParams[mCameraAdapterParameters.mMeasurementPortIndex];

mPreviewData->mNumBufs = num ;

if ( 0 != mUsePreviewSem.Count() )

{

CAMHAL_LOGEB("Error mUsePreviewSem semaphore count %d", mUsePreviewSem.Count());

LOG_FUNCTION_NAME_EXIT;

return NO_INIT;

}

if(mPreviewData->mNumBufs != num)

{

CAMHAL_LOGEA("Current number of buffers doesnt equal new num of buffers passed!");

LOG_FUNCTION_NAME_EXIT;

return BAD_VALUE;

}

mStateSwitchLock.lock();

if ( mComponentState == OMX_StateLoaded ) {

if (mPendingPreviewSettings & SetLDC) {

mPendingPreviewSettings &= ~SetLDC;

ret = setLDC(mIPP);

if ( NO_ERROR != ret ) {

CAMHAL_LOGEB("setLDC() failed %d", ret);

}

}

if (mPendingPreviewSettings & SetNSF) {

mPendingPreviewSettings &= ~SetNSF;

ret = setNSF(mIPP);

if ( NO_ERROR != ret ) {

CAMHAL_LOGEB("setNSF() failed %d", ret);

}

}

if (mPendingPreviewSettings & SetCapMode) {

mPendingPreviewSettings &= ~SetCapMode;

ret = setCaptureMode(mCapMode);

if ( NO_ERROR != ret ) {

CAMHAL_LOGEB("setCaptureMode() failed %d", ret);

}

}

if(mCapMode == OMXCameraAdapter::VIDEO_MODE) {

if (mPendingPreviewSettings & SetVNF) {

mPendingPreviewSettings &= ~SetVNF;

ret = enableVideoNoiseFilter(mVnfEnabled);

if ( NO_ERROR != ret){

CAMHAL_LOGEB("Error configuring VNF %x", ret);

}

}

if (mPendingPreviewSettings & SetVSTAB) {

mPendingPreviewSettings &= ~SetVSTAB;

ret = enableVideoStabilization(mVstabEnabled);

if ( NO_ERROR != ret) {

CAMHAL_LOGEB("Error configuring VSTAB %x", ret);

}

}

}

}

ret = setSensorOrientation(mSensorOrientation);

if ( NO_ERROR != ret )

{

CAMHAL_LOGEB("Error configuring Sensor Orientation %x", ret);

mSensorOrientation = 0;

}

if ( mComponentState == OMX_StateLoaded )

{

///Register for IDLE state switch event

ret = RegisterForEvent(mCameraAdapterParameters.mHandleComp,

OMX_EventCmdComplete,

OMX_CommandStateSet,

OMX_StateIdle,

mUsePreviewSem);

if(ret!=NO_ERROR)

{

CAMHAL_LOGEB("Error in registering for event %d", ret);

goto EXIT;

}

///Once we get the buffers, move component state to idle state and pass the buffers to OMX comp using UseBuffer

eError = OMX_SendCommand (mCameraAdapterParameters.mHandleComp ,

OMX_CommandStateSet,

OMX_StateIdle,

NULL);

CAMHAL_LOGDB("OMX_SendCommand(OMX_CommandStateSet) 0x%x", eError);

GOTO_EXIT_IF((eError!=OMX_ErrorNone), eError);

mComponentState = OMX_StateIdle;

}

else

{

///Register for Preview port ENABLE event

ret = RegisterForEvent(mCameraAdapterParameters.mHandleComp,

OMX_EventCmdComplete,

OMX_CommandPortEnable,

mCameraAdapterParameters.mPrevPortIndex,

mUsePreviewSem);

if ( NO_ERROR != ret )

{

CAMHAL_LOGEB("Error in registering for event %d", ret);

goto EXIT;

}

///Enable Preview Port

eError = OMX_SendCommand(mCameraAdapterParameters.mHandleComp,

OMX_CommandPortEnable,

mCameraAdapterParameters.mPrevPortIndex,

NULL);

}

///Configure DOMX to use either gralloc handles or vptrs

OMX_TI_PARAMUSENATIVEBUFFER domxUseGrallocHandles;

OMX_INIT_STRUCT_PTR (&domxUseGrallocHandles, OMX_TI_PARAMUSENATIVEBUFFER);

domxUseGrallocHandles.nPortIndex = mCameraAdapterParameters.mPrevPortIndex;

domxUseGrallocHandles.bEnable = OMX_TRUE;

eError = OMX_SetParameter(mCameraAdapterParameters.mHandleComp,

(OMX_INDEXTYPE)OMX_TI_IndexUseNativeBuffers, &domxUseGrallocHandles);

if(eError!=OMX_ErrorNone)

{

CAMHAL_LOGEB("OMX_SetParameter - %x", eError);

}

GOTO_EXIT_IF((eError!=OMX_ErrorNone), eError);

OMX_BUFFERHEADERTYPE *pBufferHdr;

for(int index=0;index<num;index++) {

OMX_U8 *ptr;

ptr = (OMX_U8 *)camera_buffer_get_omx_ptr (&bufArr[index]);

eError = OMX_UseBuffer( mCameraAdapterParameters.mHandleComp,

&pBufferHdr,

mCameraAdapterParameters.mPrevPortIndex,

0,

mPreviewData->mBufSize,

ptr);

if(eError!=OMX_ErrorNone)

{

CAMHAL_LOGEB("OMX_UseBuffer-0x%x", eError);

}

GOTO_EXIT_IF((eError!=OMX_ErrorNone), eError);

pBufferHdr->pAppPrivate = (OMX_PTR)&bufArr[index];

pBufferHdr->nSize = sizeof(OMX_BUFFERHEADERTYPE);

pBufferHdr->nVersion.s.nVersionMajor = 1 ;

pBufferHdr->nVersion.s.nVersionMinor = 1 ;

pBufferHdr->nVersion.s.nRevision = 0 ;

pBufferHdr->nVersion.s.nStep = 0;

mPreviewData->mBufferHeader[index] = pBufferHdr; // through the buffer header, the component's port structure is bound to the allocated CameraBuffer

}

if ( mMeasurementEnabled )

{

for( int i = 0; i < num; i++ )

{

OMX_BUFFERHEADERTYPE *pBufHdr;

OMX_U8 *ptr;

ptr = (OMX_U8 *)camera_buffer_get_omx_ptr (&mPreviewDataBuffers[i]);

eError = OMX_UseBuffer( mCameraAdapterParameters.mHandleComp,

&pBufHdr,

mCameraAdapterParameters.mMeasurementPortIndex,

0,

measurementData->mBufSize,

ptr);

if ( eError == OMX_ErrorNone )

{

pBufHdr->pAppPrivate = (OMX_PTR *)&mPreviewDataBuffers[i];

pBufHdr->nSize = sizeof(OMX_BUFFERHEADERTYPE);

pBufHdr->nVersion.s.nVersionMajor = 1 ;

pBufHdr->nVersion.s.nVersionMinor = 1 ;

pBufHdr->nVersion.s.nRevision = 0 ;

pBufHdr->nVersion.s.nStep = 0;

measurementData->mBufferHeader[i] = pBufHdr;

}

else

{

CAMHAL_LOGEB("OMX_UseBuffer -0x%x", eError);

ret = BAD_VALUE;

break;

}

}

}

CAMHAL_LOGDA("Registering preview buffers");

ret = mUsePreviewSem.WaitTimeout(OMX_CMD_TIMEOUT);

//If something bad happened while we wait

if (mComponentState == OMX_StateInvalid)

{

CAMHAL_LOGEA("Invalid State after Registering preview buffers Exitting!!!");

goto EXIT;

}

if ( NO_ERROR == ret )

{

CAMHAL_LOGDA("Preview buffer registration successfull");

}

else

{

if ( mComponentState == OMX_StateLoaded )

{

ret |= RemoveEvent(mCameraAdapterParameters.mHandleComp,

OMX_EventCmdComplete,

OMX_CommandStateSet,

OMX_StateIdle,

NULL);

}

else

{

ret |= SignalEvent(mCameraAdapterParameters.mHandleComp,

OMX_EventCmdComplete,

OMX_CommandPortEnable,

mCameraAdapterParameters.mPrevPortIndex,

NULL);

}

CAMHAL_LOGEA("Timeout expired on preview buffer registration");

goto EXIT;

}

LOG_FUNCTION_NAME_EXIT;

return (ret | ErrorUtils::omxToAndroidError(eError));

///If there is any failure, we reach here.

///Here, we do any resource freeing and convert from OMX error code to Camera Hal error code

EXIT:

mStateSwitchLock.unlock();

CAMHAL_LOGEB("Exiting function %s because of ret %d eError=%x", __FUNCTION__, ret, eError);

performCleanupAfterError();

CAMHAL_LOGEB("Exiting function %s because of ret %d eError=%x", __FUNCTION__, ret, eError);

LOG_FUNCTION_NAME_EXIT;

return (ret | ErrorUtils::omxToAndroidError(eError));

}


The process above feels rather abstract to me; the further down the stack you go, the harder it is to follow.
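One part becomes less abstract once isolated: the register / send / wait handshake. UseBuffersPreview registers `mUsePreviewSem` for the `OMX_EventCmdComplete` it expects and sends the command. It then blocks in `mUsePreviewSem.WaitTimeout(OMX_CMD_TIMEOUT)` until the component's event path signals the semaphore. The sketch below models that with a small counting semaphore built on `std::condition_variable`. `Semaphore` and `sendCommandAndWait` are hypothetical stand-ins for the HAL's own semaphore type and event handling, and the component is simulated by a thread.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

// Minimal counting semaphore with a timed wait (stand-in for mUsePreviewSem).
class Semaphore {
public:
    void signal() {
        std::lock_guard<std::mutex> lock(mu_);
        ++count_;
        cv_.notify_one();
    }
    // Returns true if signalled within the timeout, false if it timed out.
    bool waitTimeout(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mu_);
        if (!cv_.wait_for(lock, timeout, [this] { return count_ > 0; }))
            return false;
        --count_;
        return true;
    }
    int count() {
        std::lock_guard<std::mutex> lock(mu_);
        return count_;
    }

private:
    std::mutex mu_;
    std::condition_variable cv_;
    int count_ = 0;
};

// Simulates a command whose completion event arrives after `delay`;
// returns whether the wait saw it before `timeout` expired.
bool sendCommandAndWait(std::chrono::milliseconds delay,
                        std::chrono::milliseconds timeout) {
    Semaphore sem;
    assert(sem.count() == 0);  // like the mUsePreviewSem.Count() sanity check
    std::thread component([&sem, delay] {
        std::this_thread::sleep_for(delay);
        sem.signal();          // like the completion event signalling the semaphore
    });
    bool ok = sem.waitTimeout(timeout);
    component.join();          // no dangling reference to sem after return
    return ok;
}
```

On timeout, the real code does more than return `false`. It removes or signals the registered event (RemoveEvent / SignalEvent) and jumps to its cleanup path. Registering before sending also appears to matter: an event that completed before registration would have nothing to signal.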

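At this point the camera buffers have been allocated and registered, and setPreviewWindow has been initialized, so real preview can begin. The start-preview step boils down to one thing. The fill-buffer call hands each allocated buffer to the component's port, and once the port has processed the video data, it puts the result into the buffer you gave it. It is like asking someone to serve you rice: you hand over your bowl, they fill it and hand it back, and now the bowl has rice in it. In the same way, our buffer now holds data.

So how do we get that data back? The buffer is empty when we hand it to the port. Once the port has filled it, the port notifies us and hands the buffer back at the same time. This happens through the FillBufferDone callback.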
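The bowl analogy maps onto a simple round trip. The HAL hands an empty buffer to the port (`OMX_FillThisBuffer` in OMX IL), the component fills it, and the FillBufferDone callback returns the same buffer. The single-threaded sketch below models that cycle. `Port`, `Frame`, `BufStatus` and `produceFrame` are hypothetical names. A real OMX component fills buffers asynchronously on its own threads.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical buffer state, loosely following OMXCameraPortParameters::mStatus.
enum class BufStatus { Idle, FillPending, Done };

struct Frame {
    std::vector<std::uint8_t> data;
    BufStatus status = BufStatus::Idle;
};

class Port {
public:
    using Callback = std::function<void(Frame&)>;
    explicit Port(Callback fillBufferDone) : done_(std::move(fillBufferDone)) {}

    // Like OMX_FillThisBuffer: hand an empty "bowl" to the component.
    void fillThisBuffer(Frame& f) {
        assert(f.status != BufStatus::FillPending && "buffer already queued");
        f.status = BufStatus::FillPending;
        queue_.push_back(&f);
    }

    // Simulated component: fill the oldest queued buffer, then call back.
    bool produceFrame(std::uint8_t value) {
        if (queue_.empty()) return false;  // no empty bowl: the frame is dropped
        Frame* f = queue_.front();
        queue_.pop_front();
        for (auto& byte : f->data) byte = value;
        f->status = BufStatus::Done;
        done_(*f);                         // like FillBufferDone
        return true;
    }

private:
    Callback done_;
    std::deque<Frame*> queue_;
};
```

In this model, the callback must eventually hand each buffer back with `fillThisBuffer`. Otherwise the port runs out of empty buffers after one frame per buffer, and `produceFrame` starts returning `false`.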

[cpp]


/*========================================================*/

/* @ fn SampleTest_FillBufferDone :: Application callback*/

/*========================================================*/

OMX_ERRORTYPE OMXCameraAdapter::OMXCameraAdapterFillBufferDone(OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_BUFFERHEADERTYPE* pBuffHeader)

{

status_t stat = NO_ERROR;

status_t res1, res2;

OMXCameraPortParameters *pPortParam;

OMX_ERRORTYPE eError = OMX_ErrorNone;

CameraFrame::FrameType typeOfFrame = CameraFrame::ALL_FRAMES;

unsigned int refCount = 0;

BaseCameraAdapter::AdapterState state, nextState;

BaseCameraAdapter::getState(state);

BaseCameraAdapter::getNextState(nextState);

sp<CameraMetadataResult> metadataResult = NULL;

unsigned int mask = 0xFFFF;

CameraFrame cameraFrame;

OMX_OTHER_EXTRADATATYPE *extraData;

OMX_TI_ANCILLARYDATATYPE *ancillaryData = NULL;

bool snapshotFrame = false;

if ( NULL == pBuffHeader ) {

return OMX_ErrorBadParameter;

}

#ifdef CAMERAHAL_OMX_PROFILING

storeProfilingData(pBuffHeader);

#endif

res1 = res2 = NO_ERROR;

if ( !pBuffHeader || !pBuffHeader->pBuffer ) {

CAMHAL_LOGEA("NULL Buffer from OMX");

return OMX_ErrorNone;

}

pPortParam = &(mCameraAdapterParameters.mCameraPortParams[pBuffHeader->nOutputPortIndex]);

// Find buffer and mark it as filled

for (int i = 0; i < pPortParam->mNumBufs; i++) {

if (pPortParam->mBufferHeader[i] == pBuffHeader) {

pPortParam->mStatus[i] = OMXCameraPortParameters::DONE;

}

}

if (pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_PREVIEW)

{

if ( ( PREVIEW_ACTIVE & state ) != PREVIEW_ACTIVE )

{

return OMX_ErrorNone;

}

if ( mWaitingForSnapshot ) {

extraData = getExtradata(pBuffHeader->pPlatformPrivate,

(OMX_EXTRADATATYPE) OMX_AncillaryData);

if ( NULL != extraData ) {

ancillaryData = (OMX_TI_ANCILLARYDATATYPE*) extraData->data;

if ((OMX_2D_Snap == ancillaryData->eCameraView)

|| (OMX_3D_Left_Snap == ancillaryData->eCameraView)

|| (OMX_3D_Right_Snap == ancillaryData->eCameraView)) {

snapshotFrame = OMX_TRUE;

} else {

snapshotFrame = OMX_FALSE;

}

mPending3Asettings |= SetFocus;

}

}

///Prepare the frames to be sent - initialize CameraFrame object and reference count

// TODO(XXX): ancillary data for snapshot frame is not being sent for video snapshot

// if we are waiting for a snapshot and in video mode...go ahead and send

// this frame as a snapshot

if( mWaitingForSnapshot && (mCapturedFrames > 0) &&

(snapshotFrame || (mCapMode == VIDEO_MODE)))

{

typeOfFrame = CameraFrame::SNAPSHOT_FRAME;

mask = (unsigned int)CameraFrame::SNAPSHOT_FRAME;

// video snapshot gets ancillary data and wb info from last snapshot frame

mCaptureAncillaryData = ancillaryData;

mWhiteBalanceData = NULL;

extraData = getExtradata(pBuffHeader->pPlatformPrivate,

(OMX_EXTRADATATYPE) OMX_WhiteBalance);

if ( NULL != extraData )

{

mWhiteBalanceData = (OMX_TI_WHITEBALANCERESULTTYPE*) extraData->data;

}

}

else

{

typeOfFrame = CameraFrame::PREVIEW_FRAME_SYNC;

mask = (unsigned int)CameraFrame::PREVIEW_FRAME_SYNC;

}

if (mRecording)

{

mask |= (unsigned int)CameraFrame::VIDEO_FRAME_SYNC;

mFramesWithEncoder++;

}

//LOGV("FBD pBuffer = 0x%x", pBuffHeader->pBuffer);

if( mWaitingForSnapshot )

{

if (!mBracketingEnabled &&

((HIGH_SPEED == mCapMode) || (VIDEO_MODE == mCapMode)) )

{

notifyShutterSubscribers();

}

}

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

mFramesWithDisplay++;

mFramesWithDucati--;

#ifdef CAMERAHAL_DEBUG

if(mBuffersWithDucati.indexOfKey((uint32_t)pBuffHeader->pBuffer)<0)

{

LOGE("Buffer was never with Ducati!! %p", pBuffHeader->pBuffer);

for(unsigned int i=0;i<mBuffersWithDucati.size();i++) LOGE("0x%x", mBuffersWithDucati.keyAt(i));

}

mBuffersWithDucati.removeItem((int)pBuffHeader->pBuffer);

#endif

if(mDebugFcs)

CAMHAL_LOGEB("C[%d] D[%d] E[%d]", mFramesWithDucati, mFramesWithDisplay, mFramesWithEncoder);

recalculateFPS();

createPreviewMetadata(pBuffHeader, metadataResult, pPortParam->mWidth, pPortParam->mHeight);

if ( NULL != metadataResult.get() ) {

notifyMetadataSubscribers(metadataResult);

metadataResult.clear();

}

{

Mutex::Autolock lock(mFaceDetectionLock);

if ( mFDSwitchAlgoPriority ) {

//Disable region priority and enable face priority for AF

setAlgoPriority(REGION_PRIORITY, FOCUS_ALGO, false);

setAlgoPriority(FACE_PRIORITY, FOCUS_ALGO , true);

//Disable Region priority and enable Face priority

setAlgoPriority(REGION_PRIORITY, EXPOSURE_ALGO, false);

setAlgoPriority(FACE_PRIORITY, EXPOSURE_ALGO, true);

mFDSwitchAlgoPriority = false;

}

}

sniffDccFileDataSave(pBuffHeader);

stat |= advanceZoom();

// On the fly update to 3A settings not working

// Do not update 3A here if we are in the middle of a capture

// or in the middle of transitioning to it

if( mPending3Asettings &&

( (nextState & CAPTURE_ACTIVE) == 0 ) &&

( (state & CAPTURE_ACTIVE) == 0 ) ) {

apply3Asettings(mParameters3A);

}

}

else if( pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_MEASUREMENT )

{

typeOfFrame = CameraFrame::FRAME_DATA_SYNC;

mask = (unsigned int)CameraFrame::FRAME_DATA_SYNC;

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

else if( pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_IMAGE_OUT_IMAGE )

{

OMX_COLOR_FORMATTYPE pixFormat;

const char *valstr = NULL;

pixFormat = pPortParam->mColorFormat;

if ( OMX_COLOR_FormatUnused == pixFormat )

{

typeOfFrame = CameraFrame::IMAGE_FRAME;

mask = (unsigned int) CameraFrame::IMAGE_FRAME;

} else if ( pixFormat == OMX_COLOR_FormatCbYCrY &&

((mPictureFormatFromClient &&

!strcmp(mPictureFormatFromClient,

CameraParameters::PIXEL_FORMAT_JPEG)) ||

!mPictureFormatFromClient) ) {

// signals to callbacks that this needs to be converted to jpeg

// before returning to framework

typeOfFrame = CameraFrame::IMAGE_FRAME;

mask = (unsigned int) CameraFrame::IMAGE_FRAME;

cameraFrame.mQuirks |= CameraFrame::ENCODE_RAW_YUV422I_TO_JPEG;

cameraFrame.mQuirks |= CameraFrame::FORMAT_YUV422I_UYVY;

// populate exif data and pass to subscribers via quirk

// subscriber is in charge of freeing exif data

ExifElementsTable* exif = new ExifElementsTable();

setupEXIF_libjpeg(exif, mCaptureAncillaryData, mWhiteBalanceData);

cameraFrame.mQuirks |= CameraFrame::HAS_EXIF_DATA;

cameraFrame.mCookie2 = (void*) exif;

} else {

typeOfFrame = CameraFrame::RAW_FRAME;

mask = (unsigned int) CameraFrame::RAW_FRAME;

}

pPortParam->mImageType = typeOfFrame;

if((mCapturedFrames>0) && !mCaptureSignalled)

{

mCaptureSignalled = true;

mCaptureSem.Signal();

}

if( ( CAPTURE_ACTIVE & state ) != CAPTURE_ACTIVE )

{

goto EXIT;

}

{

Mutex::Autolock lock(mBracketingLock);

if ( mBracketingEnabled )

{

doBracketing(pBuffHeader, typeOfFrame);

return eError;

}

}

if (mZoomBracketingEnabled) {

doZoom(mZoomBracketingValues[mCurrentZoomBracketing]);

CAMHAL_LOGDB("Current Zoom Bracketing: %d", mZoomBracketingValues[mCurrentZoomBracketing]);

mCurrentZoomBracketing++;

if (mCurrentZoomBracketing == ARRAY_SIZE(mZoomBracketingValues)) {

mZoomBracketingEnabled = false;

}

}

if ( 1 > mCapturedFrames )

{

goto EXIT;

}

#ifdef OMAP_ENHANCEMENT_CPCAM

setMetaData(cameraFrame.mMetaData, pBuffHeader->pPlatformPrivate);

#endif

CAMHAL_LOGDB("Captured Frames: %d", mCapturedFrames);

mCapturedFrames--;

#ifdef CAMERAHAL_USE_RAW_IMAGE_SAVING

if (mYuvCapture) {

struct timeval timeStampUsec;

gettimeofday(&timeStampUsec, NULL);

time_t saveTime;

time(&saveTime);

const struct tm * const timeStamp = gmtime(&saveTime);

char filename[256];

snprintf(filename,256, "%s/yuv_%d_%d_%d_%lu.yuv",

kYuvImagesOutputDirPath,

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec);

const status_t saveBufferStatus = saveBufferToFile(((CameraBuffer*)pBuffHeader->pAppPrivate)->mapped,

pBuffHeader->nFilledLen, filename);

if (saveBufferStatus != OK) {

CAMHAL_LOGE("ERROR: %d, while saving yuv!", saveBufferStatus);

} else {

CAMHAL_LOGD("yuv_%d_%d_%d_%lu.yuv successfully saved in %s",

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec,

kYuvImagesOutputDirPath);

}

}

#endif

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

else if (pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_VIDEO) {

typeOfFrame = CameraFrame::RAW_FRAME;

pPortParam->mImageType = typeOfFrame;

{

Mutex::Autolock lock(mLock);

if( ( CAPTURE_ACTIVE & state ) != CAPTURE_ACTIVE ) {

goto EXIT;

}

}

CAMHAL_LOGD("RAW buffer done on video port, length = %d", pBuffHeader->nFilledLen);

mask = (unsigned int) CameraFrame::RAW_FRAME;

#ifdef CAMERAHAL_USE_RAW_IMAGE_SAVING

if ( mRawCapture ) {

struct timeval timeStampUsec;

gettimeofday(&timeStampUsec, NULL);

time_t saveTime;

time(&saveTime);

const struct tm * const timeStamp = gmtime(&saveTime);

char filename[256];

snprintf(filename,256, "%s/raw_%d_%d_%d_%lu.raw",

kRawImagesOutputDirPath,

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec);

const status_t saveBufferStatus = saveBufferToFile( ((CameraBuffer*)pBuffHeader->pAppPrivate)->mapped,

pBuffHeader->nFilledLen, filename);

if (saveBufferStatus != OK) {

CAMHAL_LOGE("ERROR: %d , while saving raw!", saveBufferStatus);

} else {

CAMHAL_LOGD("raw_%d_%d_%d_%lu.raw successfully saved in %s",

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec,

kRawImagesOutputDirPath);

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

}

#endif

} else {

CAMHAL_LOGEA("Frame received for non-(preview/capture/measure) port. This is yet to be supported");

goto EXIT;

}

if ( NO_ERROR != stat )

{

CameraBuffer *camera_buffer;

camera_buffer = (CameraBuffer *)pBuffHeader->pAppPrivate;

CAMHAL_LOGDB("sendFrameToSubscribers error: %d", stat);

returnFrame(camera_buffer, typeOfFrame);

}

return eError;

EXIT:

CAMHAL_LOGEB("Exiting function %s because of ret %d eError=%x", __FUNCTION__, stat, eError);

if ( NO_ERROR != stat )

{

if ( NULL != mErrorNotifier )

{

mErrorNotifier->errorNotify(CAMERA_ERROR_UNKNOWN);

}

}

return eError;

}
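Most of OMXCameraAdapterFillBufferDone comes down to deciding which subscribers a filled buffer goes to. The sketch below extracts the preview-port decision into a pure function. The bit values and the `PreviewState` struct are hypothetical; the real `CameraFrame::FrameType` values are defined in the TI HAL headers. The conditions follow the code above.

```cpp
#include <cassert>

// Hypothetical bit values; the real ones come from CameraFrame::FrameType.
enum FrameBits : unsigned {
    PREVIEW_FRAME_SYNC = 1u << 0,
    SNAPSHOT_FRAME     = 1u << 1,
    VIDEO_FRAME_SYNC   = 1u << 2,
};

struct PreviewState {
    bool waitingForSnapshot = false;  // mWaitingForSnapshot
    int capturedFrames = 0;           // mCapturedFrames
    bool snapshotFrame = false;       // ancillary data marks a snapshot view
    bool videoMode = false;           // mCapMode == VIDEO_MODE
    bool recording = false;           // mRecording
};

// Mirrors the mask selection for OMX_CAMERA_PORT_VIDEO_OUT_PREVIEW.
unsigned previewMask(const PreviewState& s) {
    assert(s.capturedFrames >= 0);
    unsigned mask;
    if (s.waitingForSnapshot && s.capturedFrames > 0 &&
        (s.snapshotFrame || s.videoMode)) {
        mask = SNAPSHOT_FRAME;
    } else {
        mask = PREVIEW_FRAME_SYNC;
    }
    if (s.recording) mask |= VIDEO_FRAME_SYNC;  // the encoder also receives the frame
    return mask;
}
```

Keeping the decision free of side effects makes it easy to check in isolation. In the real callback, it is interleaved with counter updates (mFramesWithEncoder, mFramesWithDisplay) and shutter notifications.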

#endif

/*========================================================*/

/* @ fn SampleTest_FillBufferDone :: Application callback*/

/*========================================================*/

OMX_ERRORTYPE OMXCameraAdapter::OMXCameraAdapterFillBufferDone(OMX_IN OMX_HANDLETYPE hComponent,

OMX_IN OMX_BUFFERHEADERTYPE* pBuffHeader)

{

status_t stat = NO_ERROR;

status_t res1, res2;

OMXCameraPortParameters *pPortParam;

OMX_ERRORTYPE eError = OMX_ErrorNone;

CameraFrame::FrameType typeOfFrame = CameraFrame::ALL_FRAMES;

unsigned int refCount = 0;

BaseCameraAdapter::AdapterState state, nextState;

BaseCameraAdapter::getState(state);

BaseCameraAdapter::getNextState(nextState);

sp<CameraMetadataResult> metadataResult = NULL;

unsigned int mask = 0xFFFF;

CameraFrame cameraFrame;

OMX_OTHER_EXTRADATATYPE *extraData;

OMX_TI_ANCILLARYDATATYPE *ancillaryData = NULL;

bool snapshotFrame = false;

if ( NULL == pBuffHeader ) {

return OMX_ErrorBadParameter;

}

#ifdef CAMERAHAL_OMX_PROFILING

storeProfilingData(pBuffHeader);

#endif

res1 = res2 = NO_ERROR;

if ( !pBuffHeader || !pBuffHeader->pBuffer ) {

CAMHAL_LOGEA("NULL Buffer from OMX");

return OMX_ErrorNone;

}

pPortParam = &(mCameraAdapterParameters.mCameraPortParams[pBuffHeader->nOutputPortIndex]);

// Find buffer and mark it as filled

for (int i = 0; i < pPortParam->mNumBufs; i++) {

if (pPortParam->mBufferHeader[i] == pBuffHeader) {

pPortParam->mStatus[i] = OMXCameraPortParameters::DONE;

}

}

if (pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_PREVIEW)

{

if ( ( PREVIEW_ACTIVE & state ) != PREVIEW_ACTIVE )

{

return OMX_ErrorNone;

}

if ( mWaitingForSnapshot ) {

extraData = getExtradata(pBuffHeader->pPlatformPrivate,

(OMX_EXTRADATATYPE) OMX_AncillaryData);

if ( NULL != extraData ) {

ancillaryData = (OMX_TI_ANCILLARYDATATYPE*) extraData->data;

if ((OMX_2D_Snap == ancillaryData->eCameraView)

|| (OMX_3D_Left_Snap == ancillaryData->eCameraView)

|| (OMX_3D_Right_Snap == ancillaryData->eCameraView)) {

snapshotFrame = OMX_TRUE;

} else {

snapshotFrame = OMX_FALSE;

}

mPending3Asettings |= SetFocus;

}

}

///Prepare the frames to be sent - initialize CameraFrame object and reference count

// TODO(XXX): ancillary data for snapshot frame is not being sent for video snapshot

// if we are waiting for a snapshot and in video mode...go ahead and send

// this frame as a snapshot

if( mWaitingForSnapshot && (mCapturedFrames > 0) &&

(snapshotFrame || (mCapMode == VIDEO_MODE)))

{

typeOfFrame = CameraFrame::SNAPSHOT_FRAME;

mask = (unsigned int)CameraFrame::SNAPSHOT_FRAME;

// video snapshot gets ancillary data and wb info from last snapshot frame

mCaptureAncillaryData = ancillaryData;

mWhiteBalanceData = NULL;

extraData = getExtradata(pBuffHeader->pPlatformPrivate,

(OMX_EXTRADATATYPE) OMX_WhiteBalance);

if ( NULL != extraData )

{

mWhiteBalanceData = (OMX_TI_WHITEBALANCERESULTTYPE*) extraData->data;

}

}

else

{

typeOfFrame = CameraFrame::PREVIEW_FRAME_SYNC;

mask = (unsigned int)CameraFrame::PREVIEW_FRAME_SYNC;

}

if (mRecording)

{

mask |= (unsigned int)CameraFrame::VIDEO_FRAME_SYNC;

mFramesWithEncoder++;

}

//LOGV("FBD pBuffer = 0x%x", pBuffHeader->pBuffer);

if( mWaitingForSnapshot )

{

if (!mBracketingEnabled &&

((HIGH_SPEED == mCapMode) || (VIDEO_MODE == mCapMode)) )

{

notifyShutterSubscribers();

}

}

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

mFramesWithDisplay++;

mFramesWithDucati--;

#ifdef CAMERAHAL_DEBUG

if(mBuffersWithDucati.indexOfKey((uint32_t)pBuffHeader->pBuffer)<0)

{

LOGE("Buffer was never with Ducati!! %p", pBuffHeader->pBuffer);

for(unsigned int i=0;i<mBuffersWithDucati.size();i++) LOGE("0x%x", mBuffersWithDucati.keyAt(i));

}

mBuffersWithDucati.removeItem((int)pBuffHeader->pBuffer);

#endif

if(mDebugFcs)

CAMHAL_LOGEB("C[%d] D[%d] E[%d]", mFramesWithDucati, mFramesWithDisplay, mFramesWithEncoder);

recalculateFPS();

createPreviewMetadata(pBuffHeader, metadataResult, pPortParam->mWidth, pPortParam->mHeight);

if ( NULL != metadataResult.get() ) {

notifyMetadataSubscribers(metadataResult);

metadataResult.clear();

}

{

Mutex::Autolock lock(mFaceDetectionLock);

if ( mFDSwitchAlgoPriority ) {

//Disable region priority and enable face priority for AF

setAlgoPriority(REGION_PRIORITY, FOCUS_ALGO, false);

setAlgoPriority(FACE_PRIORITY, FOCUS_ALGO , true);

//Disable region priority and enable face priority for AE (exposure)

setAlgoPriority(REGION_PRIORITY, EXPOSURE_ALGO, false);

setAlgoPriority(FACE_PRIORITY, EXPOSURE_ALGO, true);

mFDSwitchAlgoPriority = false;

}

}

sniffDccFileDataSave(pBuffHeader);

stat |= advanceZoom();

// On the fly update to 3A settings not working

// Do not update 3A here if we are in the middle of a capture

// or in the middle of transitioning to it

if( mPending3Asettings &&

( (nextState & CAPTURE_ACTIVE) == 0 ) &&

( (state & CAPTURE_ACTIVE) == 0 ) ) {

apply3Asettings(mParameters3A);

}

}

else if( pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_MEASUREMENT )

{

typeOfFrame = CameraFrame::FRAME_DATA_SYNC;

mask = (unsigned int)CameraFrame::FRAME_DATA_SYNC;

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

else if( pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_IMAGE_OUT_IMAGE )

{

OMX_COLOR_FORMATTYPE pixFormat;

const char *valstr = NULL;

pixFormat = pPortParam->mColorFormat;

if ( OMX_COLOR_FormatUnused == pixFormat )

{

typeOfFrame = CameraFrame::IMAGE_FRAME;

mask = (unsigned int) CameraFrame::IMAGE_FRAME;

} else if ( pixFormat == OMX_COLOR_FormatCbYCrY &&

((mPictureFormatFromClient &&

!strcmp(mPictureFormatFromClient,

CameraParameters::PIXEL_FORMAT_JPEG)) ||

!mPictureFormatFromClient) ) {

// signals to callbacks that this needs to be converted to jpeg

// before returning to framework

typeOfFrame = CameraFrame::IMAGE_FRAME;

mask = (unsigned int) CameraFrame::IMAGE_FRAME;

cameraFrame.mQuirks |= CameraFrame::ENCODE_RAW_YUV422I_TO_JPEG;

cameraFrame.mQuirks |= CameraFrame::FORMAT_YUV422I_UYVY;

// populate exif data and pass to subscribers via quirk

// subscriber is in charge of freeing exif data

ExifElementsTable* exif = new ExifElementsTable();

setupEXIF_libjpeg(exif, mCaptureAncillaryData, mWhiteBalanceData);

cameraFrame.mQuirks |= CameraFrame::HAS_EXIF_DATA;

cameraFrame.mCookie2 = (void*) exif;

} else {

typeOfFrame = CameraFrame::RAW_FRAME;

mask = (unsigned int) CameraFrame::RAW_FRAME;

}

pPortParam->mImageType = typeOfFrame;

if((mCapturedFrames>0) && !mCaptureSignalled)

{

mCaptureSignalled = true;

mCaptureSem.Signal();

}

if( ( CAPTURE_ACTIVE & state ) != CAPTURE_ACTIVE )

{

goto EXIT;

}

{

Mutex::Autolock lock(mBracketingLock);

if ( mBracketingEnabled )

{

doBracketing(pBuffHeader, typeOfFrame);

return eError;

}

}

if (mZoomBracketingEnabled) {

doZoom(mZoomBracketingValues[mCurrentZoomBracketing]);

CAMHAL_LOGDB("Current Zoom Bracketing: %d", mZoomBracketingValues[mCurrentZoomBracketing]);

mCurrentZoomBracketing++;

if (mCurrentZoomBracketing == ARRAY_SIZE(mZoomBracketingValues)) {

mZoomBracketingEnabled = false;

}

}

if ( 1 > mCapturedFrames )

{

goto EXIT;

}

#ifdef OMAP_ENHANCEMENT_CPCAM

setMetaData(cameraFrame.mMetaData, pBuffHeader->pPlatformPrivate);

#endif

CAMHAL_LOGDB("Captured Frames: %d", mCapturedFrames);

mCapturedFrames--;

#ifdef CAMERAHAL_USE_RAW_IMAGE_SAVING

if (mYuvCapture) {

struct timeval timeStampUsec;

gettimeofday(&timeStampUsec, NULL);

time_t saveTime;

time(&saveTime);

const struct tm * const timeStamp = gmtime(&saveTime);

char filename[256];

snprintf(filename,256, "%s/yuv_%d_%d_%d_%lu.yuv",

kYuvImagesOutputDirPath,

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec);

const status_t saveBufferStatus = saveBufferToFile(((CameraBuffer*)pBuffHeader->pAppPrivate)->mapped,

pBuffHeader->nFilledLen, filename);

if (saveBufferStatus != OK) {

CAMHAL_LOGE("ERROR: %d, while saving yuv!", saveBufferStatus);

} else {

CAMHAL_LOGD("yuv_%d_%d_%d_%lu.yuv successfully saved in %s",

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec,

kYuvImagesOutputDirPath);

}

}

#endif

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

else if (pBuffHeader->nOutputPortIndex == OMX_CAMERA_PORT_VIDEO_OUT_VIDEO) {

typeOfFrame = CameraFrame::RAW_FRAME;

pPortParam->mImageType = typeOfFrame;

{

Mutex::Autolock lock(mLock);

if( ( CAPTURE_ACTIVE & state ) != CAPTURE_ACTIVE ) {

goto EXIT;

}

}

CAMHAL_LOGD("RAW buffer done on video port, length = %d", pBuffHeader->nFilledLen);

mask = (unsigned int) CameraFrame::RAW_FRAME;

#ifdef CAMERAHAL_USE_RAW_IMAGE_SAVING

if ( mRawCapture ) {

struct timeval timeStampUsec;

gettimeofday(&timeStampUsec, NULL);

time_t saveTime;

time(&saveTime);

const struct tm * const timeStamp = gmtime(&saveTime);

char filename[256];

snprintf(filename,256, "%s/raw_%d_%d_%d_%lu.raw",

kRawImagesOutputDirPath,

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec);

const status_t saveBufferStatus = saveBufferToFile( ((CameraBuffer*)pBuffHeader->pAppPrivate)->mapped,

pBuffHeader->nFilledLen, filename);

if (saveBufferStatus != OK) {

CAMHAL_LOGE("ERROR: %d , while saving raw!", saveBufferStatus);

} else {

CAMHAL_LOGD("raw_%d_%d_%d_%lu.raw successfully saved in %s",

timeStamp->tm_hour,

timeStamp->tm_min,

timeStamp->tm_sec,

timeStampUsec.tv_usec,

kRawImagesOutputDirPath);

stat = sendCallBacks(cameraFrame, pBuffHeader, mask, pPortParam);

}

}

#endif

} else {

CAMHAL_LOGEA("Frame received for non-(preview/capture/measure) port. This is yet to be supported");

goto EXIT;

}

if ( NO_ERROR != stat )

{

CameraBuffer *camera_buffer;

camera_buffer = (CameraBuffer *)pBuffHeader->pAppPrivate;

CAMHAL_LOGDB("sendFrameToSubscribers error: %d", stat);

returnFrame(camera_buffer, typeOfFrame);

}

return eError;

EXIT:

CAMHAL_LOGEB("Exiting function %s because of ret %d eError=%x", __FUNCTION__, stat, eError);

if ( NO_ERROR != stat )

{

if ( NULL != mErrorNotifier )

{

mErrorNotifier->errorNotify(CAMERA_ERROR_UNKNOWN);

}

}

return eError;

}
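To recap the branches above: the callback first checks which OMX output port produced the buffer. It then picks the frame type and the subscriber mask handed to `sendCallBacks`. The sketch below models only that classification step, written as a pure function. The enum bit values, `FrameInfo`, `Dispatch` and `classify` are hypothetical names introduced here. The state checks (`PREVIEW_ACTIVE`, `CAPTURE_ACTIVE`), bracketing, EXIF setup and buffer bookkeeping are deliberately left out. The literal `"jpeg"` is the value of `CameraParameters::PIXEL_FORMAT_JPEG`. `ColorFormat::Unused` stands for `OMX_COLOR_FormatUnused`, which OpenMAX IL uses on ports that carry compressed data, so that branch presumably delivers an already-encoded image.

```cpp
#include <cassert>
#include <cstring>

// Illustrative bit values; the TI HAL's real CameraFrame::FrameType constants differ.
enum FrameMask : unsigned {
    PREVIEW_FRAME_SYNC = 1u << 0,
    SNAPSHOT_FRAME     = 1u << 1,
    VIDEO_FRAME_SYNC   = 1u << 2,
    FRAME_DATA_SYNC    = 1u << 3,
    IMAGE_FRAME        = 1u << 4,
    RAW_FRAME          = 1u << 5,
};

enum class Port { Preview, Measurement, Image, Video, Other };
enum class ColorFormat { Unused, CbYCrY, Other };

// Hypothetical snapshot of the adapter state that the callback consults.
struct FrameInfo {
    Port port = Port::Other;
    bool waitingForSnapshot = false;                // mWaitingForSnapshot
    int capturedFrames = 0;                         // mCapturedFrames
    bool snapshotView = false;                      // ancillary eCameraView is a *_Snap view
    bool videoMode = false;                         // mCapMode == VIDEO_MODE
    bool recording = false;                         // mRecording
    ColorFormat imageFormat = ColorFormat::Unused;  // pPortParam->mColorFormat
    const char *clientPictureFormat = nullptr;      // mPictureFormatFromClient
};

struct Dispatch {
    unsigned mask = 0;          // which subscribers get the frame (0 = none)
    bool encodeToJpeg = false;  // mirrors the ENCODE_RAW_YUV422I_TO_JPEG quirk
};

Dispatch classify(const FrameInfo &f) {
    Dispatch d;
    switch (f.port) {
    case Port::Preview:
        // While a snapshot is pending, a preview-port buffer doubles as the snapshot.
        if (f.waitingForSnapshot && f.capturedFrames > 0 &&
            (f.snapshotView || f.videoMode)) {
            d.mask = SNAPSHOT_FRAME;
        } else {
            d.mask = PREVIEW_FRAME_SYNC;
        }
        if (f.recording) {
            d.mask |= VIDEO_FRAME_SYNC;  // the same buffer also goes to the encoder
        }
        break;
    case Port::Measurement:
        d.mask = FRAME_DATA_SYNC;
        break;
    case Port::Image:
        if (f.imageFormat == ColorFormat::Unused) {
            d.mask = IMAGE_FRAME;
        } else if (f.imageFormat == ColorFormat::CbYCrY &&
                   (f.clientPictureFormat == nullptr ||
                    std::strcmp(f.clientPictureFormat, "jpeg") == 0)) {
            d.mask = IMAGE_FRAME;        // UYVY data that still has to become a JPEG
            d.encodeToJpeg = true;
        } else {
            d.mask = RAW_FRAME;
        }
        break;
    case Port::Video:
        d.mask = RAW_FRAME;
        break;
    case Port::Other:
        break;  // the HAL logs "yet to be supported" and jumps to EXIT
    }
    return d;
}
```

Keeping the decision side-effect free makes each case easy to check on its own. For example, a preview buffer that arrives while recording yields `PREVIEW_FRAME_SYNC | VIDEO_FRAME_SYNC`. That matches the original, where the same buffer bumps both `mFramesWithDisplay` and `mFramesWithEncoder`.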

That's all for now.

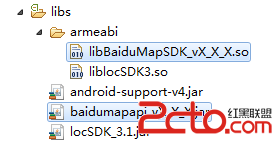

Android之旅十八 百度地圖環境搭建

Android之旅十八 百度地圖環境搭建

在android中使用百度地圖,我們可以先看看百度地圖相應的SDK信息:http://developer.baidu.com/map/index.php?title=an

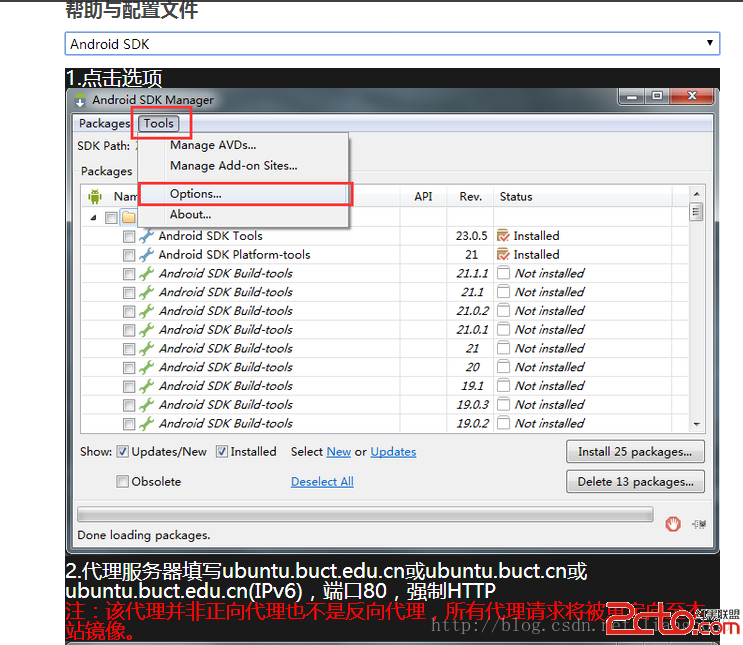

解決國內android sdk無法更新,google不能的簡單辦法

解決國內android sdk無法更新,google不能的簡單辦法

在國內屏蔽了許多外國網站,連google 和android都屏蔽了,做程序員的就苦了!不過車到山前必有路,我們也有我們的辦法!首先要先進去google等的一系列網站,那麼

Android高仿微信聊天界面代碼分享

Android高仿微信聊天界面代碼分享

微信聊天現在非常火,是因其界面漂亮嗎,哈哈,也許吧。微信每條消息都帶有一個氣泡,非常迷人,看起來感覺實現起來非常難,其實並不難。下面小編給大家分享實現代碼。先給大家展示下

Android學會屬性動畫的基本用法(下),Interpolator 與ViewPropertyAnimator的用法

Android學會屬性動畫的基本用法(下),Interpolator 與ViewPropertyAnimator的用法

Interpolator的用法Interpolator這個東西很難進行翻譯,直譯過來的話是補間器的意思,它的主要作用是可以控制動畫的變化速率,比如去實現一種非線性運動的動