Editor: About Android Programming

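Android Camera Execution Flow

Part 1

Because Camera communicates over binder, it must first register its service with ServiceManager so that clients can look it up later. Where does that happen? In frameworks\base\media\mediaserver\Main_MediaServer.cpp there is a main function that registers the media services, and that is where CameraService registers itself. The relevant code is: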

int main(int argc, char** argv)

{

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm = defaultServiceManager();

LOGI("ServiceManager: %p", sm.get());

AudioFlinger::instantiate();

MediaPlayerService::instantiate();

CameraService::instantiate();

AudioPolicyService::instantiate();

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

However, the CameraService source files contain no instantiate() function. Where is it? It comes from one of CameraService's parent classes, BinderService.

CameraService is declared in frameworks/base/services/camera/libcameraservice/CameraService.h:

class CameraService :

public BinderService<CameraService>,

public BnCameraService

{

class Client;

friend class BinderService<CameraService>;

public:

static char const* getServiceName() { return "media.camera"; }

.....

.....

}

As the declaration shows, CameraService inherits from BinderService, so CameraService::instantiate(); actually calls the instantiate() defined in BinderService.

BinderService is defined in frameworks/base/include/binder/BinderService.h:

// ---------------------------------------------------------------------------

namespace android {

template<typename SERVICE>

class BinderService

{

public:

static status_t publish() {

sp<IServiceManager> sm(defaultServiceManager());

return sm->addService(String16(SERVICE::getServiceName()), new SERVICE());

}

static void publishAndJoinThreadPool() {

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm(defaultServiceManager());

sm->addService(String16(SERVICE::getServiceName()), new SERVICE());

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

static void instantiate() { publish(); }

static status_t shutdown() {

return NO_ERROR;

}

};

}; // namespace android

// ---------------------------------------------------------------------------

The publish() function is where CameraService gets registered. It refers to SERVICE, which the source declares as follows:

template<typename SERVICE>

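This means SERVICE is a template type parameter. The class being instantiated here is BinderService<CameraService>, so SERVICE can be read as CameraService: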

return sm->addService(String16(CameraService::getServiceName()), new CameraService());

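With that, the camera service is registered with ServiceManager and is available to clients at any time.

The main function in Main_MediaServer.cpp is launched by init.rc at boot, so the camera service is registered for binder communication as soon as the device starts up.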
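The registration pattern above can be modeled without any Android headers. The following is a minimal sketch, not the framework code: FakeServiceManager, defaultManager, PublishableService and FakeCameraService are hypothetical names that stand in for ServiceManager, defaultServiceManager(), BinderService and CameraService. It only illustrates how a static instantiate() inherited from a class template can construct and register the derived class under the name returned by getServiceName().

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical base type for anything that can be registered.
struct Service { virtual ~Service() = default; };

// Hypothetical stand-in for ServiceManager: a name -> object table.
class FakeServiceManager {
public:
    // Returns true if the name was free and the service was stored.
    bool addService(const std::string& name, std::shared_ptr<Service> svc) {
        return services_.emplace(name, std::move(svc)).second;
    }
    // Returns the registered service, or nullptr if the name is unknown.
    std::shared_ptr<Service> getService(const std::string& name) const {
        auto it = services_.find(name);
        return it == services_.end() ? nullptr : it->second;
    }
private:
    std::map<std::string, std::shared_ptr<Service>> services_;
};

// Process-wide manager, analogous in spirit to defaultServiceManager().
inline FakeServiceManager& defaultManager() {
    static FakeServiceManager sm;
    return sm;
}

// Same shape as BinderService: SERVICE is the derived class itself.
template <typename SERVICE>
class PublishableService {
public:
    static bool publish() {
        return defaultManager().addService(SERVICE::getServiceName(),
                                           std::make_shared<SERVICE>());
    }
    static void instantiate() { publish(); }
};

class FakeCameraService : public Service,
                          public PublishableService<FakeCameraService> {
public:
    static const char* getServiceName() { return "media.camera"; }
};
```

Calling FakeCameraService::instantiate() resolves to PublishableService<FakeCameraService>::instantiate(), which is why the derived class never needs to declare the function itself.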

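Part 2

With the binder service registered, the next question is how a client connects to the server and opens the camera module. Start with the camera app's source code: its onCreate() function starts a dedicated thread that opens the camera.

The camera app's source file is packages/apps/OMAPCamera/src/com/ti/omap4/android/camera/Camera.java: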

@Override

public void onCreate(Bundle icicle) {

super.onCreate(icicle);

getPreferredCameraId();

String[] defaultFocusModes = getResources().getStringArray(

R.array.pref_camera_focusmode_default_array);

mFocusManager = new FocusManager(mPreferences, defaultFocusModes);

/*

* To reduce startup time, we start the camera open and preview threads.

* We make sure the preview is started at the end of onCreate.

*/

mCameraOpenThread.start();

PreferenceInflater inflater = new PreferenceInflater(this);

PreferenceGroup group =

(PreferenceGroup) inflater.inflate(R.xml.camera_preferences);

ListPreference gbce = group.findPreference(CameraSettings.KEY_GBCE);

if (gbce != null) {

mGBCEOff = gbce.findEntryValueByEntry(getString(R.string.pref_camera_gbce_entry_off));

if (mGBCEOff == null) {

mGBCEOff = "";

}

}

ListPreference autoConvergencePreference = group.findPreference(CameraSettings.KEY_AUTO_CONVERGENCE);

if (autoConvergencePreference != null) {

mTouchConvergence = autoConvergencePreference.findEntryValueByEntry(getString(R.string.pref_camera_autoconvergence_entry_mode_touch));

if (mTouchConvergence == null) {

mTouchConvergence = "";

}

mManualConvergence = autoConvergencePreference.findEntryValueByEntry(getString(R.string.pref_camera_autoconvergence_entry_mode_manual));

if (mManualConvergence == null) {

mManualConvergence = "";

}

}

ListPreference exposure = group.findPreference(CameraSettings.KEY_EXPOSURE_MODE_MENU);

if (exposure != null) {

mManualExposure = exposure.findEntryValueByEntry(getString(R.string.pref_camera_exposuremode_entry_manual));

if (mManualExposure == null) {

mManualExposure = "";

}

}

ListPreference temp = group.findPreference(CameraSettings.KEY_MODE_MENU);

if (temp != null) {

mTemporalBracketing = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_temporal_bracketing));

if (mTemporalBracketing == null) {

mTemporalBracketing = "";

}

mExposureBracketing = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_exp_bracketing));

if (mExposureBracketing == null) {

mExposureBracketing = "";

}

mZoomBracketing = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_zoom_bracketing));

if (mZoomBracketing == null) {

mZoomBracketing = "";

}

mHighPerformance = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_hs));

if (mHighPerformance == null) {

mHighPerformance = "";

}

mHighQuality = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_hq));

if (mHighQuality == null) {

mHighQuality = "";

}

mHighQualityZsl = temp.findEntryValueByEntry(getString(R.string.pref_camera_mode_entry_zsl));

if (mHighQualityZsl == null) {

mHighQualityZsl = "";

}

}

getPreferredCameraId();

mFocusManager = new FocusManager(mPreferences,

defaultFocusModes);

mTouchManager = new TouchManager();

mIsImageCaptureIntent = isImageCaptureIntent();

setContentView(R.layout.camera);

if (mIsImageCaptureIntent) {

mReviewDoneButton = (Rotatable) findViewById(R.id.btn_done);

mReviewCancelButton = (Rotatable) findViewById(R.id.btn_cancel);

findViewById(R.id.btn_cancel).setVisibility(View.VISIBLE);

} else {

mThumbnailView = (RotateImageView) findViewById(R.id.thumbnail);

mThumbnailView.enableFilter(false);

mThumbnailView.setVisibility(View.VISIBLE);

}

mRotateDialog = new RotateDialogController(this, R.layout.rotate_dialog);

mCaptureLayout = getString(R.string.pref_camera_capture_layout_default);

mPreferences.setLocalId(this, mCameraId);

CameraSettings.upgradeLocalPreferences(mPreferences.getLocal());

mNumberOfCameras = CameraHolder.instance().getNumberOfCameras();

mQuickCapture = getIntent().getBooleanExtra(EXTRA_QUICK_CAPTURE, false);

// we need to reset exposure for the preview

resetExposureCompensation();

Util.enterLightsOutMode(getWindow());

// don't set mSurfaceHolder here. We have it set ONLY within

// surfaceChanged / surfaceDestroyed, other parts of the code

// assume that when it is set, the surface is also set.

SurfaceView preview = (SurfaceView) findViewById(R.id.camera_preview);

SurfaceHolder holder = preview.getHolder();

holder.addCallback(this);

s3dView = new S3DViewWrapper(holder);

holder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

// Make sure camera device is opened.

try {

mCameraOpenThread.join();

mCameraOpenThread = null;

if (mOpenCameraFail) {

Util.showErrorAndFinish(this, R.string.cannot_connect_camera);

return;

} else if (mCameraDisabled) {

Util.showErrorAndFinish(this, R.string.camera_disabled);

return;

}

} catch (InterruptedException ex) {

// ignore

}

mCameraPreviewThread.start();

if (mIsImageCaptureIntent) {

setupCaptureParams();

} else {

mModePicker = (ModePicker) findViewById(R.id.mode_picker);

mModePicker.setVisibility(View.VISIBLE);

mModePicker.setOnModeChangeListener(this);

mModePicker.setCurrentMode(ModePicker.MODE_CAMERA);

}

mZoomControl = (ZoomControl) findViewById(R.id.zoom_control);

mOnScreenIndicators = (Rotatable) findViewById(R.id.on_screen_indicators);

mLocationManager = new LocationManager(this, this);

// Wait until the camera settings are retrieved.

synchronized (mCameraPreviewThread) {

try {

mCameraPreviewThread.wait();

} catch (InterruptedException ex) {

// ignore

}

}

// Do this after starting preview because it depends on camera

// parameters.

initializeIndicatorControl();

mCameraSound = new CameraSound();

// Make sure preview is started.

try {

mCameraPreviewThread.join();

} catch (InterruptedException ex) {

// ignore

}

mCameraPreviewThread = null;

}

Now look at mCameraOpenThread:

Thread mCameraOpenThread = new Thread(new Runnable() {

public void run() {

try {

mCameraDevice = Util.openCamera(Camera.this, mCameraId);

} catch (CameraHardwareException e) {

mOpenCameraFail = true;

} catch (CameraDisabledException e) {

mCameraDisabled = true;

}

}

});

Next, follow Util.openCamera. The Util class is defined in packages/apps/OMAPCamera/src/com/ti/omap4/android/camera/Util.java:

public static android.hardware.Camera openCamera(Activity activity, int cameraId)

throws CameraHardwareException, CameraDisabledException {

// Check if device policy has disabled the camera.

DevicePolicyManager dpm = (DevicePolicyManager) activity.getSystemService(

Context.DEVICE_POLICY_SERVICE);

if (dpm.getCameraDisabled(null)) {

throw new CameraDisabledException();

}

try {

return CameraHolder.instance().open(cameraId);

} catch (CameraHardwareException e) {

// In eng build, we throw the exception so that test tool

// can detect it and report it

if ("eng".equals(Build.TYPE)) {

throw new RuntimeException("openCamera failed", e);

} else {

throw e;

}

}

}

This brings in CameraHolder, which opens the camera through a single shared instance.

CameraHolder is defined in packages/apps/OMAPCamera/src/com/ti/omap4/android/camera/CameraHolder.java:

public synchronized android.hardware.Camera open(int cameraId)

throws CameraHardwareException {

Assert(mUsers == 0);

if (mCameraDevice != null && mCameraId != cameraId) {

mCameraDevice.release();

mCameraDevice = null;

mCameraId = -1;

}

if (mCameraDevice == null) {

try {

Log.v(TAG, "open camera " + cameraId);

mCameraDevice = android.hardware.Camera.open(cameraId);

mCameraId = cameraId;

} catch (RuntimeException e) {

Log.e(TAG, "fail to connect Camera", e);

throw new CameraHardwareException(e);

}

mParameters = mCameraDevice.getParameters();

} else {

try {

mCameraDevice.reconnect();

} catch (IOException e) {

Log.e(TAG, "reconnect failed.");

throw new CameraHardwareException(e);

}

mCameraDevice.setParameters(mParameters);

}

++mUsers;

mHandler.removeMessages(RELEASE_CAMERA);

mKeepBeforeTime = 0;

return mCameraDevice;

}
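The caching behaviour of CameraHolder.open() (reuse the device when the same id is requested, release it first when a different id is requested) can be sketched as a small state machine. This is an illustrative model only: DeviceHolder, its release() method and the releases() counter are hypothetical, and no real camera is involved.

```cpp
#include <cassert>

// Hypothetical model of a holder that caches at most one opened device.
class DeviceHolder {
public:
    // Returns true when a fresh open was needed, false when the cached
    // device was reused (the "reconnect" branch in the article's code).
    bool open(int cameraId) {
        assert(users_ == 0);                 // only one user at a time
        if (openId_ != kNone && openId_ != cameraId) {
            openId_ = kNone;                 // a different camera is cached:
            ++releases_;                     // release it before reopening
        }
        const bool fresh = (openId_ == kNone);
        if (fresh) {
            openId_ = cameraId;              // stands in for Camera.open(id)
        }
        ++users_;
        return fresh;
    }

    // The user is done; the device itself stays cached for a later open().
    void release() {
        assert(users_ == 1);
        --users_;
    }

    // Number of times a cached device had to be dropped.
    int releases() const { return releases_; }

private:
    static constexpr int kNone = -1;
    int openId_ = kNone;
    int users_ = 0;
    int releases_ = 0;
};
```

Opening camera 0 twice in a row (with a release() in between) reports a fresh open only the first time; asking for camera 1 afterwards forces the cached camera 0 to be dropped.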

From CameraHolder.open() the call enters the framework layer, invoking the open method of the Camera class in frameworks\base\core\java\android\hardware\Camera.java:

public static Camera open(int cameraId) {

return new Camera(cameraId);

}

open() calls the Camera constructor, so look at the constructor next:

Camera(int cameraId) {

mShutterCallback = null;

mRawImageCallback = null;

mJpegCallback = null;

mPreviewCallback = null;

mPostviewCallback = null;

mZoomListener = null;

Looper looper;

if ((looper = Looper.myLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else if ((looper = Looper.getMainLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else {

mEventHandler = null;

}

native_setup(new WeakReference<Camera>(this), cameraId);

}

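With native_setup() we finally reach JNI.

Part 3

The camera JNI file is frameworks/base/core/jni/android_hardware_Camera.cpp.

The Camera constructor above calls native_setup(new WeakReference<Camera>(this), cameraId);

So where is native_setup() defined?

That same file, android_hardware_Camera.cpp, contains the following method table.

Each entry pairs a Java native method name and its JNI signature with a C++ function pointer, and it is this table that ties native_setup to android_hardware_Camera_native_setup: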

static JNINativeMethod camMethods[] = {

{ "getNumberOfCameras",

"()I",

(void *)android_hardware_Camera_getNumberOfCameras },

{ "getCameraInfo",

"(ILandroid/hardware/Camera$CameraInfo;)V",

(void*)android_hardware_Camera_getCameraInfo },

{ "native_setup",

"(Ljava/lang/Object;I)V",

(void*)android_hardware_Camera_native_setup },

{ "native_release",

"()V",

(void*)android_hardware_Camera_release },

{ "setPreviewDisplay",

"(Landroid/view/Surface;)V",

(void *)android_hardware_Camera_setPreviewDisplay },

{ "setPreviewTexture",

"(Landroid/graphics/SurfaceTexture;)V",

(void *)android_hardware_Camera_setPreviewTexture },

{ "startPreview",

"()V",

(void *)android_hardware_Camera_startPreview },

{ "_stopPreview",

"()V",

(void *)android_hardware_Camera_stopPreview },

{ "previewEnabled",

"()Z",

(void *)android_hardware_Camera_previewEnabled },

{ "setHasPreviewCallback",

"(ZZ)V",

(void *)android_hardware_Camera_setHasPreviewCallback },

{ "_addCallbackBuffer",

"([BI)V",

(void *)android_hardware_Camera_addCallbackBuffer },

{ "native_autoFocus",

"()V",

(void *)android_hardware_Camera_autoFocus },

{ "native_cancelAutoFocus",

"()V",

(void *)android_hardware_Camera_cancelAutoFocus },

{ "native_takePicture",

"(I)V",

(void *)android_hardware_Camera_takePicture },

{ "native_setParameters",

"(Ljava/lang/String;)V",

(void *)android_hardware_Camera_setParameters },

{ "native_getParameters",

"()Ljava/lang/String;",

(void *)android_hardware_Camera_getParameters },

{ "reconnect",

"()V",

(void*)android_hardware_Camera_reconnect },

{ "lock",

"()V",

(void*)android_hardware_Camera_lock },

{ "unlock",

"()V",

(void*)android_hardware_Camera_unlock },

{ "startSmoothZoom",

"(I)V",

(void *)android_hardware_Camera_startSmoothZoom },

{ "stopSmoothZoom",

"()V",

(void *)android_hardware_Camera_stopSmoothZoom },

{ "setDisplayOrientation",

"(I)V",

(void *)android_hardware_Camera_setDisplayOrientation },

{ "_startFaceDetection",

"(I)V",

(void *)android_hardware_Camera_startFaceDetection },

{ "_stopFaceDetection",

"()V",

(void *)android_hardware_Camera_stopFaceDetection},

};
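The idea behind such a table is that a native method is identified by its name together with its signature string. The sketch below models that lookup in plain C++ so it builds without jni.h. NativeMethod, kMethods, findNative and the two fake functions are hypothetical; the real JNINativeMethod stores a void* rather than a typed function pointer, and the matching is done by the JNI runtime when the table is registered, not by user code.

```cpp
#include <cassert>
#include <cstring>

// Same three-field idea as JNINativeMethod, redeclared for this sketch.
// A typed function pointer is used instead of void* to keep it portable.
struct NativeMethod {
    const char* name;       // Java-side method name
    const char* signature;  // JNI type signature, e.g. "(I)V"
    int (*fn)();            // native implementation
};

// Hypothetical native implementations that just return a marker value.
static int fakeNativeSetup() { return 1; }
static int fakeRelease() { return 2; }

static const NativeMethod kMethods[] = {
    { "native_setup",   "(Ljava/lang/Object;I)V", fakeNativeSetup },
    { "native_release", "()V",                    fakeRelease },
};

// Finds the entry whose name AND signature both match, or nullptr.
const NativeMethod* findNative(const char* name, const char* signature) {
    for (const NativeMethod& m : kMethods) {
        if (std::strcmp(m.name, name) == 0 &&
            std::strcmp(m.signature, signature) == 0) {
            return &m;
        }
    }
    return nullptr;
}
```

In the signature "(Ljava/lang/Object;I)V", the part in parentheses lists the parameters (an Object reference and an int) and the trailing V is the void return type, which matches the Java call native_setup(WeakReference, int).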

So the call native_setup(new WeakReference<Camera>(this), cameraId); is in effect a call to the following function, android_hardware_Camera_native_setup:

// connect to camera service

static void android_hardware_Camera_native_setup(JNIEnv *env, jobject thiz,

jobject weak_this, jint cameraId)

{

sp<Camera> camera = Camera::connect(cameraId);

if (camera == NULL) {

jniThrowRuntimeException(env, "Fail to connect to camera service");

return;

}

// make sure camera hardware is alive

if (camera->getStatus() != NO_ERROR) {

jniThrowRuntimeException(env, "Camera initialization failed");

return;

}

jclass clazz = env->GetObjectClass(thiz);

if (clazz == NULL) {

jniThrowRuntimeException(env, "Can't find android/hardware/Camera");

return;

}

// We use a weak reference so the Camera object can be garbage collected.

// The reference is only used as a proxy for callbacks.

sp<JNICameraContext> context = new JNICameraContext(env, weak_this, clazz, camera);

context->incStrong(thiz);

camera->setListener(context);

// save context in opaque field

env->SetIntField(thiz, fields.context, (int)context.get());

}

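In this JNI function we find the client side of the camera's client/server architecture: it calls connect to send a connection request to the server. JNICameraContext is a listener class that handles the data and messages delivered by the lower-level camera callbacks.

Now look at what the client-side connect function does. It is defined in frameworks/base/libs/camera/Camera.cpp: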

sp<Camera> Camera::connect(int cameraId)

{

LOGV("connect");

sp<Camera> c = new Camera();

const sp<ICameraService>& cs = getCameraService();

if (cs != 0) {

c->mCamera = cs->connect(c, cameraId);

}

if (c->mCamera != 0) {

c->mCamera->asBinder()->linkToDeath(c);

c->mStatus = NO_ERROR;

} else {

c.clear();

}

return c;

}

The line const sp<ICameraService>& cs = getCameraService(); obtains the CameraService interface.

Step into getCameraService():

// establish binder interface to camera service

const sp<ICameraService>& Camera::getCameraService()

{

Mutex::Autolock _l(mLock);

if (mCameraService.get() == 0) {

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> binder;

do {

binder = sm->getService(String16("media.camera"));

if (binder != 0)

break;

LOGW("CameraService not published, waiting...");

usleep(500000); // 0.5 s

} while(true);

if (mDeathNotifier == NULL) {

mDeathNotifier = new DeathNotifier();

}

binder->linkToDeath(mDeathNotifier);

mCameraService = interface_cast<ICameraService>(binder);

}

LOGE_IF(mCameraService==0, "no CameraService!?");

return mCameraService;

}
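The polling loop in getCameraService() keeps asking ServiceManager until the service has been published. A bounded variant of that idea can be sketched as follows. Note the differences from the article's code, which are assumptions of this sketch: the lookup and the wait are injected as callables (waitForService, lookup and wait are hypothetical names) so the logic can be exercised without sleeping, and the loop gives up after maxAttempts instead of retrying forever.

```cpp
#include <cassert>
#include <functional>

// lookup: hypothetical stand-in for sm->getService(...); returns a non-null
//         pointer once the service has been published.
// wait:   called after every failed attempt (the article's code sleeps 0.5 s).
// Returns the service pointer, or nullptr if maxAttempts lookups all failed.
const void* waitForService(const std::function<const void*()>& lookup,
                           const std::function<void()>& wait,
                           int maxAttempts) {
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
        if (const void* svc = lookup()) {
            return svc;   // published: stop polling
        }
        wait();           // not published yet: back off, then retry
    }
    return nullptr;
}
```

In the original function the result is also cached in mCameraService, so the polling cost is paid only on the first call.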

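The CameraService handle is obtained through binder: mCameraService is an ICameraService interface through which the client reaches the CameraService that was registered with ServiceManager earlier.

Back in sp<Camera> Camera::connect(int cameraId):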

c->mCamera = cs->connect(c, cameraId);

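That is, it runs the server's connect() function, which returns an ICamera object that is assigned to Camera's mCamera. What the server-side connect() returns is an instance of CameraService's inner class, Client.

The server's connect() function is defined in frameworks/base/services/camera/libcameraservice/CameraService.cpp: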

sp<ICamera> CameraService::connect(

const sp<ICameraClient>& cameraClient, int cameraId) {

int callingPid = getCallingPid();

sp<CameraHardwareInterface> hardware = NULL;

LOG1("CameraService::connect E (pid %d, id %d)", callingPid, cameraId);

if (!mModule) {

LOGE("Camera HAL module not loaded");

return NULL;

}

sp<Client> client;

if (cameraId < 0 || cameraId >= mNumberOfCameras) {

LOGE("CameraService::connect X (pid %d) rejected (invalid cameraId %d).",

callingPid, cameraId);

return NULL;

}

char value[PROPERTY_VALUE_MAX];

property_get("sys.secpolicy.camera.disabled", value, "0");

if (strcmp(value, "1") == 0) {

// Camera is disabled by DevicePolicyManager.

LOGI("Camera is disabled. connect X (pid %d) rejected", callingPid);

return NULL;

}

Mutex::Autolock lock(mServiceLock);

if (mClient[cameraId] != 0) {

client = mClient[cameraId].promote();

if (client != 0) {

if (cameraClient->asBinder() == client->getCameraClient()->asBinder()) {

LOG1("CameraService::connect X (pid %d) (the same client)",

callingPid);

return client;

} else {

LOGW("CameraService::connect X (pid %d) rejected (existing client).",

callingPid);

return NULL;

}

}

mClient[cameraId].clear();

}

if (mBusy[cameraId]) {

LOGW("CameraService::connect X (pid %d) rejected"

" (camera %d is still busy).", callingPid, cameraId);

return NULL;

}

struct camera_info info;

if (mModule->get_camera_info(cameraId, &info) != OK) {

LOGE("Invalid camera id %d", cameraId);

return NULL;

}

char camera_device_name[10];

snprintf(camera_device_name, sizeof(camera_device_name), "%d", cameraId);

hardware = new CameraHardwareInterface(camera_device_name);

if (hardware->initialize(&mModule->common) != OK) {

hardware.clear();

return NULL;

}

client = new Client(this, cameraClient, hardware, cameraId, info.facing, callingPid);

mClient[cameraId] = client;

LOG1("CameraService::connect X");

return client;

}
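The per-camera bookkeeping in CameraService::connect() (mClient[cameraId] holds a weak reference that is promoted, compared and possibly cleared) can be approximated with std::weak_ptr. This is a simplified sketch, not the service code: CameraSlot and the integer ownerId are hypothetical, the mBusy check is left out, and the real code compares binder objects rather than integers.

```cpp
#include <cassert>
#include <memory>

// Hypothetical client record; ownerId stands in for the caller's identity.
struct Client {
    int ownerId;
};

// One slot per camera, holding only a weak reference to the current client.
class CameraSlot {
public:
    // Returns the client for ownerId, or nullptr if a different client that
    // is still alive already holds the camera.
    std::shared_ptr<Client> connect(int ownerId) {
        if (std::shared_ptr<Client> existing = slot_.lock()) {
            if (existing->ownerId == ownerId) {
                return existing;       // same client: hand back its object
            }
            return nullptr;            // another live client: reject
        }
        // Slot was empty or its client has already been destroyed.
        auto fresh = std::make_shared<Client>(Client{ownerId});
        slot_ = fresh;
        return fresh;
    }

private:
    std::weak_ptr<Client> slot_;
};
```

Because the slot does not keep the client alive, the camera becomes available again as soon as the last strong reference to the previous client goes away, which mirrors the promote() and clear() steps in the function above.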

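connect() instantiates the camera HAL interface object, hardware, and hardware->initialize() then enters the HAL layer to open the camera driver.

initialize() is defined in CameraHardwareInterface, in frameworks/base/services/camera/libcameraservice/CameraHardwareInterface.h.

The code is as follows: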

status_t initialize(hw_module_t *module)

{

LOGI("Opening camera %s", mName.string());

int rc = module->methods->open(module, mName.string(),

(hw_device_t **)&mDevice);

if (rc != OK) {

LOGE("Could not open camera %s: %d", mName.string(), rc);

return rc;

}

#ifdef OMAP_ENHANCEMENT_CPCAM

initHalPreviewWindow(&mHalPreviewWindow);

initHalPreviewWindow(&mHalTapin);

initHalPreviewWindow(&mHalTapout);

#else

initHalPreviewWindow();

#endif

return rc;

}

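It is the module->methods->open() call here that actually opens the camera device.

The module argument is &mModule->common, passed in by CameraService::connect(), and mModule is declared as a member of CameraService: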

class CameraService :

public BinderService<CameraService>,

public BnCameraService

{

class Client : public BnCamera

{

public:

......

private:

.....

};

camera_module_t *mModule;

};

To understand the rest of the flow we also need the definition of camera_module_t.

camera_module_t is defined in hardware/libhardware/include/hardware/camera.h:

typedef struct camera_module {

hw_module_t common;

int (*get_number_of_cameras)(void);

int (*get_camera_info)(int camera_id, struct camera_info *info);

} camera_module_t;

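It contains the get_number_of_cameras and get_camera_info methods, which are used to query camera information.

The hw_module_t common; member deserves particular attention, because the device is opened through the open() method reached via hw_module_t's methods pointer.

hw_module_t is defined in hardware/libhardware/include/hardware/hardware.h: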

struct hw_module_t;

struct hw_module_methods_t;

struct hw_device_t;

/**

* Every hardware module must have a data structure named HAL_MODULE_INFO_SYM

* and the fields of this data structure must begin with hw_module_t

* followed by module specific information.

*/

typedef struct hw_module_t {

/** tag must be initialized to HARDWARE_MODULE_TAG */

uint32_t tag;

/** major version number for the module */

uint16_t version_major;

/** minor version number of the module */

uint16_t version_minor;

/** Identifier of module */

const char *id;

/** Name of this module */

const char *name;

/** Author/owner/implementor of the module */

const char *author;

/** Modules methods */

struct hw_module_methods_t* methods;

/** module's dso */

void* dso;

/** padding to 128 bytes, reserved for future use */

uint32_t reserved[32-7];

} hw_module_t;

Likewise, here is the definition of the hw_module_methods_t struct:

typedef struct hw_module_methods_t {

/** Open a specific device */

int (*open)(const struct hw_module_t* module, const char* id,

struct hw_device_t** device);

} hw_module_methods_t;

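hw_module_methods_t contains a single method, open(), which is used to open the camera driver and interact with the hardware layer.

At this point the overall picture is easy to see.

The camera call flow in Android can be divided into the following layers: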
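The dispatch through module->methods->open() is ordinary C-style polymorphism: the module carries a pointer to a table of function pointers, and the vendor fills that table in. The sketch below reproduces the shape with hypothetical types (Module, ModuleMethods, Device) and a fake vendor implementation that accepts only the ids "0" and "1". It does not use the real hardware.h structures.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical device handle returned by open().
struct Device {
    int cameraIndex;
};

struct Module;

// Same idea as hw_module_methods_t: a table with one function pointer.
struct ModuleMethods {
    int (*open)(const Module* module, const char* id, Device** device);
};

// Same idea as hw_module_t, reduced to the fields this sketch needs.
struct Module {
    const char* name;
    ModuleMethods* methods;
};

// Hypothetical vendor implementation: knows two cameras, "0" and "1".
static int fakeCameraOpen(const Module*, const char* id, Device** device) {
    static Device devices[2] = { {0}, {1} };
    if (std::strcmp(id, "0") == 0) { *device = &devices[0]; return 0; }
    if (std::strcmp(id, "1") == 0) { *device = &devices[1]; return 0; }
    *device = nullptr;
    return -1;   // unknown camera id
}

static ModuleMethods fakeMethods = { fakeCameraOpen };
static Module fakeModule = { "fake camera module", &fakeMethods };

// Caller side, shaped like CameraHardwareInterface::initialize(): it knows
// only the generic module type and dispatches through the method table.
int openCamera(Module* module, const char* id, Device** out) {
    return module->methods->open(module, id, out);
}
```

The caller passes the camera id as a string, just as CameraService::connect() formats cameraId with snprintf before constructing CameraHardwareInterface.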

Package->Framework->JNI->Camera(cpp)--(binder)-->CameraService->Camera HAL->Camera Driver

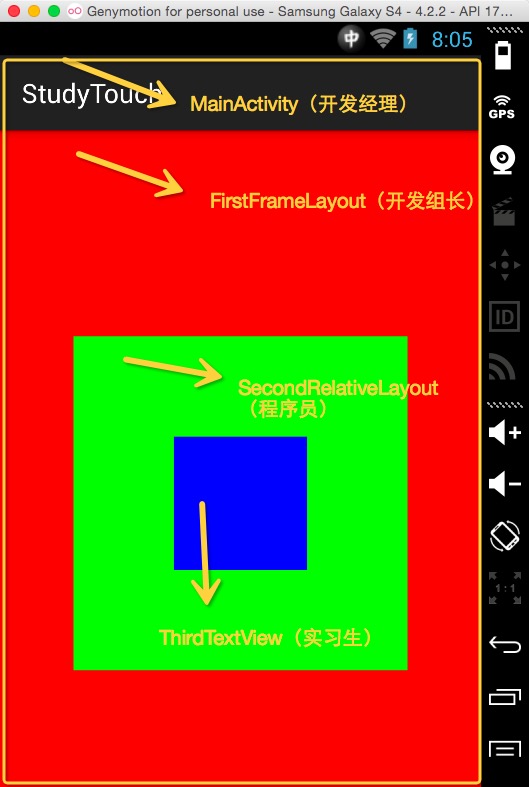
