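Android uses Binder as its inter-process communication (IPC) mechanism. Its typical use is Android's client/server (C/S) architecture, i.e. client and service. This architecture has the following advantages: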

1. Extensibility

2. Efficiency: one service can serve multiple clients

3. Safety: the client and the service run in different processes, so even if a client fails, the service keeps running

Today we use media_server as an example to analyze the Binder communication mechanism.

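First, keep one concept in mind: Android has a master service manager called servicemanager, and mediaserver is responsible for adding a number of multimedia services to it. Seen from this angle, mediaserver is a client of servicemanager.

In main_mediaserver.cpp: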

int main(int argc, char** argv)

{

sp<ProcessState> proc(ProcessState::self());// 1. create the ProcessState object

sp<IServiceManager> sm = defaultServiceManager();// 2. obtain BpServiceManager(BpBinder(0))

LOGI("ServiceManager: %p", sm.get());

AudioFlinger::instantiate();// 3. create the AudioFlinger instance and register it via sm->addService()

MediaPlayerService::instantiate();

CameraService::instantiate();

AudioPolicyService::instantiate();

ProcessState::self()->startThreadPool();// 4. spawns a pool thread, which in turn calls joinThreadPool()

IPCThreadState::self()->joinThreadPool();// 5. loops in talkWithDriver(), serving the services in this server

/* So two threads are now talking to the Binder driver and working for all services in this server: they pick up data sent by each service's clients at any time and process it. */

}
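The division of labor at the end of main() (startThreadPool() spawning one pool thread, then the main thread itself entering joinThreadPool()) can be illustrated with ordinary C++ threads. This is a minimal sketch under stated assumptions: a blocking queue stands in for the Binder driver, and RequestQueue, joinPool and startPool are hypothetical names, not libbinder APIs.

```cpp
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical stand-in for the Binder driver: a blocking queue of incoming requests.
class RequestQueue {
public:
    void push(std::function<void()> req) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            q_.push(std::move(req));
        }
        cv_.notify_one();
    }

    // No more requests will arrive; wakes every waiting looper.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mu_);
            closed_ = true;
        }
        cv_.notify_all();
    }

    // Blocks until a request is available; returns nullopt once closed and drained.
    std::optional<std::function<void()>> pop() {
        std::unique_lock<std::mutex> lock(mu_);
        cv_.wait(lock, [this] { return closed_ || !q_.empty(); });
        if (q_.empty()) return std::nullopt;
        std::function<void()> req = std::move(q_.front());
        q_.pop();
        return req;
    }

private:
    std::mutex mu_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> q_;
    bool closed_ = false;
};

// Plays the role of joinThreadPool(): take requests and handle them until told to stop.
void joinPool(RequestQueue& requests) {
    while (auto req = requests.pop()) {
        (*req)();
    }
}

// Plays the role of startThreadPool(): spawn one extra thread running the same loop.
std::thread startPool(RequestQueue& requests) {
    return std::thread(joinPool, std::ref(requests));
}
```

Both threads run the same loop and take whichever request arrives next, so neither thread is tied to a particular service or client.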

Let's start with part 1, sp<ProcessState> proc(ProcessState::self()):

This is equivalent to proc = ProcessState::self(). Here is ProcessState::self():

sp<ProcessState> ProcessState::self()

{

if (gProcess != NULL) return gProcess;// gProcess is a global defined in Static.cpp; this is the singleton pattern

AutoMutex _l(gProcessMutex);

if (gProcess == NULL) gProcess = new ProcessState;// create the ProcessState object

return gProcess;

}

Simple enough: it returns the ProcessState object. Let's look at its constructor:

ProcessState::ProcessState()

: mDriverFD(open_driver())// opens the binder driver

, mVMStart(MAP_FAILED)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

{

...

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

...

}

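The constructor opens the binder driver and then memory-maps BINDER_VM_SIZE bytes of it into the process; the driver uses this region to deliver incoming transaction data.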
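The open-then-mmap sequence in the constructor can be tried outside Android on an ordinary file. This sketch assumes a POSIX system: /dev/binder is not available off-device and its mmap behavior is driver-specific, so the hypothetical helper openAndMap maps a regular file with the same PROT_READ and MAP_PRIVATE | MAP_NORESERVE flags.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>   // mkstemp, handy for trying the helper
#include <cstring>   // memcmp, handy for trying the helper
#include <fcntl.h>
#include <sys/mman.h>
#include <unistd.h>

// Hypothetical helper mirroring the constructor: open a file descriptor, then map
// `size` bytes of it read-only and private, with the same flags as ProcessState.
// Returns MAP_FAILED on error (as mmap does); the fd is reported through outFd.
void* openAndMap(const char* path, std::size_t size, int* outFd) {
    int fd = open(path, O_RDONLY);   // ProcessState calls open_driver() here
    *outFd = fd;
    if (fd < 0) return MAP_FAILED;
    return mmap(nullptr, size, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, fd, 0);
}
```

To try it, write a few bytes to a temporary file created with mkstemp(), map it with openAndMap(), compare with memcmp(), then release it with munmap() and close().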

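2. sp<IServiceManager> sm = defaultServiceManager();

This part is very important: how well you understand it decides whether the rest of the analysis makes sense. Here is the definition of defaultServiceManager():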

sp<IServiceManager> defaultServiceManager()

{

if (gDefaultServiceManager != NULL) return gDefaultServiceManager;// also a singleton

{

AutoMutex _l(gDefaultServiceManagerLock);

if (gDefaultServiceManager == NULL) {

gDefaultServiceManager = interface_cast<IServiceManager>(// interface_cast is a template returning IServiceManager::asInterface(obj); asInterface is declared by the DECLARE_META_INTERFACE macro and implemented by the IMPLEMENT_META_INTERFACE macro

ProcessState::self()->getContextObject(NULL));// returns BpBinder(0)

}

}

return gDefaultServiceManager;//BpServiceManager(BpBinder(0))

}
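ProcessState::self() and defaultServiceManager() both follow the same lazily created, double-checked singleton pattern. The sketch below reproduces that shape in standard C++; ProcessStateSketch is a hypothetical class. One assumption worth flagging: the original code reads the global pointer before taking the lock, and in portable C++ that unlocked read must go through std::atomic to avoid a data race.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>

// Hypothetical stand-in for ProcessState: at most one instance per process.
class ProcessStateSketch {
public:
    static ProcessStateSketch* self() {
        // Fast path without the lock (like `if (gProcess != NULL) return gProcess;`).
        ProcessStateSketch* p = gInstance.load(std::memory_order_acquire);
        if (p != nullptr) return p;

        std::lock_guard<std::mutex> lock(gMutex);  // like AutoMutex _l(gProcessMutex)
        // Check again: another thread may have created the instance while we waited.
        p = gInstance.load(std::memory_order_relaxed);
        if (p == nullptr) {
            p = new ProcessStateSketch();  // intentionally never deleted: lives for the whole process
            gInstance.store(p, std::memory_order_release);
        }
        return p;
    }

private:
    ProcessStateSketch() = default;
    static std::atomic<ProcessStateSketch*> gInstance;
    static std::mutex gMutex;
};

std::atomic<ProcessStateSketch*> ProcessStateSketch::gInstance{nullptr};
std::mutex ProcessStateSketch::gMutex;
```

In new code, a function-local static (`static ProcessStateSketch instance; return &instance;`) gives the same guarantee with less ceremony, because C++11 makes its initialization thread-safe.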

First, ProcessState::self()->getContextObject(NULL):

sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& caller)

{

if (supportsProcesses()) {// checks that the binder driver was opened successfully

return getStrongProxyForHandle(0);// returns BpBinder(0)

} else {

return getContextObject(String16("default"), caller);

}

}

Next, getStrongProxyForHandle(0):

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)// note: the handle passed down is 0

{

sp<IBinder> result;

AutoMutex _l(mLock);

handle_entry* e = lookupHandleLocked(handle);// look up the entry for handle 0; create an empty one if none exists

if (e != NULL) {

// We need to create a new BpBinder if there isn't currently one, OR we

// are unable to acquire a weak reference on this current one. See comment

// in getWeakProxyForHandle() for more info about this.

IBinder* b = e->binder;

if (b == NULL || !e->refs->attemptIncWeak(this)) {

b = new BpBinder(handle);// !!! using the handle passed down, this creates BpBinder(0)

e->binder = b;

if (b) e->refs = b->getWeakRefs();

result = b;

} else {

// This little bit of nastyness is to allow us to add a primary

// reference to the remote proxy when this team doesn't have one

// but another team is sending the handle to us.

result.force_set(b);

e->refs->decWeak(this);

}

}

return result;

}
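The core of getStrongProxyForHandle() is a per-handle cache: reuse the existing proxy if it can still be referenced, otherwise create a new one. Below is a minimal sketch of that logic, assuming std::shared_ptr/std::weak_ptr in place of Android's sp/wp and RefBase weak references; HandleTable and ProxySketch are hypothetical, and the force_set() branch has no direct counterpart here.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <mutex>

// Hypothetical proxy type standing in for BpBinder.
struct ProxySketch {
    explicit ProxySketch(int32_t h) : handle(h) {}
    int32_t handle;
};

// Hypothetical handle table: one cached proxy per handle, held weakly so the
// table never keeps a proxy alive on its own.
class HandleTable {
public:
    std::shared_ptr<ProxySketch> getStrongProxyForHandle(int32_t handle) {
        std::lock_guard<std::mutex> lock(mu_);               // like AutoMutex _l(mLock)
        std::weak_ptr<ProxySketch>& entry = table_[handle];  // creates an empty entry if missing
        std::shared_ptr<ProxySketch> result = entry.lock();  // fails if the old proxy is gone
        if (!result) {
            // No proxy yet, or the previous one was destroyed: make a new one.
            result = std::make_shared<ProxySketch>(handle);
            entry = result;
        }
        return result;
    }

private:
    std::mutex mu_;
    std::map<int32_t, std::weak_ptr<ProxySketch>> table_;
};
```

Holding the cached entry weakly is the design choice that matters: the table lets callers share one proxy per handle without preventing that proxy from being destroyed once no caller needs it.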

So ProcessState::self()->getContextObject(NULL) returns BpBinder(0). Going back to defaultServiceManager(), this means:

gDefaultServiceManager = interface_cast<IServiceManager>(BpBinder(0));

Here is the definition of interface_cast:

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

Substituting the above gives:

gDefaultServiceManager = IServiceManager::asInterface(BpBinder(0));
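interface_cast adds no logic of its own; it is compile-time dispatch to the interface's static asInterface(). A self-contained sketch of that forwarding, with hypothetical IBinderSketch, IGreeter and BpGreeter types standing in for the real classes and std::shared_ptr standing in for sp<>:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for IBinder.
struct IBinderSketch {
    virtual ~IBinderSketch() = default;
};

// Same shape as the template above: forward to the interface's static asInterface().
template <typename INTERFACE>
std::shared_ptr<INTERFACE> interface_cast(const std::shared_ptr<IBinderSketch>& obj) {
    return INTERFACE::asInterface(obj);
}

// A toy interface and proxy, used only to exercise interface_cast.
struct IGreeter {
    virtual ~IGreeter() = default;
    virtual std::string greet() const = 0;
    static std::shared_ptr<IGreeter> asInterface(const std::shared_ptr<IBinderSketch>& obj);
};

struct BpGreeter : IGreeter {
    explicit BpGreeter(std::shared_ptr<IBinderSketch> remote) : remote_(std::move(remote)) {}
    std::string greet() const override { return "hello from proxy"; }
    std::shared_ptr<IBinderSketch> remote_;
};

std::shared_ptr<IGreeter> IGreeter::asInterface(const std::shared_ptr<IBinderSketch>& obj) {
    if (!obj) return nullptr;               // null binder gives a null interface
    return std::make_shared<BpGreeter>(obj);
}
```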

IServiceManager.h contains no definition of asInterface, but it does contain this macro:

class IServiceManager : public IInterface

{

public:

DECLARE_META_INTERFACE(ServiceManager);

...

};

The macro is defined as follows:

#define DECLARE_META_INTERFACE(INTERFACE) \

static const String16 descriptor; \

static sp<I##INTERFACE> asInterface(const sp<IBinder>& obj); \

virtual const String16& getInterfaceDescriptor() const; \

I##INTERFACE(); \

virtual ~I##INTERFACE();

Substituting ServiceManager gives:

static const String16 descriptor;

static sp<IServiceManager> asInterface(const sp<IBinder>& obj);

virtual const String16& getInterfaceDescriptor() const;

IServiceManager();

virtual ~IServiceManager();

So the macro declares an asInterface method.
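The DECLARE/IMPLEMENT pair relies on the preprocessor's ## operator to paste the I prefix onto the interface name, so a single argument yields the class name, the descriptor and its accessor. The miniature below is a hypothetical reduction of that technique (DECLARE_META_SKETCH, IMPLEMENT_META_SKETCH and IDemoManager are made-up names) that uses std::string instead of String16 and omits asInterface for brevity.

```cpp
#include <cassert>
#include <string>

// Declares the descriptor, its accessor and the constructor inside the class body.
#define DECLARE_META_SKETCH(INTERFACE)                          \
    static const std::string descriptor;                        \
    virtual const std::string& getInterfaceDescriptor() const;  \
    I##INTERFACE();

// Defines them at namespace scope; I##INTERFACE pastes "I" onto the argument.
#define IMPLEMENT_META_SKETCH(INTERFACE, NAME)                      \
    const std::string I##INTERFACE::descriptor(NAME);               \
    const std::string& I##INTERFACE::getInterfaceDescriptor() const \
    {                                                               \
        return I##INTERFACE::descriptor;                            \
    }                                                               \
    I##INTERFACE::I##INTERFACE() {}

class IDemoManager {
public:
    DECLARE_META_SKETCH(DemoManager)
    virtual ~IDemoManager() = default;
};

IMPLEMENT_META_SKETCH(DemoManager, "example.IDemoManager")
```

Note that the last line of each macro carries no trailing backslash; a stray one would splice the following source line into the macro.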

Its implementation is generated in IServiceManager.cpp by this macro:

IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager");

It is defined as follows:

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \

const String16 I##INTERFACE::descriptor(NAME); \

const String16& I##INTERFACE::getInterfaceDescriptor() const { \

return I##INTERFACE::descriptor; \

} \

sp<I##INTERFACE> I##INTERFACE::asInterface(const sp<IBinder>& obj) \

{ \

sp<I##INTERFACE> intr; \

if (obj != NULL) { \

intr = static_cast<I##INTERFACE*>( \

obj->queryLocalInterface( \

I##INTERFACE::descriptor).get()); \

if (intr == NULL) { \

intr = new Bp##INTERFACE(obj); \

} \

} \

return intr; \

} \

I##INTERFACE::I##INTERFACE() { } \

I##INTERFACE::~I##INTERFACE() { }

After substitution:

const String16 IServiceManager::descriptor("android.os.IServiceManager");

const String16& IServiceManager::getInterfaceDescriptor() const {

return IServiceManager::descriptor;

}

sp<IServiceManager> IServiceManager::asInterface(const sp<IBinder>& obj)

{

sp<IServiceManager> intr;

if (obj != NULL) {

intr = static_cast<IServiceManager*>(

obj->queryLocalInterface(

IServiceManager::descriptor).get());

if (intr == NULL) {

intr = new BpServiceManager(obj); // clearly, this returns a BpServiceManager object!!!

}

}

return intr;

}

IServiceManager::IServiceManager() { }

IServiceManager::~IServiceManager() { }

At this point, substituting back gives gDefaultServiceManager = BpServiceManager(BpBinder(0)).

In other words, sp<IServiceManager> sm = defaultServiceManager() = BpServiceManager(BpBinder(0));
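The essential decision inside asInterface() is this: if the IBinder is a local object in the same process, queryLocalInterface() returns the object itself; otherwise (as with BpBinder, whose queryLocalInterface() returns NULL) a Bp proxy is created around it. Here is a hedged, self-contained sketch of that decision; every type in it is hypothetical, and it uses dynamic_pointer_cast as a checked counterpart of the original static_cast.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-ins for IInterface and IBinder.
struct IInterfaceSketch {
    virtual ~IInterfaceSketch() = default;
};

struct BinderSketch {
    virtual ~BinderSketch() = default;
    // Default: not a local object (BpBinder behaves like this).
    virtual std::shared_ptr<IInterfaceSketch> queryLocalInterface(const std::string&) {
        return nullptr;
    }
};

struct ICounter : IInterfaceSketch {
    static const std::string descriptor;
    virtual int kind() const = 0;  // demo only: 1 = local object, 2 = proxy
    static std::shared_ptr<ICounter> asInterface(const std::shared_ptr<BinderSketch>& obj);
};
const std::string ICounter::descriptor = "example.ICounter";

// Object living in the caller's own process (the role a Bn class plays).
struct LocalCounter : BinderSketch, ICounter, std::enable_shared_from_this<LocalCounter> {
    int kind() const override { return 1; }
    std::shared_ptr<IInterfaceSketch> queryLocalInterface(const std::string& d) override {
        if (d == ICounter::descriptor)
            return std::static_pointer_cast<ICounter>(shared_from_this());
        return nullptr;
    }
};

// Proxy wrapping a binder that refers to another process (the role of BpServiceManager).
struct ProxyCounter : ICounter {
    explicit ProxyCounter(std::shared_ptr<BinderSketch> remote) : remote_(std::move(remote)) {}
    int kind() const override { return 2; }
    std::shared_ptr<BinderSketch> remote_;
};

// Same decision as the expanded IMPLEMENT_META_INTERFACE code.
std::shared_ptr<ICounter> ICounter::asInterface(const std::shared_ptr<BinderSketch>& obj) {
    std::shared_ptr<ICounter> intr;
    if (obj) {
        intr = std::dynamic_pointer_cast<ICounter>(obj->queryLocalInterface(ICounter::descriptor));
        if (!intr) intr = std::make_shared<ProxyCounter>(obj);
    }
    return intr;
}
```

This is why the same asInterface() call works on both sides: inside the service's own process it hands back the real object, and everywhere else it hands back a proxy.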

Let's look at BpServiceManager's constructor:

BpServiceManager(const sp<IBinder>& impl)

: BpInterface<IServiceManager>(impl)

{

}

Substituting gives:

BpServiceManager(BpBinder(0))

: BpInterface<IServiceManager>(BpBinder(0))

{

}

The definition of BpInterface:

template<typename INTERFACE>

class BpInterface : public INTERFACE, public BpRefBase

{

public:

BpInterface(const sp<IBinder>& remote);

protected:

virtual IBinder* onAsBinder();

};

Substituting IServiceManager:

class BpInterface : public IServiceManager, public BpRefBase

{

public:

BpInterface(BpBinder(0));// note this

protected:

virtual IBinder* onAsBinder();

};

Now the BpInterface constructor:

template<typename INTERFACE>

inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote)

: BpRefBase(remote)

{

}

Substituting:

BpRefBase(BpBinder(0))

Let's look at its definition:

BpRefBase::BpRefBase(const sp<IBinder>& o)

: mRemote(o.get()), mRefs(NULL), mState(0)

{

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

if (mRemote) {

mRemote->incStrong(this); // Removed on first IncStrong().

mRefs = mRemote->createWeak(this); // Held for our entire lifetime.

}

}

The key point here is mRemote(o.get()), i.e. mRemote = BpBinder(0). Remember this: the subclass BpServiceManager uses it (through remote()) to communicate over Binder.
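The class layering (BpServiceManager derives from BpInterface<IServiceManager>, which derives from both IServiceManager and BpRefBase, and BpRefBase holds mRemote) can be sketched with standard smart pointers. This is an illustration rather than the libbinder code: RemoteSketch plays BpBinder and only records transaction codes, all class names are hypothetical, and the code value 3 is a placeholder.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for BpBinder: records the transaction codes it is asked to send.
struct RemoteSketch {
    std::vector<uint32_t> sentCodes;
    int transact(uint32_t code) { sentCodes.push_back(code); return 0; }
};

// Role of BpRefBase: holds the remote object (mRemote) and exposes it via remote().
class RefBaseSketch {
protected:
    explicit RefBaseSketch(std::shared_ptr<RemoteSketch> o) : mRemote(std::move(o)) {}
    RemoteSketch* remote() const { return mRemote.get(); }
private:
    std::shared_ptr<RemoteSketch> mRemote;
};

// Role of an interface such as IServiceManager.
struct IManagerSketch {
    virtual ~IManagerSketch() = default;
    virtual int addService() = 0;
};

// Role of BpInterface<INTERFACE>: inherits the interface and the remote holder.
template <typename INTERFACE>
class ProxyBaseSketch : public INTERFACE, public RefBaseSketch {
public:
    explicit ProxyBaseSketch(std::shared_ptr<RemoteSketch> remote)
        : RefBaseSketch(std::move(remote)) {}
};

// Role of BpServiceManager: each method turns into remote()->transact(code).
class ProxyManagerSketch : public ProxyBaseSketch<IManagerSketch> {
public:
    static constexpr uint32_t ADD_SERVICE = 3;  // placeholder code value
    using ProxyBaseSketch<IManagerSketch>::ProxyBaseSketch;
    int addService() override { return remote()->transact(ADD_SERVICE); }
};
```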

3. AudioFlinger::instantiate():

void AudioFlinger::instantiate() {

defaultServiceManager()->addService(// calls addService() on the object returned by defaultServiceManager()

String16("media.audio_flinger"), new AudioFlinger());

}

From part 2 we know that defaultServiceManager() returns BpServiceManager(BpBinder(0)), so let's look at BpServiceManager's addService method:

virtual status_t addService(const String16& name, const sp<IBinder>& service)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);// i.e. calls BpBinder::transact()

return err == NO_ERROR ? reply.readInt32() : err;

}
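addService() flattens its arguments into a Parcel in a fixed order, and the receiver must read them back in the same order. The MiniParcel class below is a hypothetical, simplified stand-in (4-byte aligned items, length-prefixed UTF-16 strings); it is not android::Parcel, whose real wire format has more details, and the usage writes a plain int32 where the real code flattens a binder object.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical mini-Parcel: items are appended to a flat byte buffer, each padded
// to a multiple of 4 bytes, and must be read back in the order they were written.
class MiniParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }

    // Length-prefixed UTF-16 string (a simplification of Parcel::writeString16).
    void writeString16(const std::u16string& s) {
        writeInt32(static_cast<int32_t>(s.size()));
        append(s.data(), s.size() * sizeof(char16_t));
    }

    // Returns 0 if the buffer is exhausted (the demo has no error reporting).
    int32_t readInt32() {
        int32_t v = 0;
        if (data_.size() - pos_ < sizeof v) return 0;
        std::memcpy(&v, data_.data() + pos_, sizeof v);
        pos_ += sizeof v;
        return v;
    }

    // Returns an empty string if the length prefix is invalid or runs past the buffer.
    std::u16string readString16() {
        int32_t len = readInt32();
        if (len < 0) return {};
        std::size_t n = static_cast<std::size_t>(len);
        if (n > (data_.size() - pos_) / sizeof(char16_t)) return {};
        std::u16string s(n, u'\0');
        if (n > 0) std::memcpy(&s[0], data_.data() + pos_, n * sizeof(char16_t));
        pos_ = std::min(pos_ + pad4(n * sizeof(char16_t)), data_.size());
        return s;
    }

    std::size_t dataSize() const { return data_.size(); }

private:
    static std::size_t pad4(std::size_t n) { return (n + 3) & ~static_cast<std::size_t>(3); }

    void append(const void* p, std::size_t n) {
        const auto* bytes = static_cast<const uint8_t*>(p);
        data_.insert(data_.end(), bytes, bytes + n);
        data_.resize(data_.size() + (pad4(n) - n), 0);  // zero padding
    }

    std::vector<uint8_t> data_;
    std::size_t pos_ = 0;
};
```

For an addService-like request, one would write the interface descriptor, then the service name, then the service object, and the receiving side reads them back in exactly that order.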

addService calls remote()->transact, i.e. BpBinder::transact():

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(// IPCThreadState::transact()

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}
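BpBinder::transact() encodes a simple rule: once a call reports DEAD_OBJECT, the proxy marks itself dead and never contacts the driver again. A sketch of that rule, assuming a caller-supplied send function in place of IPCThreadState::transact(); the class name and the status values are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

// Hypothetical status values standing in for NO_ERROR and DEAD_OBJECT.
enum Status : int32_t { kNoError = 0, kDeadObject = -1 };

// Sketch of BpBinder's "once a binder has died, it will never come back to life" rule.
class ProxyWithDeathSketch {
public:
    explicit ProxyWithDeathSketch(std::function<Status(uint32_t)> send) : send_(std::move(send)) {}

    Status transact(uint32_t code) {
        if (!alive_) return kDeadObject;         // dead proxies fail fast, no driver call
        Status status = send_(code);             // role of IPCThreadState::transact()
        if (status == kDeadObject) alive_ = false;
        return status;
    }

private:
    std::function<Status(uint32_t)> send_;
    bool alive_ = true;                          // role of mAlive
};
```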

Now IPCThreadState::transact():

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "

<< handle << " / code " << TypeCode(code) << ": "

<< indent << data << dedent << endl;

}

if (err == NO_ERROR) {

LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),

(flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);// write the packet into the mOut buffer

}

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

if ((flags & TF_ONE_WAY) == 0) {

if (reply) {

err = waitForResponse(reply);// this is where talkWithDriver() and executeCommand() run

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "

<< handle << ": ";

if (reply) alog << indent << *reply << dedent << endl;

else alog << "(none requested)" << endl;

}

} else {

err = waitForResponse(NULL, NULL);

}

return err;

}

Two functions do the main work here: writeTransactionData() and waitForResponse().

writeTransactionData() mainly writes the packet into the mOut buffer. Now let's look at waitForResponse():

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

int32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;// talk to the Binder driver: write the mOut data to Binder, then wait for Binder's response

err = mIn.errorCheck();// check the data Binder returned

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = mIn.readInt32();// read the cmd

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {// dispatch on cmd

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

...

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

...

return err;

}
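waitForResponse() is a loop that refills its input from the driver and dispatches on each returned command until the awaited one appears. The following sketch keeps only that control flow: FakeDriver, the command values and the reply layout are hypothetical, and unlike the real talkWithDriver() the fake driver returns an empty batch instead of blocking.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical return-command codes; the real BR_* values come from the binder headers.
enum : int32_t { kTransactionComplete = 1, kReply = 2, kNoop = 3 };

// Hypothetical driver: each talk() hands back the next batch of returned words,
// or an empty batch when it has nothing left (the real driver would block instead).
struct FakeDriver {
    std::deque<std::vector<int32_t>> batches;
    std::vector<int32_t> talk() {
        if (batches.empty()) return {};
        std::vector<int32_t> batch = batches.front();
        batches.pop_front();
        return batch;
    }
};

// Control flow of waitForResponse(): refill input, dispatch each command,
// stop when the awaited command arrives. Returns the reply payload, 0 for a
// completed one-way call, or -1 if the fake driver runs dry.
int32_t waitForReply(FakeDriver& driver, bool oneWay, int* otherCommands) {
    while (true) {
        std::vector<int32_t> in = driver.talk();   // role of talkWithDriver() filling mIn
        if (in.empty()) return -1;
        for (std::size_t i = 0; i < in.size(); ++i) {
            switch (in[i]) {
            case kTransactionComplete:
                if (oneWay) return 0;              // nothing more to wait for
                break;
            case kReply:
                return (i + 1 < in.size()) ? in[i + 1] : -1;  // payload word follows
            default:
                ++*otherCommands;                  // role of executeCommand(cmd)
                break;
            }
        }
    }
}
```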

Now talkWithDriver():

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

...

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(HAVE_ANDROID_OS)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)// the real core: as a client, ioctl writes the packet in and then reads back the service side's data; as a service, the direction is reversed

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

...

return err;

}
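The do { ... } while (err == -EINTR) loop in talkWithDriver() is the standard POSIX idiom for reissuing a system call that a signal interrupted. A minimal sketch, where retryOnEintr is a hypothetical helper and the callable it receives stands in for ioctl(fd, BINDER_WRITE_READ, &bwr):

```cpp
#include <cassert>
#include <cerrno>
#include <functional>

// Reissues `call` while it fails with EINTR. `call` follows the usual
// system-call convention: >= 0 on success, -1 with errno set on failure.
// Returns 0 (NO_ERROR) on success or a negative errno, as talkWithDriver() does.
int retryOnEintr(const std::function<int()>& call) {
    int err;
    do {
        if (call() >= 0)
            err = 0;
        else
            err = -errno;
    } while (err == -EINTR);
    return err;
}
```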

At this point the client side's work is essentially done; the rest is handed over to service_manager.

3-1. The service_manager side:

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);// opens the binder driver directly, without the BBinder machinery

if (binder_become_context_manager(bs)) {// tells the binder driver "I am the boss", i.e. the context manager with handle 0

LOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

return 0;

}

Next, binder_loop(bs, svcmgr_handler):

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

for (;;) {// loop forever, working for all services

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);// talk to the binder driver (write first, then read); write_size is 0 here (bwr.write_size = 0), so this only reads data from the driver

if (res < 0) {

LOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);// parse the data read back; note that func here is svcmgr_handler

if (res == 0) {

LOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

LOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

Next, binder_parse(bs, 0, readbuf, bwr.read_consumed, func):

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uint32_t *ptr, uint32_t size, binder_handler func)

{

int r = 1;

uint32_t *end = ptr + (size / 4);

while (ptr < end) {

uint32_t cmd = *ptr++;

#if TRACE

fprintf(stderr,"%s:\n", cmd_name(cmd));

#endif

switch(cmd) {

...

case BR_TRANSACTION: {

struct binder_txn *txn = (void *) ptr;

if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {

LOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply);// carry out the client's request via func, i.e. svcmgr_handler

binder_send_reply(bs, &reply, txn->data, res);

}

ptr += sizeof(*txn) / sizeof(uint32_t);

break;

}

...

default:

LOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}
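binder_parse() walks a buffer of 32-bit words, and before treating the words after BR_TRANSACTION as a struct binder_txn it checks that enough bytes remain ("txn too small!"). The sketch below keeps that bounds check; the command codes and the TxnSketch layout are hypothetical, and it copies the payload with memcpy rather than casting the pointer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes and payload, loosely modeled on binder_parse().
enum : uint32_t { kCmdNoop = 0, kCmdTransaction = 1 };
struct TxnSketch { uint32_t code; uint32_t arg; };

// Walks a buffer of 32-bit words. Returns 1 when everything parsed,
// -1 on an unknown command or a truncated transaction.
int parseCommands(const uint32_t* ptr, std::size_t sizeBytes, std::vector<TxnSketch>* out) {
    const uint32_t* end = ptr + sizeBytes / 4;
    while (ptr < end) {
        uint32_t cmd = *ptr++;
        switch (cmd) {
        case kCmdNoop:
            break;
        case kCmdTransaction: {
            if (static_cast<std::size_t>(end - ptr) * sizeof(uint32_t) < sizeof(TxnSketch))
                return -1;                          // payload would run past the buffer
            TxnSketch txn;
            std::memcpy(&txn, ptr, sizeof txn);
            out->push_back(txn);
            ptr += sizeof(TxnSketch) / sizeof(uint32_t);
            break;
        }
        default:
            return -1;                              // like "parse: OOPS"
        }
    }
    return 1;
}
```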

For case BR_TRANSACTION, the work is mainly handed to svcmgr_handler(bs, txn, &msg, &reply):

int svcmgr_handler(struct binder_state *bs,

struct binder_txn *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

unsigned len;

void *ptr;

// LOGI("target=%p code=%d pid=%d uid=%d\n",

// txn->target, txn->code, txn->sender_pid, txn->sender_euid);

if (txn->target != svcmgr_handle)

return -1;

s = bio_get_string16(msg, &len);

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s));

return -1;

}

switch(txn->code) {// dispatch on code

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

ptr = do_find_service(bs, s, len);// look up the requested service

if (!ptr)

break;

bio_put_ref(reply, ptr);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

ptr = bio_get_ref(msg);

if (do_add_service(bs, s, len, ptr, txn->sender_euid))// add the service to the service list

return -1;

break;

case SVC_MGR_LIST_SERVICES: {

unsigned n = bio_get_uint32(msg);

si = svclist;

while ((n-- > 0) && si)// walk to the n-th service; callers list them all by querying n = 0, 1, 2, ...

si = si->next;

if (si) {

bio_put_string16(reply, si->name);

return 0;

}

return -1;

}

default:

LOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}

This is how servicemanager's work ties in with the services that servers register.
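The three main svcmgr_handler() cases (add, look up, and return the n-th entry) amount to a small name-to-handle registry. A hedged in-memory sketch follows: ServiceRegistrySketch is hypothetical, it skips the caller-uid check that the original do_add_service() can perform with txn->sender_euid, and std::map orders names alphabetically whereas the original svclist is a linked list.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>
#include <string>

// Hypothetical in-memory version of servicemanager's service list.
class ServiceRegistrySketch {
public:
    // Like SVC_MGR_ADD_SERVICE; rejects an empty name.
    bool add(const std::u16string& name, uint32_t handle) {
        if (name.empty()) return false;
        services_[name] = handle;
        return true;
    }

    // Like SVC_MGR_GET_SERVICE / SVC_MGR_CHECK_SERVICE.
    std::optional<uint32_t> find(const std::u16string& name) const {
        auto it = services_.find(name);
        if (it == services_.end()) return std::nullopt;
        return it->second;
    }

    // Like SVC_MGR_LIST_SERVICES: name of the n-th service, or nullopt past the end.
    std::optional<std::u16string> nameAt(uint32_t n) const {
        auto it = services_.begin();
        while (n-- > 0 && it != services_.end()) ++it;
        if (it == services_.end()) return std::nullopt;
        return it->first;
    }

private:
    std::map<std::u16string, uint32_t> services_;
};
```

A client lists every registered service by calling nameAt(0), nameAt(1), and so on until it gets nothing back, which mirrors how SVC_MGR_LIST_SERVICES is queried one index at a time.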

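To summarize: the client side sends data to Binder mainly through BpBinder::transact, while servicemanager reads from binder directly and then performs the corresponding operation. Later we will go on to analyze how concrete services and clients communicate through Binder.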

http://www.qrsdev.com/forum.php?mod=viewthread&tid=498&extra=page%3D1

解析android 流量監測的實現原理

解析android 流量監測的實現原理

Android進階 MVP設計模式實例

Android進階 MVP設計模式實例

Android Studio SlidingMenu導入/配置 FloatMath找不到符號解決方法

Android Studio SlidingMenu導入/配置 FloatMath找不到符號解決方法

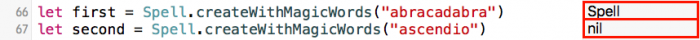

神奇的 Swift 錯誤處理

神奇的 Swift 錯誤處理