Editor: About Android Programming

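In the previous two articles we covered the setDataSource and prepare flows, which gave us mVideoTrack, mAudioTrack, mVideoSource and mAudioSource. The first two come from the setDataSource flow; the last two are created during prepare.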

status_t AwesomePlayer::setDataSource_l(const sp<MediaExtractor> &extractor) {…

if (!haveVideo && !strncasecmp(mime.string(), "video/", 6)) {

setVideoSource(extractor->getTrack(i));}

else if (!haveAudio && !strncasecmp(mime.string(), "audio/", 6)) {

setAudioSource(extractor->getTrack(i));

……………..

}

}

void AwesomePlayer::setVideoSource(sp<MediaSource> source) {

CHECK(source != NULL);

mVideoTrack = source;

}

void AwesomePlayer::setAudioSource(sp<MediaSource> source) {

CHECK(source != NULL);

mAudioTrack = source;

}
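
The track selection above can be sketched in isolation. This is a minimal model, not the AwesomePlayer code: `SelectedTracks`, `hasPrefixNoCase` and `selectTracks` are hypothetical names, and the tracks are represented only by their MIME strings. It shows that the first track whose MIME type starts with "video/" and the first that starts with "audio/" win, and that later tracks of the same kind are ignored.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical result type: index of the chosen track, or -1 if none was found.
struct SelectedTracks {
    int video = -1;
    int audio = -1;
};

// Case-insensitive prefix test, standing in for strncasecmp(mime, "video/", 6) == 0.
inline bool hasPrefixNoCase(const std::string &s, const std::string &prefix) {
    if (s.size() < prefix.size()) return false;
    for (std::size_t i = 0; i < prefix.size(); ++i) {
        if (std::tolower(static_cast<unsigned char>(s[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

// Keep the first video track and the first audio track, mirroring the
// haveVideo / haveAudio checks in setDataSource_l.
inline SelectedTracks selectTracks(const std::vector<std::string> &mimes) {
    SelectedTracks sel;
    for (std::size_t i = 0; i < mimes.size(); ++i) {
        if (sel.video < 0 && hasPrefixNoCase(mimes[i], "video/")) {
            sel.video = static_cast<int>(i);
        } else if (sel.audio < 0 && hasPrefixNoCase(mimes[i], "audio/")) {
            sel.audio = static_cast<int>(i);
        }
    }
    return sel;
}
```

Calling `selectTracks` with a list such as audio, video, video, text picks index 0 for audio and index 1 for video; the second video track is never used.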

mVideoSource = OMXCodec::Create(

mClient.interface(), mVideoTrack->getFormat(),

false, // createEncoder

mVideoTrack,

NULL, flags, USE_SURFACE_ALLOC ? mNativeWindow : NULL);

mAudioSource = OMXCodec::Create(

mClient.interface(), mAudioTrack->getFormat(),

false, // createEncoder

mAudioTrack);

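With mVideoTrack and mAudioTrack we located the matching decoders and initialized them. Now we turn to how MediaPlayer actually plays. We skip the upper-layer interfaces here; if they are unclear, go back to the setDataSource article. We go straight to the AwesomePlayer implementation. First, an overview sequence diagram: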

status_t AwesomePlayer::play_l() {

modifyFlags(SEEK_PREVIEW, CLEAR);

…………

modifyFlags(PLAYING, SET);

modifyFlags(FIRST_FRAME, SET); // set the PLAYING and FIRST_FRAME flags

…………………..

if (mAudioSource != NULL) { // mAudioSource is not NULL, so initialize the AudioPlayer

if (mAudioPlayer == NULL) {

if (mAudioSink != NULL) {

(1) mAudioPlayer = new AudioPlayer(mAudioSink, allowDeepBuffering, this);

mAudioPlayer->setSource(mAudioSource);

seekAudioIfNecessary_l();

}

}

CHECK(!(mFlags & AUDIO_RUNNING));

if (mVideoSource == NULL) { // audio only: start playback right away

….

(2) status_t err = startAudioPlayer_l(

false /* sendErrorNotification */);

modifyFlags((PLAYING | FIRST_FRAME), CLEAR);

…………..

return err;

}

}

}

……

if (mVideoSource != NULL) { // video present: post an event to the queue and wait for it to be handled

// Kick off video playback

(3) postVideoEvent_l();

if (mAudioSource != NULL && mVideoSource != NULL) { // both video and audio: check whether they stay in sync

(4) postVideoLagEvent_l();

}

}

}

…………..

return OK;

}

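In play_l we can see that an AudioPlayer is instantiated first to play the audio. If the content is audio only, it is played directly. Since we are playing a local video, step (2) is skipped and execution goes straight to steps (3) and (4). Let us look at postVideoEvent_l(), which is similar to what we saw when discussing prepareAsync_l: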
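
The branching in play_l can be summarized with a small sketch. It is only a model of the decision order described above: `planPlayback` is a hypothetical helper, the action names are plain strings, and error handling and the elided parts of play_l are left out.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Returns, in order, the numbered steps this simplified model of play_l takes
// for a given combination of audio and video sources.
inline std::vector<std::string> planPlayback(bool hasAudio, bool hasVideo) {
    std::vector<std::string> steps;
    if (hasAudio) {
        steps.push_back("createAudioPlayer");       // step (1)
        if (!hasVideo) {
            steps.push_back("startAudioPlayer");    // step (2): audio only
        }
    }
    if (hasVideo) {
        steps.push_back("postVideoEvent");          // step (3)
        if (hasAudio) {
            steps.push_back("postVideoLagEvent");   // step (4): needs both streams
        }
    }
    return steps;
}
```

For a local video with sound, `planPlayback(true, true)` yields steps (1), (3) and (4); in that case the audio player is started later, from onVideoEvent.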

void AwesomePlayer::postVideoEvent_l(int64_t delayUs) {

……………

mVideoEventPending = true;

mQueue.postEventWithDelay(mVideoEvent, delayUs < 0 ? 10000 : delayUs);

}

mVideoEvent was already defined when the AwesomePlayer was constructed:

mVideoEvent = new AwesomeEvent(this, &AwesomePlayer::onVideoEvent);
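
To make the event mechanism concrete, here is a minimal sketch of an event that is bound to a member function and re-posts itself after every frame. `SketchEventQueue` and `SketchPlayer` are hypothetical stand-ins for the real queue and for AwesomePlayer; the queue runs on a virtual clock instead of a thread, and the pending-flag checks are an assumption about the elided code. Only the default delay of 10000 us for a negative `delayUs` is taken from the listing above.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical stand-in for the player's event queue, driven by a virtual clock.
class SketchEventQueue {
public:
    void postEventWithDelay(std::function<void()> fn, int64_t delayUs) {
        mEvents.push(Entry{mNowUs + delayUs, mNextSeq++, std::move(fn)});
    }

    // Runs the earliest pending event and advances the clock to its due time.
    // Returns false when no event is pending.
    bool runNext() {
        if (mEvents.empty()) return false;
        Entry e = mEvents.top();
        mEvents.pop();
        mNowUs = e.whenUs;
        e.fn();
        return true;
    }

    int64_t nowUs() const { return mNowUs; }

private:
    struct Entry {
        int64_t whenUs;
        uint64_t seq;  // keeps events with equal due times in posting order
        std::function<void()> fn;
        bool operator>(const Entry &o) const {
            return whenUs != o.whenUs ? whenUs > o.whenUs : seq > o.seq;
        }
    };
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> mEvents;
    int64_t mNowUs = 0;
    uint64_t mNextSeq = 0;
};

// Hypothetical player: each video event "renders" one frame and posts the next event.
struct SketchPlayer {
    SketchEventQueue queue;
    bool videoEventPending = false;
    int framesRendered = 0;
    int framesAvailable;

    explicit SketchPlayer(int frames) : framesAvailable(frames) {}

    void postVideoEvent(int64_t delayUs = -1) {
        if (videoEventPending) return;  // assumed guard against double posting
        videoEventPending = true;
        // The lambda plays the role of AwesomeEvent(this, &AwesomePlayer::onVideoEvent).
        queue.postEventWithDelay([this] { onVideoEvent(); },
                                 delayUs < 0 ? 10000 : delayUs);
    }

    void onVideoEvent() {
        if (!videoEventPending) return;
        videoEventPending = false;
        if (framesRendered == framesAvailable) return;  // end of stream in this model
        ++framesRendered;   // stands in for read() followed by render()
        postVideoEvent();   // schedule the next frame
    }
};
```

Posting a single event is enough to start the loop: every handled event schedules the next one until the model runs out of frames.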

So we look at the onVideoEvent method:

void AwesomePlayer::onVideoEvent() {

if (!mVideoBuffer) {

for (;;) {

(1) status_t err = mVideoSource->read(&mVideoBuffer, &options); // mVideoSource is the OMXCodec

options.clearSeekTo();

++mStats.mNumVideoFramesDecoded;

}

(2) status_t err = startAudioPlayer_l();

if ((mNativeWindow != NULL)

&& (mVideoRendererIsPreview || mVideoRenderer == NULL)) {

mVideoRendererIsPreview = false;

(3) initRenderer_l();

}

if (mVideoRenderer != NULL) {

mSinceLastDropped++;

(4) mVideoRenderer->render(mVideoBuffer);

}

(5) postVideoEvent_l();

}

We can see that the read method decodes the samples one by one to obtain a video buffer, which is then rendered to the SurfaceTexture.

The read method:

status_t OMXCodec::read(

MediaBuffer **buffer, const ReadOptions *options) {

if (mInitialBufferSubmit) {

mInitialBufferSubmit = false;

if (seeking) {

CHECK(seekTimeUs >= 0);

mSeekTimeUs = seekTimeUs;

mSeekMode = seekMode;

// There's no reason to trigger the code below, there's

// nothing to flush yet.

seeking = false;

mPaused = false;

}

drainInputBuffers(); // corresponds to emptyBuffer, the input side

if (mState == EXECUTING) {

// Otherwise mState == RECONFIGURING and this code will trigger

// after the output port is reenabled.

fillOutputBuffers(); // corresponds to fillBuffer, the output side

}

}

….

size_t index = *mFilledBuffers.begin();

mFilledBuffers.erase(mFilledBuffers.begin());

BufferInfo *info = &mPortBuffers[kPortIndexOutput].editItemAt(index);

CHECK_EQ((int)info->mStatus, (int)OWNED_BY_US);

info->mStatus = OWNED_BY_CLIENT;

info->mMediaBuffer->add_ref();

if (mSkipCutBuffer != NULL) {

mSkipCutBuffer->submit(info->mMediaBuffer);

}

*buffer = info->mMediaBuffer;

}

Before discussing read, let us review the OMXCodec::start method from the prepare phase, because it is closely tied to read. The start method:

status_t OMXCodec::start(MetaData *meta) {

Mutex::Autolock autoLock(mLock);

……….

sp<MetaData> params = new MetaData;

if (mQuirks & kWantsNALFragments) {

params->setInt32(kKeyWantsNALFragments, true);

}

if (meta) {

int64_t startTimeUs = 0;

int64_t timeUs;

if (meta->findInt64(kKeyTime, &timeUs)) {

startTimeUs = timeUs;

}

params->setInt64(kKeyTime, startTimeUs);

}

status_t err = mSource->start(params.get()); // taking MP4 as the example, this is MPEG4Source

if (err != OK) {

return err;

}

mCodecSpecificDataIndex = 0;

mInitialBufferSubmit = true;

mSignalledEOS = false;

mNoMoreOutputData = false;

mOutputPortSettingsHaveChanged = false;

mSeekTimeUs = -1;

mSeekMode = ReadOptions::SEEK_CLOSEST_SYNC;

mTargetTimeUs = -1;

mFilledBuffers.clear();

mPaused = false;

return init();

}

status_t OMXCodec::init() {

….

err = allocateBuffers();

if (mQuirks & kRequiresLoadedToIdleAfterAllocation) {

err = mOMX->sendCommand(mNode, OMX_CommandStateSet, OMX_StateIdle);

CHECK_EQ(err, (status_t)OK);

setState(LOADED_TO_IDLE); // the command sent above moves the component to Idle; after two callbacks the component ends up in OMX_StateExecuting

}

….

}
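
The "two callbacks" mentioned in the comment above can be sketched as a small state machine. This is a simplified model under stated assumptions: `SketchCodec`, `OmxState` and `onStateChange` are hypothetical names, only the start-up states are modelled, and unexpected callbacks are simply ignored here.

```cpp
#include <cassert>

// Subset of codec states relevant to start-up in this sketch.
enum class CodecState { LOADED, LOADED_TO_IDLE, IDLE_TO_EXECUTING, EXECUTING };

// State reported by a "state change complete" callback from the component.
enum class OmxState { Idle, Executing };

struct SketchCodec {
    CodecState state = CodecState::LOADED;
    int commandsSent = 0;  // number of state-set commands sent to the component

    // Models init(): after buffer allocation, request the Idle state.
    void init() {
        ++commandsSent;                        // sendCommand(..., OMX_StateIdle)
        state = CodecState::LOADED_TO_IDLE;
    }

    // Models the handling of a state-change callback.
    void onStateChange(OmxState newState) {
        if (newState == OmxState::Idle && state == CodecState::LOADED_TO_IDLE) {
            ++commandsSent;                    // first callback: now request Executing
            state = CodecState::IDLE_TO_EXECUTING;
        } else if (newState == OmxState::Executing &&
                   state == CodecState::IDLE_TO_EXECUTING) {
            state = CodecState::EXECUTING;     // second callback: ready to decode
        }
        // Any other combination is ignored in this sketch.
    }
};
```

The first callback confirms Idle and triggers the request for Executing; the second confirms Executing, which is the state read() expects before it calls fillOutputBuffers().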

Since we use MP4 as the example, mSource is an MPEG4Source, which is defined in MPEG4Extractor.cpp. Let us see what its start method does:

status_t MPEG4Source::start(MetaData *params) {

Mutex::Autolock autoLock(mLock);

…………..

mGroup = new MediaBufferGroup;

int32_t max_size;

CHECK(mFormat->findInt32(kKeyMaxInputSize, &max_size));

mGroup->add_buffer(new MediaBuffer(max_size));

mSrcBuffer = new uint8_t[max_size];

mStarted = true;

return OK;

}

So it sets up the input buffers, sized to the maximum input size (kKeyMaxInputSize).

Next, let us look at allocateBuffers():

status_t OMXCodec::allocateBuffers() {

status_t err = allocateBuffersOnPort(kPortIndexInput); // set up the input port's buffers: count, size, etc. (see OMX_PARAM_PORTDEFINITIONTYPE)

if (err != OK) {

return err;

}

return allocateBuffersOnPort(kPortIndexOutput); // set up the output port and dequeue buffers for the OMX side

}

OMX_PARAM_PORTDEFINITIONTYPE holds the component's port configuration.

typedef struct OMX_PARAM_PORTDEFINITIONTYPE {

OMX_U32 nSize; /**< Size of the structure in bytes */

OMX_VERSIONTYPE nVersion; /**< OMX specification version information */

OMX_U32 nPortIndex; /**< Port number the structure applies to */

OMX_DIRTYPE eDir; /**< Direction (input or output) of this port */

OMX_U32 nBufferCountActual; /**< The actual number of buffers allocated on this port */

OMX_U32 nBufferCountMin; /**< The minimum number of buffers this port requires */

OMX_U32 nBufferSize; /**< Size, in bytes, for buffers to be used for this channel */

OMX_BOOL bEnabled; /**< Ports default to enabled and are enabled/disabled by

OMX_CommandPortEnable/OMX_CommandPortDisable.

When disabled a port is unpopulated. A disabled port

is not populated with buffers on a transition to IDLE. */

OMX_BOOL bPopulated; /**< Port is populated with all of its buffers as indicated by

nBufferCountActual. A disabled port is always unpopulated.

An enabled port is populated on a transition to OMX_StateIdle

and unpopulated on a transition to loaded. */

OMX_PORTDOMAINTYPE eDomain; /**< Domain of the port. Determines the contents of metadata below. */

union {

OMX_AUDIO_PORTDEFINITIONTYPE audio;

OMX_VIDEO_PORTDEFINITIONTYPE video;

OMX_IMAGE_PORTDEFINITIONTYPE image;

OMX_OTHER_PORTDEFINITIONTYPE other;

} format;

OMX_BOOL bBuffersContiguous;

OMX_U32 nBufferAlignment;

} OMX_PARAM_PORTDEFINITIONTYPE;

Where do the values in OMX_PARAM_PORTDEFINITIONTYPE come from? They come from the decoder, and include the buffer size and buffer count of the input and output ports.

status_t OMXCodec::allocateBuffersOnPort(OMX_U32 portIndex) {

if (mNativeWindow != NULL && portIndex == kPortIndexOutput) {

return allocateOutputBuffersFromNativeWindow(); // the output port takes this path: allocate memory for the output port and dequeue buffers for OMX

}

OMX_PARAM_PORTDEFINITIONTYPE def;

InitOMXParams(&def);

def.nPortIndex = portIndex;

err = mOMX->getParameter(

mNode, OMX_IndexParamPortDefinition, &def, sizeof(def)); // fetch the OMX_PARAM_PORTDEFINITIONTYPE settings from the component; see the struct above for the fields

if (err != OK) {

return err;

}

size_t totalSize = def.nBufferCountActual * def.nBufferSize; // buffer count and per-buffer size of this input/output port, as returned by getParameter

mDealer[portIndex] = new MemoryDealer(totalSize, "OMXCodec");

for (OMX_U32 i = 0; i < def.nBufferCountActual; ++i) {

sp<IMemory> mem = mDealer[portIndex]->allocate(def.nBufferSize);

CHECK(mem.get() != NULL);

BufferInfo info;

info.mData = NULL;

info.mSize = def.nBufferSize;

IOMX::buffer_id buffer;

if (portIndex == kPortIndexInput

&& ((mQuirks & kRequiresAllocateBufferOnInputPorts)

|| (mFlags & kUseSecureInputBuffers))) {

if (mOMXLivesLocally) {

mem.clear();

err = mOMX->allocateBuffer(

mNode, portIndex, def.nBufferSize, &buffer,

&info.mData); // allocate memory for the input port and point info.mData at the buffer the component allocated on mNode

…………….

info.mBuffer = buffer;

info.mStatus = OWNED_BY_US;

info.mMem = mem;

info.mMediaBuffer = NULL;

mPortBuffers[portIndex].push(info); // each BufferInfo is stored in Vector<BufferInfo> mPortBuffers[2] for bookkeeping and used later by read; index 0 is the input port, 1 is the output port

………………………….

}
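
The sizing logic in allocateBuffersOnPort can be illustrated with a self-contained sketch. It assumes a plain byte vector in place of the MemoryDealer heap, and `PortDef`, `SketchBufferInfo`, `SketchPort` and `allocateSketchPort` are hypothetical names. It shows the pool being sized as count times buffer size and then carved into equally sized buffers that all start out owned by the codec.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// The two fields of the port definition that the allocation loop relies on.
struct PortDef {
    uint32_t nBufferCountActual;
    uint32_t nBufferSize;
};

enum class PoolBufferStatus { OWNED_BY_US, OWNED_BY_COMPONENT };

// Bookkeeping record for one buffer carved out of the pool.
struct SketchBufferInfo {
    std::size_t offset;  // start of this buffer inside the pool
    std::size_t size;
    PoolBufferStatus status;
};

struct SketchPort {
    std::vector<uint8_t> pool;              // stands in for the MemoryDealer heap
    std::vector<SketchBufferInfo> buffers;  // stands in for mPortBuffers[portIndex]
};

// One pool of count * size bytes, split into `count` buffers of `size` bytes each.
inline SketchPort allocateSketchPort(const PortDef &def) {
    SketchPort port;
    const std::size_t totalSize =
        static_cast<std::size_t>(def.nBufferCountActual) * def.nBufferSize;
    port.pool.resize(totalSize);
    for (uint32_t i = 0; i < def.nBufferCountActual; ++i) {
        SketchBufferInfo info;
        info.offset = static_cast<std::size_t>(i) * def.nBufferSize;
        info.size = def.nBufferSize;
        info.status = PoolBufferStatus::OWNED_BY_US;  // nothing handed over yet
        port.buffers.push_back(info);
    }
    return port;
}
```

With four buffers of 1024 bytes each, the pool is 4096 bytes and the last buffer starts at offset 3072.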

With the start method reviewed, we now turn to the read method:

status_t OMXCodec::read(

MediaBuffer **buffer, const ReadOptions *options) {

if (mInitialBufferSubmit) {

mInitialBufferSubmit = false;

………….

drainInputBuffers();

if (mState == EXECUTING) {

// Otherwise mState == RECONFIGURING and this code will trigger

// after the output port is reenabled.

fillOutputBuffers();

}

…………………..

size_t index = *mFilledBuffers.begin();

mFilledBuffers.erase(mFilledBuffers.begin());

BufferInfo *info = &mPortBuffers[kPortIndexOutput].editItemAt(index);

CHECK_EQ((int)info->mStatus, (int)OWNED_BY_US);

info->mStatus = OWNED_BY_CLIENT;

info->mMediaBuffer->add_ref();

if (mSkipCutBuffer != NULL) {

mSkipCutBuffer->submit(info->mMediaBuffer);

}

*buffer = info->mMediaBuffer;

}

First look at the drainInputBuffers method, which mainly reads source data from the MediaSource:

void OMXCodec::drainInputBuffers() {

CHECK(mState == EXECUTING || mState == RECONFIGURING);

if (mFlags & kUseSecureInputBuffers) {

Vector<BufferInfo> *buffers = &mPortBuffers[kPortIndexInput]; // mPortBuffers holds the input/output BufferInfo entries saved by allocateBuffersOnPort

for (size_t i = 0; i < buffers->size(); ++i) { // loop over the input-port buffers that can be filled in one pass; the count is determined by the component and differs between vendors' decoders

if (!drainAnyInputBuffer() // fill a buffer with source data and hand it to the decoder

|| (mFlags & kOnlySubmitOneInputBufferAtOneTime)) {

break;

}

}

}

………………

}

bool OMXCodec::drainAnyInputBuffer() {

return drainInputBuffer((BufferInfo *)NULL);

}

bool OMXCodec::drainInputBuffer(BufferInfo *info) {

for (;;) {

MediaBuffer *srcBuffer;

if (mSeekTimeUs >= 0) {

if (mLeftOverBuffer) {

mLeftOverBuffer->release();

mLeftOverBuffer = NULL;

}

MediaSource::ReadOptions options;

options.setSeekTo(mSeekTimeUs, mSeekMode);

mSeekTimeUs = -1;

mSeekMode = ReadOptions::SEEK_CLOSEST_SYNC; // seek mode (reset to the default)

mBufferFilled.signal();

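err = mSource->read(&srcBuffer, &options); // read from the MediaSource; for MPEG4 the implementation is in MPEG4Extractor.cpp. Using the seek mode and seek time, it looks up the sample's metadata in the sample table and stores the data in srcBuffer.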

if (mFlags & kUseSecureInputBuffers) {

info = findInputBufferByDataPointer(srcBuffer->data()); // find the BufferInfo whose mData matches the data pointer of the source buffer

CHECK(info != NULL);

}

err = mOMX->emptyBuffer(

mNode, info->mBuffer, 0, offset,

flags, timestampUs); // maps to OMX_EmptyThisBuffer on the component; the callback message is EmptyBufferDone

if (err != OK) {

setState(ERROR);

return false;

}

info->mStatus = OWNED_BY_COMPONENT; // mark the buffer as OWNED_BY_COMPONENT

}

From the analysis above, we know that after emptyBuffer an EmptyBufferDone callback arrives within 5 msec. Let us see how OMXCodec handles that callback:

void OMXCodec::on_message(const omx_message &msg) {

case omx_message::EMPTY_BUFFER_DONE:

………………

IOMX::buffer_id buffer = msg.u.extended_buffer_data.buffer;

CODEC_LOGV("EMPTY_BUFFER_DONE(buffer: %p)", buffer);

Vector<BufferInfo> *buffers = &mPortBuffers[kPortIndexInput];

size_t i = 0;

while (i < buffers->size() && (*buffers)[i].mBuffer != buffer) {

++i;

}

BufferInfo* info = &buffers->editItemAt(i);

// look up the BufferInfo in the Vector<BufferInfo> by buffer_id

info->mStatus = OWNED_BY_US; // set the status of info to OWNED_BY_US

info->mMediaBuffer->release(); // release the MediaBuffer

info->mMediaBuffer = NULL;

…………….

if (mState != ERROR

&& mPortStatus[kPortIndexInput] != SHUTTING_DOWN) {

CHECK_EQ((int)mPortStatus[kPortIndexInput], (int)ENABLED);

if (mFlags & kUseSecureInputBuffers) {

drainAnyInputBuffer(); // hand the next chunk of data to the component

} else {

drainInputBuffer(&buffers->editItemAt(i));

}

}
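
The ownership round trip on the input port can be sketched as follows. This is a simplified model, not the OMXCodec code: `SketchInputPort`, `InputBuffer` and the `samplesLeft` counter are hypothetical, the component is not simulated, and the callback is triggered by calling `onEmptyBufferDone` by hand. It shows a buffer going to the component on emptyBuffer, coming back on EMPTY_BUFFER_DONE, and being refilled immediately if the source still has data.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

enum class InputStatus { OWNED_BY_US, OWNED_BY_COMPONENT };

struct InputBuffer {
    uint32_t id;         // plays the role of IOMX::buffer_id
    InputStatus status;
};

struct SketchInputPort {
    std::vector<InputBuffer> buffers;
    int samplesLeft = 0;  // samples the source can still deliver
    int submitted = 0;    // number of emptyBuffer calls made so far

    // Models drainInputBuffer: fill one buffer and hand it to the component.
    bool drain(InputBuffer &info) {
        if (info.status != InputStatus::OWNED_BY_US || samplesLeft == 0) {
            return false;
        }
        --samplesLeft;
        ++submitted;                                    // emptyBuffer(...)
        info.status = InputStatus::OWNED_BY_COMPONENT;
        return true;
    }

    // Models drainInputBuffers: submit buffers until one cannot be filled.
    void drainAll() {
        for (InputBuffer &b : buffers) {
            if (!drain(b)) break;
        }
    }

    // Models the EMPTY_BUFFER_DONE branch: find the buffer by id, take it
    // back, then try to refill it right away.
    bool onEmptyBufferDone(uint32_t id) {
        std::size_t i = 0;
        while (i < buffers.size() && buffers[i].id != id) {
            ++i;
        }
        if (i == buffers.size()) return false;  // unknown id
        buffers[i].status = InputStatus::OWNED_BY_US;
        drain(buffers[i]);
        return true;
    }
};
```

As long as the source has samples, each returned buffer is resubmitted at once, which keeps the decoder's input side busy without any polling from read().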

After emptyBuffer comes fillOutputBuffer:

void OMXCodec::fillOutputBuffer(BufferInfo *info) {

CHECK_EQ((int)info->mStatus, (int)OWNED_BY_US);

if (mNoMoreOutputData) {

CODEC_LOGV("There is no more output data available, not "

"calling fillOutputBuffer"); // no more data, so return

return;

}

if (info->mMediaBuffer != NULL) {

sp<GraphicBuffer> graphicBuffer = info->mMediaBuffer->graphicBuffer();

if (graphicBuffer != 0) {

// When using a native buffer we need to lock the buffer before

// giving it to OMX.

CODEC_LOGV("Calling lockBuffer on %p", info->mBuffer);

int err = mNativeWindow->lockBuffer(mNativeWindow.get(),

graphicBuffer.get()); // lock the buffer in preparation for rendering the picture

if (err != 0) {

CODEC_LOGE("lockBuffer failed w/ error 0x%08x", err);

setState(ERROR);

return;

}

}

}

CODEC_LOGV("Calling fillBuffer on buffer %p", info->mBuffer);

status_t err = mOMX->fillBuffer(mNode, info->mBuffer); // fill the output-port buffer

……….

info->mStatus = OWNED_BY_COMPONENT;

}

Once fillBuffer has produced mVideoBuffer, the picture can be displayed by mVideoRenderer->render(mVideoBuffer); in AwesomePlayer's onVideoEvent method.
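
The output side can be sketched in the same way. The model below is an assumption-laden simplification: `SketchOutputPort` is hypothetical, `read` is non-blocking instead of waiting on a condition variable, the fill-done callback is invoked by hand, and `release` compresses what happens after rendering into a single step. It shows how a filled buffer index is queued, picked up by read in arrival order, and marked OWNED_BY_CLIENT while the player holds it.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <vector>

enum class OutputStatus { OWNED_BY_US, OWNED_BY_COMPONENT, OWNED_BY_CLIENT };

struct SketchOutputPort {
    std::vector<OutputStatus> buffers;
    std::list<std::size_t> filled;  // plays the role of mFilledBuffers

    // Models fillOutputBuffer: hand an empty buffer to the component.
    void fillOutputBuffer(std::size_t index) {
        if (buffers[index] == OutputStatus::OWNED_BY_US) {
            buffers[index] = OutputStatus::OWNED_BY_COMPONENT;  // fillBuffer(...)
        }
    }

    // Models the fill-done callback: the component returned a decoded frame.
    void onFillBufferDone(std::size_t index) {
        buffers[index] = OutputStatus::OWNED_BY_US;
        filled.push_back(index);
    }

    // Non-blocking stand-in for read(): returns false if no frame is ready.
    bool read(std::size_t *index) {
        if (filled.empty()) return false;
        *index = filled.front();
        filled.pop_front();
        buffers[*index] = OutputStatus::OWNED_BY_CLIENT;  // the player holds it now
        return true;
    }

    // The player is done with the frame: take it back and refill it.
    void release(std::size_t index) {
        buffers[index] = OutputStatus::OWNED_BY_US;
        fillOutputBuffer(index);
    }
};
```

Frames come out of `read` in the order the component finished them, not in buffer-index order, which is why the filled indices are kept in a list.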

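That is the playback process, and it completes the walkthrough of the local multimedia playback flow. Many details remain that readers will need to dig into themselves; if anything needs to be supplemented later, I will add it.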

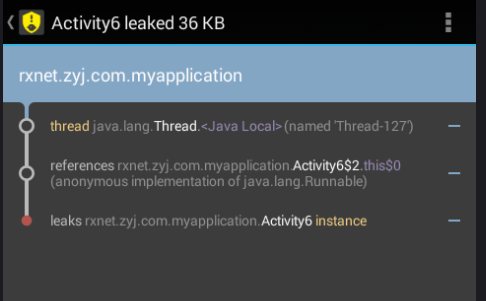
